The soft mechanical whir of servos tuning, followed by an impossibly complex Bach fugue played with inhuman precision – this isn't science fiction anymore. Across the globe, a quiet revolution is unfolding as Instruments Robots, engineered marvels merging advanced robotics, AI, and deep musical understanding, are mastering traditional instruments like pianos, guitars, and violins with astonishing skill. This journey explores the intricate world beyond mere novelty, revealing how these machines are evolving from programmed players into collaborative musicians capable of technique, expression, and even surprising moments of interpretative genius, fundamentally reshaping our perception of musical possibility and blurring the line between algorithm and artistry.

From Automata to Virtuosi: The Evolution of Robotic Musicians

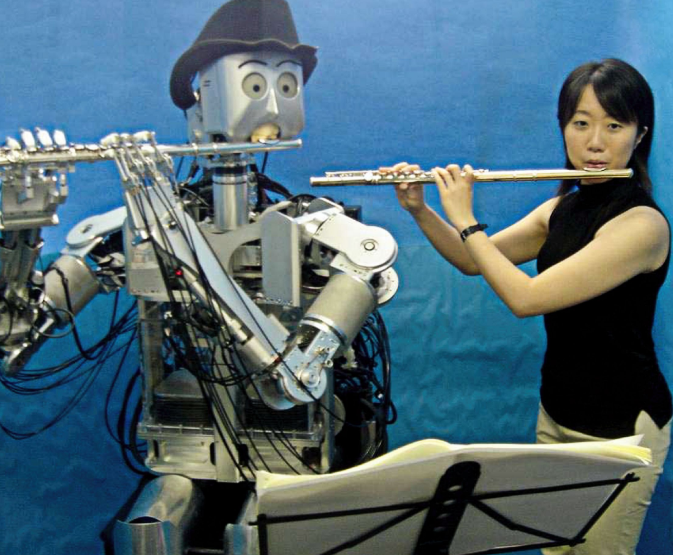

The concept of machines creating music isn't new. For centuries, ingenious clockmakers crafted intricate automata – mechanical dolls designed to mimic the actions of musicians playing flutes, organs, or harpsichords. While these marvels of their time demonstrated mechanical ingenuity, their performances were rigidly predetermined, locked into repeating loops driven by pinned cylinders or punched cards. They simulated playing rather than understanding music itself. The true evolution towards today's Instruments Robots began with the rise of computer control and, crucially, artificial intelligence.

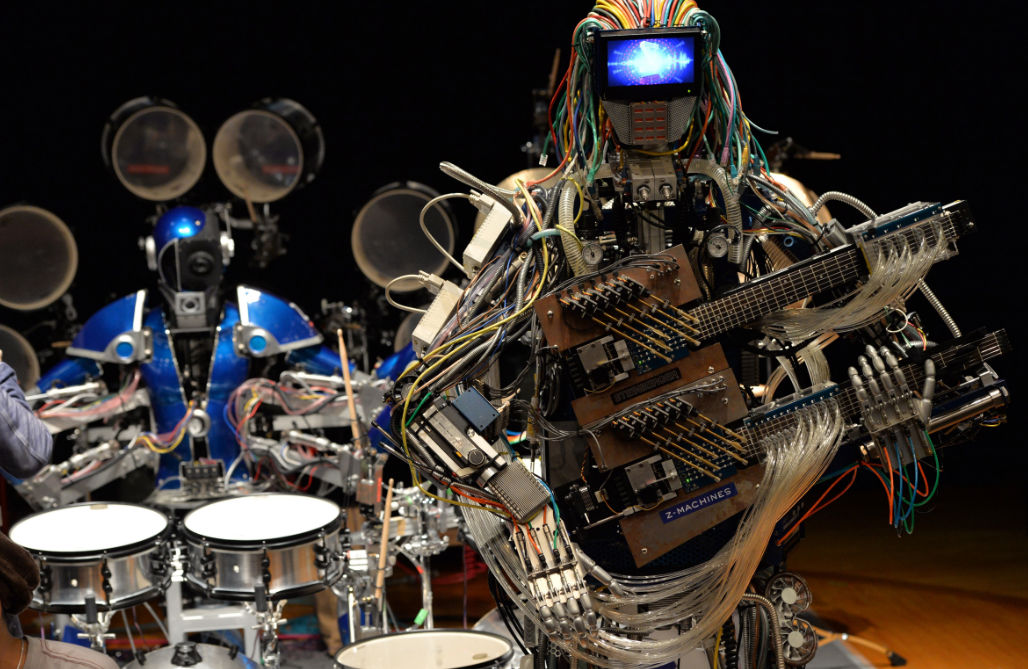

Modern Instruments Robots represent a quantum leap beyond their clockwork ancestors. They combine:

Precision Robotics

Advanced manipulators and actuators engineered for speed, control, and nuance, capable of striking piano keys with calibrated force, precisely fingering guitar frets, or bowing violin strings with varying speed and pressure.

Machine Perception

Sensors (cameras, microphones, force feedback) allowing them to 'sense' their instrument and environment, enabling dynamic adjustments and responsiveness during performance.

Artificial Intelligence

The brain behind the operation. AI algorithms power complex capabilities like music transcription, style analysis, improvisation, emotional contour generation, and real-time adaptation to other musicians or the acoustics of a space. It moves the robot beyond playback into the realm of interpretation and creation.

This fusion transforms robotic players from static operators into dynamic musical agents. They are no longer simply mimicking *human* actions; they are developing new ways to interact with musical instruments informed by the physical constraints of the machine, the acoustic properties of the instrument, and the computational possibilities of AI.


Decoding Genius: The Technology Empowering Instruments Robots

Beneath the graceful movements of a robotic pianist lies a symphony of sophisticated technology. Creating machines that navigate the physical and expressive challenges of real instruments requires breakthroughs across multiple fields:

Robotics: Precision Beyond Physiology

Designing robotic limbs to perform actions as delicate as stopping a violin string or as forceful as striking a drum head with variable intensity demands exceptional engineering. Materials science plays a crucial role: alloys and composites yield structures that are lightweight yet rigid enough for precise control. The actuators that serve as the limbs' muscles, often high-speed servos, stepper motors, or pneumatic systems, must respond quickly and follow smooth velocity and torque curves. Force/torque sensors embedded in fingers or end effectors provide real-time feedback, allowing the robot to adjust its touch mid-gesture, which is crucial for avoiding broken strings on a guitar or producing a smooth *legato* on the piano. Vision systems, ranging from simple positional tracking to full 3D spatial mapping, guide limbs to precise points on the instrument and compensate for small shifts in its position.

AI: The Creative & Interpretative Engine

Robotics provides the physical capability, but AI imbues Instruments Robots with musicality. Core AI techniques include:

Machine Learning for Music Analysis: Systems are trained on vast libraries of sheet music and audio recordings to understand structure (melody, harmony, rhythm), identify stylistic nuances (rubato, articulation, dynamics), and recognize compositional signatures.

Generative AI: Sequence models such as LSTMs or Transformers enable robots to compose original pieces or generate plausible, stylistically appropriate improvisations based on learned patterns, pushing beyond mere reproduction.

Reinforcement Learning: Robots can 'learn' how to play better through trial and error, optimizing their movements and interpretations based on feedback regarding sound quality or adherence to stylistic goals.

Emotional AI Modeling: Some advanced systems attempt to algorithmically model emotional contours within music, adjusting parameters like tempo, articulation, and dynamics to evoke specific feelings or respond to the perceived emotional intent of a human collaborator.
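The emotional-modeling idea above can be sketched as a simple mapping from an emotion estimate to performance parameters. This is only an illustration: the `shape_expression` function, the valence/arousal inputs, and every coefficient below are assumptions chosen for readability, not values taken from any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Expression:
    tempo_bpm: float     # playback tempo in beats per minute
    velocity: int        # MIDI-style loudness, 1..127
    articulation: float  # fraction of each note's written length that is held


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def shape_expression(valence: float, arousal: float,
                     base_tempo: float = 100.0) -> Expression:
    """Map a (valence, arousal) estimate, each assumed to lie in [-1, 1],
    to tempo, loudness, and articulation. The linear coefficients are
    illustrative guesses, not measured values."""
    valence = clamp(valence, -1.0, 1.0)
    arousal = clamp(arousal, -1.0, 1.0)
    tempo = base_tempo * (1.0 + 0.25 * arousal)             # higher arousal: faster
    velocity = round(clamp(72 + 40 * arousal, 1, 127))      # higher arousal: louder
    articulation = clamp(0.85 - 0.25 * valence, 0.4, 1.0)   # lower valence: more legato
    return Expression(tempo, velocity, articulation)


calm_and_sad = shape_expression(valence=-1.0, arousal=-1.0)
print(calm_and_sad)
```

A real system would presumably learn such a mapping from annotated performances rather than hard-code it; the point here is only that "emotion" ends up as concrete numbers the control layer can act on.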

Control Systems: The Conductor of Complexity

The robot's control architecture is its central nervous system. It integrates real-time data streams from sensors (where is my finger now? how much force is the string exerting? is the pitch correct?), interprets them against the AI's musical directives (play this note with a *forte-piano* attack, apply vibrato here), and sends updated commands back to the actuators. This high-bandwidth feedback loop must run with latency low enough that listeners perceive no lag, or the performance loses its seamlessness and expressiveness. Modern systems often employ hierarchical control: low-level loops manage precise motor positions and forces, while high-level AI dictates the overall musical expression.
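A minimal sketch of that two-level split, under stated assumptions: the high level turns a dynamic marking into a target key force, and a low-level proportional-integral (PI) loop drives a toy actuator toward it. The force table, the controller gains, and the first-order actuator model are all hypothetical, chosen only so the simulation is stable and easy to follow.

```python
from dataclasses import dataclass

# Hypothetical target key forces, in newtons, for a few dynamic markings.
DYNAMIC_TO_FORCE_N = {"pp": 0.5, "mf": 1.5, "ff": 3.0}

ACTUATOR_TIME_CONSTANT_S = 0.01  # assumed lag of the toy actuator


@dataclass
class PIController:
    kp: float
    ki: float
    integral: float = 0.0

    def update(self, target: float, measured: float, dt: float) -> float:
        """Return an actuator command from the current force error."""
        error = target - measured
        self.integral += error * dt
        return self.kp * error + self.ki * self.integral


def play_note(dynamic: str, steps: int = 1000, dt: float = 0.001) -> float:
    """High level: pick a target force from the musical directive.
    Low level: run the PI loop and return the force reached at the end."""
    target = DYNAMIC_TO_FORCE_N[dynamic]
    controller = PIController(kp=2.0, ki=50.0)
    force = 0.0
    for _ in range(steps):
        command = controller.update(target, force, dt)
        # Toy plant: measured force follows the command with a first-order lag.
        force += (command - force) * dt / ACTUATOR_TIME_CONSTANT_S
    return force


print(round(play_note("mf"), 3))
```

The integral term is what removes the steady-state error here; with a purely proportional controller this toy actuator would settle below the requested force.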

Mastering the Craft: Real-World Breakthroughs in Instrument Robotics

Beyond theoretical research, concrete projects showcase the remarkable capabilities evolving within the field:

Shimon the Marimba Player (Georgia Tech): More than just a virtuoso player, Shimon is a collaborative improviser. Using deep learning models trained on decades of jazz, rock, and classical music, Shimon listens to a human quartet in real time, follows the harmonic and rhythmic structure as it unfolds, and generates complementary, musically coherent improvisations of its own. It doesn't just play back; it converses.

Trifonov-like Piano Robots: Inspired by human virtuosos like Daniil Trifonov, advanced piano robots go beyond technical perfection. Using AI that analyzes human performance nuances (micro-variations in timing, complex pedaling, expressive dynamic shading), these systems aim to replicate not just the notes, but the profound musicality and emotional depth characteristic of the world's greatest pianists. The goal is not replacement, but achieving a level of interpretative expression previously reserved for humans.

The Anthropomorphic Violinist: Building a robot to play the violin is arguably one of the most formidable challenges. The instrument requires incredibly fine motor control of the bow (pressure, speed, contact point, angle) combined with precise finger placement and vibrato. Advanced robotic violinists use sophisticated force control in the bowing arm and dexterous multi-fingered hands capable of nuanced vibrato techniques learned by analyzing masters like Hilary Hahn.

Guitar Hero Meets Real Guitar: Robots like those developed at the University of Tokyo tackle the guitar's complex physical interactions. Advanced manipulation allows for precise fretting, smooth string bending, intricate hammer-ons and pull-offs, and picking techniques ranging from aggressive strumming to delicate fingerpicking. This highlights the engineering challenge of replicating not just single points of contact, but complex interactions across the entire instrument.

Ensemble Leadership: Pushing collaboration further, some research explores robots leading ensembles. An Instruments Robot equipped to follow or set tempo, cue entrances, and dynamically adjust phrasing based on the group's performance acts as a conductor-interpreter, showcasing the potential for robots to become active musical directors.


The Maestro Debate: Artistic Merit and the Future of Performance

The rise of Instruments Robots inevitably sparks profound questions about creativity, artistry, and the role of human musicians:

Authenticity and Expression

Can a machine's interpretation ever be considered truly "authentic" without lived human experience informing its choices? Proponents argue that expression can manifest through complex algorithms mimicking emotional contours; detractors insist that music created without sentience remains mimicry. However, performances like Shimon's improvisations challenge this dichotomy, suggesting a new form of computational co-creativity.

The Future Role of Human Musicians

Rather than replacing humans, Instruments Robots are more likely to become collaborative partners, practice tools, and educators. They might perform repertoire requiring superhuman precision, accompany musicians in rehearsal 24/7, enable composers to hear complex works instantly with impeccable execution, or introduce children to instruments in entirely new ways. Human musicians may increasingly focus on areas robots find elusive: pure creative impulse, deep emotional connection with an audience, and the unpredictable spark of live human interaction.

Technical Excellence vs. Intangible Magic

Robots can achieve staggering technical perfection—flawless pitch, relentless tempo, consistent articulation. Yet, the perceived "soul" or "magic" of a human performance often lies in slight imperfections, hesitations, vulnerabilities, and the shared human context. Does robotic perfection illuminate the unique value of human expressiveness, or will machines eventually close this gap entirely through AI that models and generates convincing emotional narratives?

New Paradigms and Compositions

Instruments Robots don't need to replicate *only* human techniques. They can explore new sonic territories based on their unique physical capabilities: speeds, articulations, or combinations physically impossible for humans. Composers are already beginning to write pieces specifically designed to exploit these unique robotic capabilities, pioneering a genre uniquely suited to the machine musician, expanding the sonic palette of music itself.

Beyond the Stage: The Broader Impact of Instrument Robotics

The influence of Instruments Robots extends far beyond potential concert hall performances:

Revolutionizing Music Education and Pedagogy

Robots offer tireless, infinitely patient practice partners. Imagine a piano robot capable of playing the accompaniment to a concerto at any tempo, synced to a student's learning speed. AI-driven analysis could provide immediate, objective feedback on technique and interpretation, complementing human teachers. Such robots make complex ensemble practice accessible at any time.
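One ingredient of tempo-matched accompaniment is estimating how fast the student is actually playing. The sketch below does this from note onset times alone, using the median gap between onsets so that a single hesitation does not skew the result. The `estimate_tempo_bpm` name and the assumption that each onset falls on a beat are illustrative simplifications; a real score follower would need to align onsets against the written music.

```python
from statistics import median


def estimate_tempo_bpm(onset_times_s, beats_per_onset=1.0):
    """Estimate tempo from a list of note onset times in seconds.

    Assumes (for illustration) that consecutive onsets are spaced
    beats_per_onset beats apart.
    """
    if len(onset_times_s) < 2:
        raise ValueError("need at least two onsets to estimate a tempo")
    intervals = [later - earlier
                 for earlier, later in zip(onset_times_s, onset_times_s[1:])]
    if any(interval <= 0 for interval in intervals):
        raise ValueError("onset times must be strictly increasing")
    return 60.0 * beats_per_onset / median(intervals)


# A student playing quarter notes with one long hesitation in the middle.
student_onsets = [0.0, 0.75, 1.5, 3.0, 3.75, 4.5]
print(estimate_tempo_bpm(student_onsets))
```

The accompanist could then play its own part at the estimated tempo and re-estimate every few beats to stay with the student.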

Preserving and Reproducing Legacy Performances

Through detailed analysis of historic recordings and AI modeling, Instruments Robots could allow us to experience how legendary musicians like Rachmaninoff might have interpreted pieces in a modern acoustic space, preserving nuances that sheet music alone cannot capture. This offers a powerful tool for musicological research and auditory heritage preservation.

Music Therapy and Accessibility

For individuals with physical limitations, robotic interfaces could be tailored to enable musical expression through adaptive controllers or simplified gestures that trigger complex performances. Robots could provide personalized, interactive musical therapy sessions calibrated for specific therapeutic goals.

New Frontiers for Composers

Composers gain access to a "performer" capable of executing unimaginable feats of speed, complexity, precision, and polyphony instantly. This liberates the compositional imagination, enabling the creation of works that were previously unplayable, pushing the boundaries of written music.

Frequently Asked Questions about Instruments Robots

Can Instruments Robots truly play with emotion?

This is the central debate. While robots excel at analyzing patterns and replicating expressive techniques learned from vast musical datasets, the question of whether their output carries the same emotional authenticity as human performance remains deeply philosophical. The AI interprets data points associated with "sadness" or "joy" in music, and its algorithms adjust dynamics and articulation to match. What robots currently deliver is a sophisticated simulation of emotional expression, derived computationally. However, the *audience's* perception of emotional resonance is undeniably real during compelling robotic performances, blurring the lines of definition.

Will Instruments Robots replace human musicians?

Widespread replacement of human musicians in traditional ensembles seems unlikely in the near to mid-term for artistic, social, and economic reasons. However, Instruments Robots will disrupt the industry in other significant ways: they may handle repetitive performances (e.g., theme parks, background music), become invaluable practice tools and accompanists, perform complex contemporary pieces demanding inhuman precision, open new compositional possibilities, and make ensemble playing accessible in underserved areas. Human musicians will likely adapt, focusing on uniquely human aspects like stage presence, spontaneity, and deeper emotional storytelling, while potentially collaborating *with* robots as partners in novel performances.

What are the biggest technical challenges facing Instruments Robots?

Several hurdles persist. Dexterity remains a major one; replicating the subtle finesse of a pianist's touch or the complex coordinated motion of a violinist's bow hand requires immense engineering and sensor feedback sophistication. Achieving true real-time, *musically intelligent* interaction and improvisation that feels deeply collaborative requires advancements in multi-modal AI (processing audio, gesture, and potentially emotional cues simultaneously). Modeling and generating genuinely novel musical ideas that move beyond learned patterns to something uniquely "robotic" is an ongoing AI research challenge. Finally, cost remains prohibitive, limiting access primarily to research labs and specialized artists.

How do Instruments Robots learn to play a piece?

Advanced systems use multiple methods. They can be directly programmed with precise motor instructions derived from a score, typically encoded as MIDI data. More sophisticated approaches involve machine learning: robots are fed audio recordings and corresponding sheet music, learning to map sound to playing technique. Some can even "listen" to an audio piece and generate their own interpretation through AI music transcription and subsequent analysis to determine likely playing methods, a process analogous to how human musicians learn by ear, albeit computationally derived. Reinforcement learning allows them to refine interpretations based on sound feedback.
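To make the first of these approaches concrete, here is a minimal sketch of turning MIDI-style note data into key-strike instructions for a piano robot. The note-number range for an 88-key piano (21 for A0 through 108 for C8) and the 1 to 127 velocity range follow the MIDI convention; the `KeyStrike` structure, the normalised `strike_speed`, and the linear velocity scaling are hypothetical stand-ins for whatever a real actuator interface would need.

```python
from dataclasses import dataclass

LOWEST_PIANO_NOTE = 21    # MIDI note number of A0, the lowest of 88 keys
HIGHEST_PIANO_NOTE = 108  # MIDI note number of C8, the highest key


@dataclass(frozen=True)
class KeyStrike:
    key_index: int       # 0 = lowest key, 87 = highest key
    strike_speed: float  # normalised 0..1 actuator speed (hypothetical unit)
    start_s: float       # when to strike, in seconds from the start
    hold_s: float        # how long to hold the key down, in seconds


def midi_to_strikes(notes, tempo_bpm=120.0):
    """Convert (midi_note, velocity, start_beat, length_beats) tuples
    into time-ordered KeyStrike instructions."""
    seconds_per_beat = 60.0 / tempo_bpm
    strikes = []
    for midi_note, velocity, start_beat, length_beats in notes:
        if not LOWEST_PIANO_NOTE <= midi_note <= HIGHEST_PIANO_NOTE:
            raise ValueError(f"note {midi_note} is outside the 88-key range")
        if not 1 <= velocity <= 127:
            raise ValueError(f"velocity {velocity} is outside 1..127")
        strikes.append(KeyStrike(
            key_index=midi_note - LOWEST_PIANO_NOTE,
            strike_speed=velocity / 127,  # assumed linear mapping
            start_s=start_beat * seconds_per_beat,
            hold_s=length_beats * seconds_per_beat,
        ))
    return sorted(strikes, key=lambda strike: strike.start_s)


# Middle C (MIDI 60) followed by the E above it, one beat each at 120 bpm.
for strike in midi_to_strikes([(64, 80, 1, 1), (60, 127, 0, 1)]):
    print(strike)
```

Everything expressive (rubato, pedaling, voicing) is absent from this direct mapping, which is why the learning-based approaches described above matter.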

Who owns the copyright to music created by an Instruments Robot?

Copyright law currently centers on human authorship. The legal landscape surrounding purely AI-generated creative works is still evolving rapidly and varies significantly by jurisdiction. Depending on the jurisdiction and the facts, copyright might rest with the programmer(s) who designed the AI, the operator who initiated the specific creative process, or the owners of the robot, or the work might be considered non-copyrightable in the absence of direct human creative control. Clear legal precedents are still being established for this novel category of creation, posing significant challenges for artists, composers, and tech companies.

The Encore: Embracing a Harmonious Future with Robotic Virtuosos

The advent of sophisticated Instruments Robots marks not an end to human musical expression, but the opening of a fascinating new movement. These machines compel us to re-examine the essence of musical artistry – is it solely bound to biology and consciousness, or can it emerge from the interplay of sophisticated algorithms and precision engineering capable of manipulating traditional instruments? Instead of viewing them as competitors, the most transformative path lies in embracing Instruments Robots as collaborators and innovators.

They offer unparalleled technical mastery, tireless performance capability, and the potential for radically new sonic explorations born from their unique physical interfaces with instruments. They democratize complex ensemble practice and offer powerful pedagogical tools. While the debate surrounding authentic emotional expression will persist, the undeniable fact is that these robots *are* capable of creating compelling and sometimes profoundly beautiful musical experiences. They invite us to expand our understanding of what music can be and who (or what) can create it. As Instruments Robots continue their evolution from mimicking fingers to generating interpretation, they stand poised as AI-powered prodigies, ready to co-write the next chapter of musical history.