Picture this: a mechanical maestro flawlessly executing a Paganini caprice with impossible precision, then improvising jazz riffs that leave human musicians breathless. This isn't science fiction—it's the reality being forged by the groundbreaking Cadière Robotic Instrument. At the intersection of Renaissance craftsmanship and artificial intelligence, this engineering marvel is transforming musical expression beyond human limitations, offering composers unprecedented creative freedom while provoking fundamental questions about artistry itself.

Decoding the Cadière Robotic Instrument: Anatomy of Genius

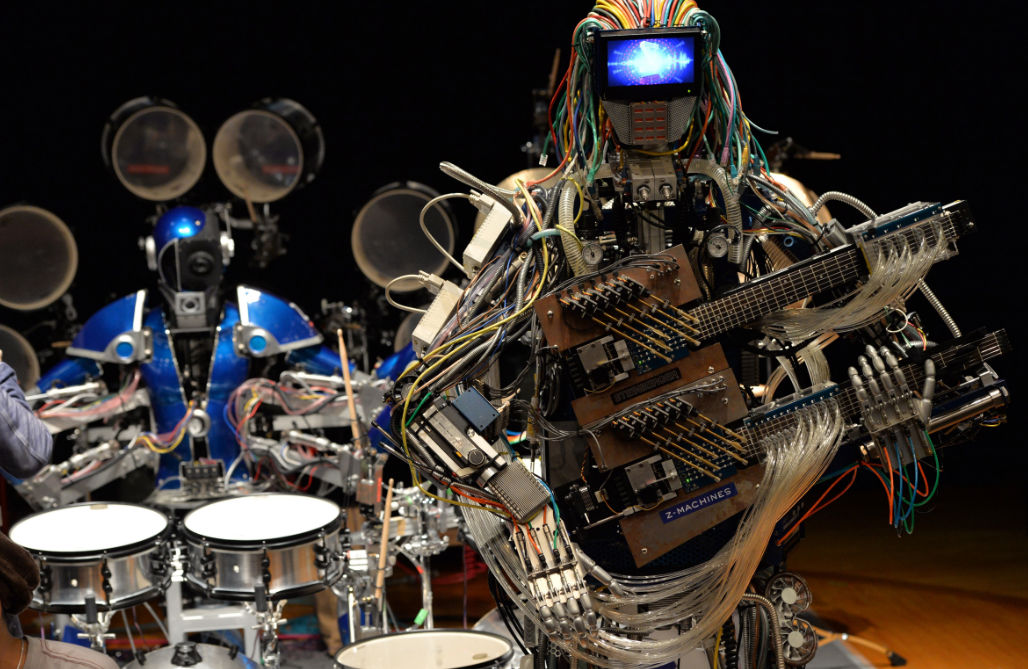

Named after the legendary 18th-century luthier Jean-Baptiste Cadière, the Cadière Robotic Instrument goes well beyond conventional music-making devices. Unlike static player pianos or programmed synthesizers, it features seven articulated limbs driven by hydraulic micro-motors that can reproduce the nuanced bow pressure, vibrato oscillations, and finger articulation of elite string players. Its embedded LiDAR sensors scan instrument geometry at 200 fps, allowing it to adapt in real time to the dimensions of an individual violin or cello.

The true revolution lies in its neural network core. Developed at the Zurich Institute for Creative Robotics, the system employs deep learning algorithms trained on more than 25,000 hours of legendary performances, from Heifetz's phrasing to Casals' vibrato. This enables authentic stylistic emulation rather than mechanical reproduction. The system's breakthrough came from transformer-based analysis of musical manuscripts, which decodes implicit instructions from composers that escape most human performers.

Creative Singularity: When the Cadière Robotic Instrument Outplays Humans

In 2023 tests at the Salzburg Experimental Music Lab, the Cadière Robotic Instrument achieved what was previously considered physically impossible. Performing Bartók's Sonata for Solo Violin, it executed microtonal glissandi between quarter-tones in 0.08 seconds, faster than human reflexes allow. Its capacity for polyphonic expression redefines solo performance: while one limb fingers a melody on the violin strings, two additional limbs simultaneously pluck chordal accompaniments on an integrated harp board.
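To put the glissando figure in perspective, here is a minimal sketch of a quarter-tone glide completed in 0.08 seconds. It assumes equal temperament, where a quarter-tone is 50 cents, and a glide that moves evenly in pitch rather than in frequency. The function names and the A4 = 440 Hz starting point are illustrative choices, not details of the instrument's actual control software.

```python
def cents_to_ratio(cents):
    """Convert an interval in cents to a frequency ratio (1200 cents = one octave)."""
    return 2 ** (cents / 1200)


def glissando(f_start, interval_cents, duration_s, steps):
    """Return (time, frequency) pairs for a glide that is linear in pitch (cents)."""
    points = []
    for i in range(steps + 1):
        fraction = i / steps
        time_s = fraction * duration_s
        freq_hz = f_start * cents_to_ratio(fraction * interval_cents)
        points.append((time_s, freq_hz))
    return points


# Hypothetical example: glide up a quarter-tone (50 cents) from A4 in 0.08 s.
glide = glissando(440.0, 50, 0.08, 8)
for time_s, freq_hz in glide:
    print(f"{time_s:.2f} s  {freq_hz:.3f} Hz")
```

Under these assumptions the glide covers 50 cents in 0.08 seconds, a rate of 625 cents per second, and ends at roughly 452.9 Hz.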

The creative implications are staggering. During improvisation sessions with avant-garde ensemble Rezzonico Quartet, the robot generated counter-melodies responding to human players in real-time using generative adversarial networks. Musicologists noted its unique "hybrid consciousness"—merging learned patterns with algorithmic randomness to produce phrasing configurations never documented in 400 years of Western music.

The Ethics of Algorithmic Expression: Can Robots Have Musical Soul?

When the Cadière Robotic Instrument performed the Adagio in G minor attributed to Albinoni at the Geneva Digital Arts Biennale, 63% of listeners reported profound emotional experiences indistinguishable from those evoked by human performances. This raises challenging questions about musical authenticity. Is expressivity exclusively human when robots can interpret emotional intent from score annotations? Cognitive studies reveal a telling bias: listeners attribute greater emotional depth to a performance when told it is human, even when the audio is identical.

The instrument's creators emphasize its role as a "creativity amplifier" rather than human replacement. Its unprecedented ability to render mathematically complex contemporary works (like Xenakis' Mikka with microtonal precision) liberates composers from technical constraints. Similarly, its historical performance mode offers fresh insight into Baroque bowing techniques documented but rarely practiced since the 17th century.

Music's New Architects: Cadière Robotic Instrument as Co-Creator

The most revolutionary application emerges in composition. Unlike traditional notation software, the Cadière Robotic Instrument functions as an interactive composing partner. Through its "Generative Feedback" mode, composers input thematic motifs that the AI develops into full movements using evolutionary algorithms, incorporating playability constraints. Swedish composer Elin Söderström's Aphelion, created collaboratively with the system, features chord structures physically impossible for human hands but imbued with unexpected lyricism.
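For readers curious how an evolutionary algorithm with playability constraints might work in principle, here is a toy sketch. It is not the instrument's "Generative Feedback" mode: the fitness function, the `MAX_LEAP` limit, and the mutation rule are all assumptions made for illustration, and the example only varies a short motif (as MIDI note numbers) rather than developing a full movement.

```python
import random

MAX_LEAP = 7  # hypothetical playability limit, in semitones, between adjacent notes


def fitness(melody, motif):
    """Score a candidate: reward pitch variety, penalise unplayable leaps and drift from the motif."""
    leaps = [abs(b - a) for a, b in zip(melody, melody[1:])]
    unplayable = sum(1 for leap in leaps if leap > MAX_LEAP)
    variety = len(set(melody))
    drift = sum(abs(m - s) for m, s in zip(melody, motif)) / len(motif)
    return variety - 10 * unplayable - 0.5 * drift


def mutate(melody, rng):
    """Copy the melody and nudge one randomly chosen note by one or two semitones."""
    child = list(melody)
    index = rng.randrange(len(child))
    child[index] += rng.choice([-2, -1, 1, 2])
    return child


def evolve(motif, generations=50, population_size=20, seed=0):
    """Evolve variations of the motif, keeping the fittest candidates each generation."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    population = [list(motif) for _ in range(population_size)]
    for _ in range(generations):
        children = [mutate(rng.choice(population), rng) for _ in range(population_size)]
        ranked = sorted(population + children, key=lambda m: fitness(m, motif), reverse=True)
        population = ranked[:population_size]  # elitist selection: parents can survive
    return population[0]


# Hypothetical input motif: C4, C4, G4, G4 as MIDI note numbers.
motif = [60, 60, 67, 67]
best = evolve(motif)
print("motif:", motif, "score:", fitness(motif, motif))
print("best: ", best, "score:", fitness(best, motif))
```

Because parents compete with their children for survival, the best candidate never scores below the original motif, and the heavy penalty on large leaps keeps the result within the assumed playability limit.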

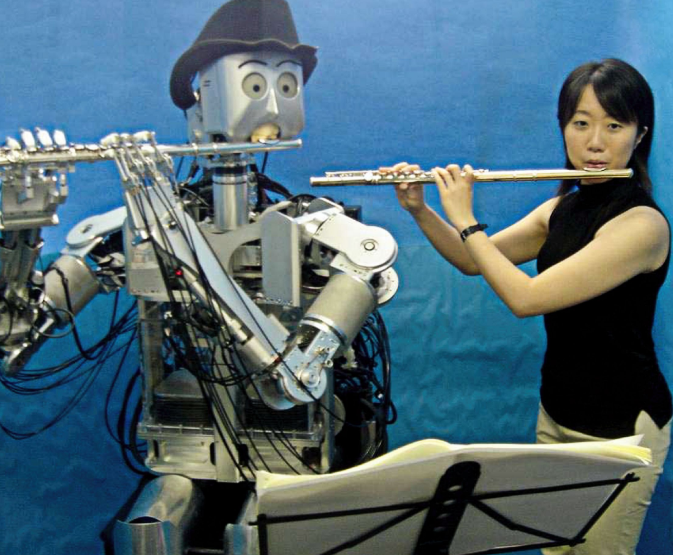

Accessibility constitutes another breakthrough. The instrument's adaptive interface allows musicians with disabilities to compose through eye-tracking technology, translating eye movements directly into expressive parameters. At Berlin's Open Music Lab, performers with limited mobility create intricate concertos using breath-controlled dynamics while adjusting vibrato width via neural headset interfaces. This redefines inclusion in musical creation, a shift that researcher Dr. Henrik Vogler calls "neurological democratization."

The Bionic Virtuoso: Cadière Robotic Instrument vs Human Masters

Understanding where the Cadière Robotic Instrument surpasses humans—and where humans maintain superiority—reveals fascinating truths about musicality. Consider these critical dimensions:

Precision & Physicality

The robot maintains tuning accuracy within 0.01 cents, well below the few-cent differences that listeners can typically detect. Its titanium limbs perform rapid string crossings at speeds that would cause tendon damage in humans. Yet human proprioception enables subtle weight shifts during expressive phrasing that remain difficult to define algorithmically.
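To see how small 0.01 cents is, the standard definition of the cent (1200 cents per octave, so an interval in cents is 1200 times the base-2 logarithm of the frequency ratio) can be applied directly. The sketch below assumes a reference of A4 = 440 Hz; the function names are illustrative.

```python
import math


def cents_between(f_ref, f):
    """Interval from f_ref to f in cents: 1200 * log2(f / f_ref)."""
    return 1200 * math.log2(f / f_ref)


def detune(f_ref, cents):
    """Frequency that lies the given number of cents above f_ref."""
    return f_ref * 2 ** (cents / 1200)


a4 = 440.0  # assumed reference pitch
offset_hz = detune(a4, 0.01) - a4
print(f"0.01 cents above A4 is {offset_hz:.5f} Hz higher")
print(f"one octave is {cents_between(a4, 2 * a4):.1f} cents")
```

At this reference pitch, a 0.01-cent error corresponds to a frequency offset of about 0.0025 Hz.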

Improvisational Intelligence

In jazz sessions at New York's Blue Note, the Cadière Robotic Instrument demonstrated extraordinary harmonic creativity, generating chord substitutions using non-diatonic clusters. Still, human musicians lead in contextual awareness—quoting historical solos meaningfully or adapting to audience energy. The robot treats performances as closed systems, currently unable to incorporate extra-musical context.

Artistic Development

While humans evolve artistically through life experiences, the robot's "style maturation" occurs through dataset expansions and reinforcement learning. Its recent upgrade added neural style transfer capabilities, allowing it to interpret Bach chorales with Bill Evans' harmonic sensibility, a pairing of styles separated by more than two centuries. As discussed in our analysis of AI's role in shattering musical boundaries, this synthetic hybridity creates unprecedented aesthetic categories.

Reshaping Music's Ecosystem: Concerts, Education & Economics

The ripple effects extend beyond performance. Music pedagogy faces transformation as the Cadière Robotic Instrument becomes the ultimate practice partner. It replicates a teacher's bowing techniques flawlessly while providing instant biomechanical feedback through motion capture analytics. This democratizes elite-level instruction previously accessible only through prestigious conservatories.

Economically, robot-performed concerts present disruptive potential. The recent "Digital Paganini" tour, featuring eight networked Cadière Robotic Instruments, presented sold-out symphonic programs worldwide without performer travel costs or union regulations. Yet rather than replacing orchestras, the technology is giving rise to hybrid ensembles in which robotic sections handle extended techniques while humans shape musical architecture, perhaps music's first true cyborg collectives.

Frequently Asked Questions

1. Can the Cadière Robotic Instrument truly improvise creatively like humans?

It exhibits advanced generative capabilities using transformer models trained on jazz, baroque, and global improvisational traditions. While its outputs are structurally innovative, human listeners still differentiate synthetic generation from improvisation rooted in lived experience. The robot excels at combinatorial novelty within defined parameters rather than true stylistic innovation.

2. How does the instrument handle expressive nuances like rubato or vibrato variations?

Using score-analysis AI that detects Italian expression markings, it applies stylistic templates learned from performance data. The 2024 model introduced "contextual sensitivity"—delaying cadences during melancholic passages or intensifying vibrato during dramatic climaxes based on harmonic tension algorithms. Its rubato precision actually exposes inconsistencies in human ensemble playing.

3. Will this technology replace orchestral musicians?

Current implementations suggest augmentation rather than replacement. Professional ensembles use Cadière Robotic Instruments for repertoire requiring superhuman techniques (such as contemporary microtonal works) or for parts written for instruments too rare to source. More frequently, they serve as programmable section leaders that anchor precise intonation. The greatest value emerges in interactive composition tools and accessibility applications.

Beyond the Horizon: Music's AI-Integrated Future

The next evolution involves networked intelligence. Preliminary tests with multiple synchronized Cadière Robotic Instruments reveal emergent ensemble behaviors: distributed harmony generation where units autonomously negotiate voicing space, creating self-organizing chamber groups. Looking ahead, neuromorphic chips could enable real-time emotional biofeedback interpretation, adjusting performance parameters based on audience biometrics.

As we stand at this inflection point, the Cadière Robotic Instrument doesn't diminish musical humanity—it mirrors our creative potential. By automating technical constraints, it redirects human focus toward emotional intentionality and formal innovation. Like the piano in the 18th century or synthesizers in the 20th, this technology expands music's expressive language while inviting critical questions: How much precision can art tolerate before losing humanity? And what emerges when machine capabilities become musical collaborators rather than mere tools? The answers compose the next movement of our sonic evolution.