The Cost-Efficient AI Model Deployment Revolution

While Silicon Valley burns billions on GPU clusters, DeepSeek-R1's cost-slashing approach, distilled below into a 5-step playbook, is putting serious AI within reach of startups:

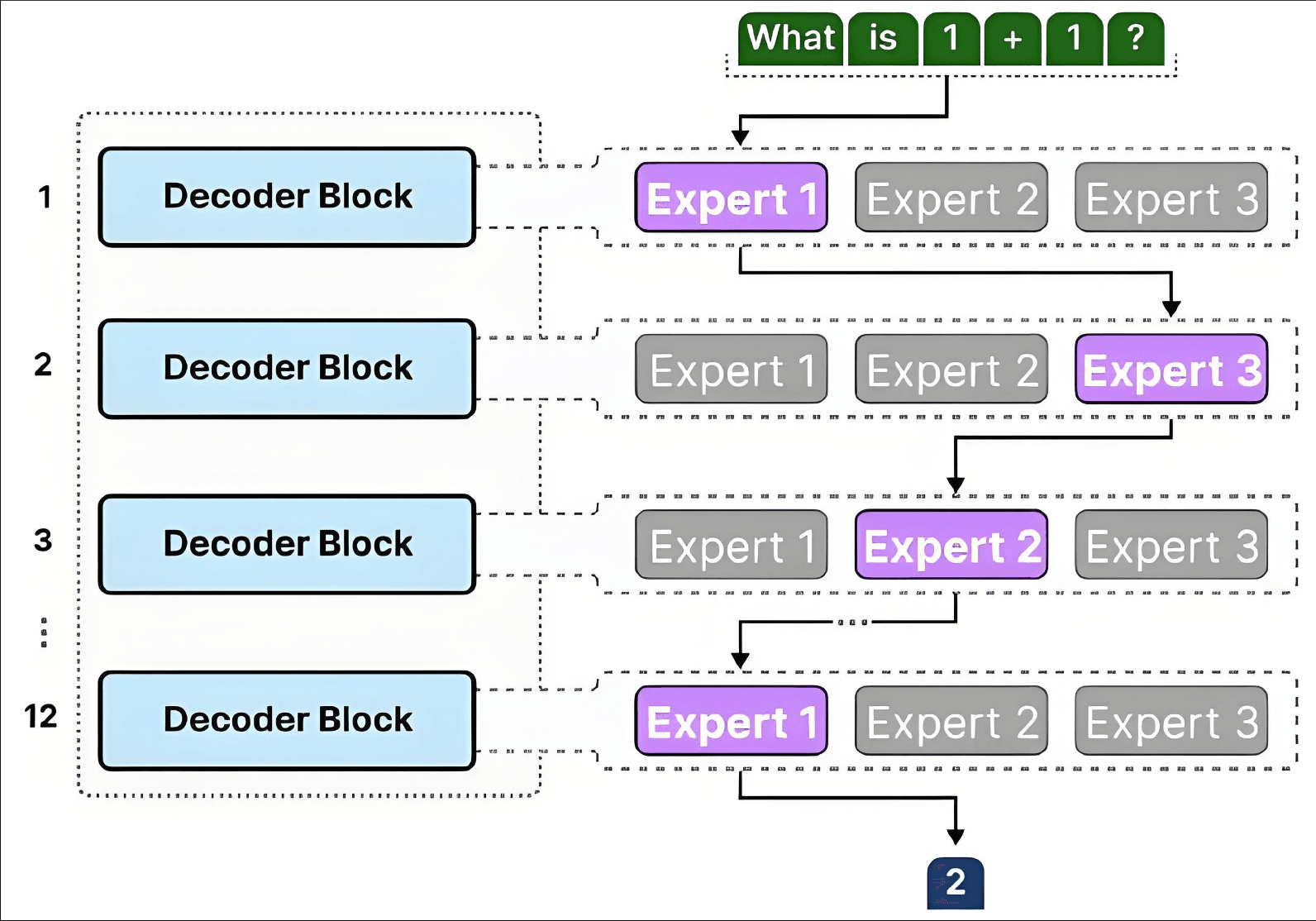

1. MoE Architecture: The 90% Cost-Cut Blueprint

DeepSeek-R1's secret weapon? Its Mixture-of-Experts (MoE) design, inherited from DeepSeek-V3, activates only 37B of its 671B parameters for each token. Imagine having 256 specialized consultants on every floor but only paying the 8 you need for each question!
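To make the "pay only the consultants you need" idea concrete, here is a minimal top-k routing sketch. It assumes 256 routed experts with 8 active per token (the figures in the DeepSeek-V3 technical report) and uses a plain softmax over the selected scores. DeepSeek's actual router differs in details such as how scores are computed and normalized, so treat the hypothetical `top_k_route` helper as an illustration, not DeepSeek's code.

```python
import math
import random


def top_k_route(gate_logits, k):
    """Pick the k highest-scoring experts and weight them with a softmax.

    Hypothetical helper: only the selected experts run, so compute scales
    with k rather than with the total number of experts.
    """
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    m = max(gate_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(gate_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


NUM_EXPERTS = 256  # routed experts per MoE layer (assumed, per DeepSeek-V3 report)
TOP_K = 8          # routed experts activated per token (same assumption)

rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in gate scores for one token
routes = top_k_route(logits, TOP_K)
print(f"{len(routes)} of {NUM_EXPERTS} experts active for this token")

# Whole-model view: 37B active out of 671B total parameters
print(f"{37 / 671:.1%} of parameters active per token")
```

The last line prints 5.5%, which is why per-token compute sits far below that of a dense 671B model.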

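| Metric | DeepSeek-R1 | OpenAI o1 |
|---|---|---|
| Input Cost/Million Tokens (API list price, uncached) | $0.55 | $15 |
| Output Cost/Million Tokens (API list price) | $2.19 | $60 |
| Training Budget | $5.58M (final training run of DeepSeek-V3, R1's base model, only) | $100M+ (unofficial estimate) |
| Daily Profit Margin | 545% (DeepSeek's theoretical one-day figure) | Not disclosed |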
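Per-million prices turn into a monthly bill with simple arithmetic. The sketch below assumes a hypothetical workload of 200M input and 50M output tokens and uses early-2025 list prices as assumptions (prices change, so plug in current figures). Both R1 and o1 bill their chain-of-thought as output tokens, so output volume can exceed what the visible answers suggest.

```python
def monthly_cost(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Return the USD cost of a token volume, given prices quoted per 1M tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


# Hypothetical monthly workload
INPUT_TOKENS = 200_000_000
OUTPUT_TOKENS = 50_000_000  # includes chain-of-thought tokens, which are billed as output

# Assumed list prices in USD per 1M tokens (uncached input, output); verify before use
r1 = monthly_cost(INPUT_TOKENS, OUTPUT_TOKENS, 0.55, 2.19)
o1 = monthly_cost(INPUT_TOKENS, OUTPUT_TOKENS, 15.00, 60.00)
print(f"R1: ${r1:,.2f}  o1: ${o1:,.2f}  ratio: {o1 / r1:.1f}x")
```

With these inputs the o1 bill comes out roughly 27x higher; your own ratio depends on your input/output mix and cache hit rate.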

2. Your 5-Step Playbook for Ultra-Low Cost AI Deployment

Ready to slash your AI budget? Here's the battle-tested roadmap:

Step 1: Model Selection Alchemy

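Choose among R1's distilled variants (1.5B to 70B parameters, built on Qwen and Llama base models) based on your use case. Pro tip: for customer service, start with a small variant such as the 1.5B or 7B model, measure how well it handles your real queries, and move up in size only where it falls short; smaller models run at a fraction of the GPU cost.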
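One way to apply the "start small" advice is to score each candidate on a held-out sample of your own queries and keep the smallest one that clears your quality bar. The model names below are the published R1 distills; the accuracy numbers and the `pick_smallest` helper are hypothetical placeholders, not benchmark results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    params_b: float       # parameter count, in billions
    eval_accuracy: float  # share of YOUR held-out queries answered acceptably (0..1)


def pick_smallest(candidates, target):
    """Return the smallest candidate whose accuracy meets the target, or None."""
    passing = [c for c in candidates if c.eval_accuracy >= target]
    return min(passing, key=lambda c: c.params_b) if passing else None


# Placeholder accuracies for illustration only; replace with your own eval results
candidates = [
    Candidate("DeepSeek-R1-Distill-Qwen-1.5B", 1.5, 0.71),
    Candidate("DeepSeek-R1-Distill-Qwen-7B", 7, 0.84),
    Candidate("DeepSeek-R1-Distill-Qwen-14B", 14, 0.90),
    Candidate("DeepSeek-R1-Distill-Qwen-32B", 32, 0.93),
]
choice = pick_smallest(candidates, target=0.85)
print(choice.name if choice else "no candidate meets the target")
```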

Step 2: Data Diet Engineering

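Collect a small, curated set of cold-start examples (start with 50 or more, each written with step-by-step reasoning) and fine-tune on them to teach R1 your industry lingo. For reference, DeepSeek's own cold-start stage used thousands of long Chain-of-Thought samples before reinforcement learning; either way, adapting a pretrained reasoning model needs far less data than training one from scratch.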
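A cold-start set is just a file of worked examples. The sketch below packs question, reasoning, and answer into chat-style JSONL. The `{"messages": [...]}` layout and the `<think>` wrapper (R1 uses `<think>` tags to delimit its reasoning in outputs) are assumptions, as is the `to_jsonl_record` helper; match whatever format your fine-tuning tool expects.

```python
import json


def to_jsonl_record(question, reasoning, answer):
    """Serialize one cold-start example as a chat-style JSON line (assumed schema)."""
    return json.dumps(
        {
            "messages": [
                {"role": "user", "content": question},
                {"role": "assistant", "content": f"<think>\n{reasoning}\n</think>\n{answer}"},
            ]
        },
        ensure_ascii=False,
    )


samples = [  # hypothetical domain examples; a real set would hold many more
    (
        "What does MOQ mean on a supplier quote?",
        "MOQ is procurement shorthand used on quotes. It stands for minimum order quantity.",
        "MOQ is the minimum order quantity the supplier will accept.",
    ),
    (
        "Is net 30 the same as due on receipt?",
        "Net 30 gives the buyer 30 days after invoicing; due on receipt means immediately.",
        "No. Net 30 allows 30 days to pay, while due on receipt means pay immediately.",
    ),
]

jsonl = "\n".join(to_jsonl_record(*s) for s in samples) + "\n"
print(jsonl)
```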

Step 3: Dynamic Load Balancing

Borrow the three load balancers DeepSeek describes for its own inference service (Prefill, Decode, and Expert-Parallel) to spread work evenly across GPUs. Night shift hack: during off-peak hours, shift idle inference nodes to training or research jobs, as DeepSeek reports doing.
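The sketch below is a generic stand-in, not DeepSeek's implementation: a greedy heuristic that places the largest remaining request on the least-loaded GPU, the kind of policy a prefill balancer could use to even out prompt lengths. The `assign_least_loaded` helper and the token counts are hypothetical.

```python
import heapq


def assign_least_loaded(costs, num_workers):
    """Greedy longest-job-first: each job goes to the currently least-loaded worker.

    costs: per-request work estimates (e.g. prompt tokens). Returns the job->worker
    mapping and the final load on each worker.
    """
    heap = [(0, w) for w in range(num_workers)]  # (current load, worker id); already a valid heap
    loads = [0] * num_workers
    assignment = {}
    for job, cost in sorted(enumerate(costs), key=lambda jc: jc[1], reverse=True):
        load, worker = heapq.heappop(heap)  # least-loaded worker right now
        assignment[job] = worker
        loads[worker] = load + cost
        heapq.heappush(heap, (loads[worker], worker))
    return assignment, loads


# Hypothetical prompt lengths (tokens) waiting for prefill
costs = [900, 120, 450, 300, 800, 200, 650, 50]
assignment, loads = assign_least_loaded(costs, num_workers=3)
mean_load = sum(loads) / len(loads)
print(loads, f"max/mean load = {max(loads) / mean_load:.2f}")
```

Here the three workers end up at 1220, 1150, and 1100 tokens, so the busiest one sits about 5% above the mean; a max/mean ratio near 1.0 is what "balanced" means in practice.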

Step 4: Profit-Boosting API Wizardry

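MoE experts are picked per token by the model's learned router, so your application cannot call a specific expert such as "MoE-Expert#23" for discount psychology. Route at the application layer instead: IF (user_query contains 'price comparison') THEN send a pricing-focused instruction ELSE default to a product-specs instruction.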
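Here is an application-layer version of the Step 4 routing rule: it picks an instruction block by keyword and prepends it to the user message, leaving the model's internal expert routing untouched. Instructions go in the user turn because DeepSeek's usage recommendations for R1 advise against a system prompt. The `build_messages` helper and both instruction strings are hypothetical; the output is a plain chat-message list of the kind OpenAI-compatible APIs accept.

```python
PRICING_INSTRUCTION = "Compare prices clearly and explain any discounts or bundle savings."
SPECS_INSTRUCTION = "Answer product specification questions precisely and concisely."


def build_messages(user_query):
    """Route by keyword at the application layer; the model's MoE router is untouched."""
    wants_pricing = "price comparison" in user_query.lower()
    instruction = PRICING_INSTRUCTION if wants_pricing else SPECS_INSTRUCTION
    # R1 guidance: keep instructions in the user turn instead of a system prompt
    return [{"role": "user", "content": f"{instruction}\n\n{user_query}"}]


print(build_messages("Can you do a price comparison for these two laptops?"))
print(build_messages("How much RAM does the X200 have?"))
```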

Step 5: Continuous Cost Monitoring

Use a monitoring dashboard (your own, or your API provider's usage console) to track:

- Real-time cost per token across regions
- GPU utilization vs. response time
- Auto-scaling recommendations
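A minimal version of this monitoring can run over your own request logs. The sketch below computes per-region cost and 95th-percentile latency and raises a naive scale-up flag when p95 breaches a latency target. The log fields, the single blended price, the 2,000 ms target, and the `summarize` helper are all hypothetical; GPU utilization would come from your serving stack's own metrics.

```python
from collections import defaultdict
from statistics import quantiles


def summarize(records, blended_price_per_m, p95_slo_ms):
    """Per-region cost and p95 latency, plus a naive scale-up flag.

    records: dicts with hypothetical keys "region", "tokens", "latency_ms".
    """
    tokens = defaultdict(int)
    latencies = defaultdict(list)
    for r in records:
        tokens[r["region"]] += r["tokens"]
        latencies[r["region"]].append(r["latency_ms"])
    report = {}
    for region, lats in latencies.items():
        # 95th percentile; quantiles() needs at least 2 points on older Pythons
        p95 = quantiles(lats, n=20, method="inclusive")[-1] if len(lats) > 1 else lats[0]
        report[region] = {
            "cost_usd": tokens[region] * blended_price_per_m / 1_000_000,
            "p95_ms": p95,
            "scale_up": p95 > p95_slo_ms,  # naive rule: latency target breached
        }
    return report


logs = [  # hypothetical request log
    {"region": "us-east", "tokens": 1_200, "latency_ms": 850},
    {"region": "us-east", "tokens": 800, "latency_ms": 2_400},
    {"region": "eu-west", "tokens": 500, "latency_ms": 600},
]
for region, stats in summarize(logs, blended_price_per_m=2.19, p95_slo_ms=2_000).items():
    print(region, stats)
```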