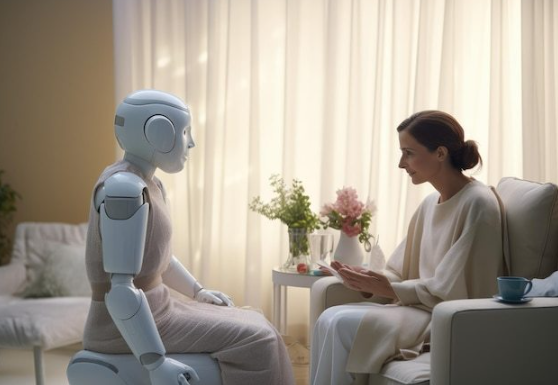

In a groundbreaking move, regulatory bodies have approved the first AI therapist for clinical use, marking a pivotal shift in mental health care. This article explores the technological advancements, clinical validations, and ethical considerations behind this transformative development. From real-world case studies to expert opinions, we unpack how AI is reshaping therapy accessibility and outcomes.

Understanding AI Therapists: Technology and Mechanisms

The term "AI therapist" refers to AI-driven platforms that use natural language processing (NLP) and machine learning to simulate therapeutic interactions. These systems analyze user inputs through sentiment analysis and cognitive-behavioral therapy (CBT) frameworks to provide real-time feedback. For instance, Dartmouth College's Therabot demonstrated a 51% reduction in depression symptoms during an 8-week trial by applying evidence-based CBT techniques in text-based conversations. Unlike rule-based chatbots, modern AI therapists like Woebot use adaptive algorithms to refine responses based on user data, achieving a therapeutic alliance comparable to that of human therapists within days.
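To make the idea of sentiment analysis feeding a CBT-style reply concrete, here is a minimal sketch. It is not how Therabot or Woebot work internally; the word lists, function names, and prompts are all hypothetical, and a real system would rely on trained models rather than keyword counts.

```python
import re

# Hypothetical toy lexicons (assumption): real platforms use trained models.
NEGATIVE = {"hopeless", "worthless", "anxious", "sad", "tired", "failure"}
POSITIVE = {"hopeful", "calm", "proud", "better", "happy"}


def sentiment_score(text: str) -> int:
    """Count positive words minus negative words in the message."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def cbt_prompt(text: str) -> str:
    """Pick a CBT-flavored follow-up question based on the sentiment score."""
    score = sentiment_score(text)
    if score < 0:
        # Negative tone: invite the user to examine the thought (cognitive restructuring).
        return "What evidence supports that thought, and what evidence goes against it?"
    if score > 0:
        # Positive tone: reinforce what is working.
        return "What helped you feel that way, and how could you build on it?"
    # Neutral or unknown tone: ask an open question.
    return "Can you tell me more about what is on your mind?"


if __name__ == "__main__":
    print(cbt_prompt("I feel hopeless and sad"))
```

The point of the sketch is the two-step shape: score the input first, then choose an intervention from that score.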

Key Components of AI Therapy Systems

Emotion Recognition: Voice modulation analysis (e.g., Cogito) detects subtle emotional cues

Personalization Engines: Machine learning adapts interventions to individual behavioral patterns

Ethical Guardrails: Built-in protocols to prevent harmful responses and maintain privacy
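As a rough illustration of the ethical-guardrail component, the sketch below checks a message for crisis language before any generated reply is sent, and masks email addresses before text is logged. The term list, the escalation message, and the function names are assumptions made for this example, not the protocol of any named platform.

```python
import re

# Hypothetical crisis phrases (assumption): a production list would be clinically reviewed.
CRISIS_TERMS = ("suicide", "kill myself", "self-harm", "end my life")

# Hypothetical escalation text (assumption).
ESCALATION_MESSAGE = (
    "It sounds like you may be in crisis. "
    "Please contact a local emergency service or a crisis line right away."
)

# Simple email pattern used only for this sketch; it is not a full validator.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def apply_guardrail(user_message: str, draft_reply: str) -> str:
    """Return the escalation message if crisis language appears, else the draft reply."""
    lowered = user_message.lower()
    if any(term in lowered for term in CRISIS_TERMS):
        return ESCALATION_MESSAGE
    return draft_reply


def redact_for_logging(text: str) -> str:
    """Mask email addresses so they are not written to logs."""
    return EMAIL_PATTERN.sub("[email]", text)
```

Keyword matching like this misses paraphrases and can trigger on harmless text, which is one reason human oversight still matters for severe cases.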

Clinical Validation and Approval Process

The approval of AI therapists follows rigorous validation protocols. The Therapeutic AI Evaluation Framework (TAEF) mandates:

Phase III trials with at least 500 participants from diverse backgrounds

Comparative efficacy analysis against human therapists

Continuous monitoring for algorithmic bias

Platforms like Therapartners have integrated blockchain for audit trails, ensuring transparency in clinical decision-making. Notably, the UK's MHRA approved Woebot Health's AI tool in late 2024 after it demonstrated a 31% reduction in anxiety, equivalent to the effect of weekly CBT sessions.

Global Adoption Landscape

| Region | Approval Status | Key Platforms |
|---|---|---|
| North America | FDA-cleared (2025) | Replika, Wysa |
| EU | CE Mark granted | Ellen AI, Youper |
| Asia-Pacific | Pilot programs | Tencent Xiaoyuan, Baidu ERNIE Bot |

Ethical Debates and Challenges

While AI therapists address accessibility gaps, offering 24/7 support at $10/month compared with $150/hour for human sessions, the ethical landscape remains contentious. Critics warn about automation bias, where users over-trust AI advice despite potential inaccuracies. The WHO's 2024 guidelines emphasize that AI should supplement, not replace, human therapists, particularly for severe conditions like PTSD. Additionally, data privacy concerns persist, with studies showing that 12% of mental health apps leak sensitive information.
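The price gap is easier to judge with a quick calculation. The sketch below uses the two figures quoted above ($10/month and $150/hour); the session frequency and length are assumptions, since the article does not state them.

```python
AI_MONTHLY_USD = 10      # figure quoted in the article
HUMAN_HOURLY_USD = 150   # figure quoted in the article


def human_monthly_cost(sessions_per_month: int, hours_per_session: float = 1.0) -> float:
    """Monthly cost of human therapy for an assumed number of sessions."""
    return HUMAN_HOURLY_USD * sessions_per_month * hours_per_session


def cost_ratio(sessions_per_month: int, hours_per_session: float = 1.0) -> float:
    """How many times more the human option costs per month than the AI subscription."""
    return human_monthly_cost(sessions_per_month, hours_per_session) / AI_MONTHLY_USD


if __name__ == "__main__":
    # Assumption: four one-hour sessions per month (roughly weekly).
    print(human_monthly_cost(4), cost_ratio(4))
```

Under that assumption of four one-hour sessions a month, human therapy comes to $600/month, or 60 times the AI subscription price; a different session schedule changes the ratio proportionally.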

Case Study: AI-Psychedelic Therapy Trials

Atai Life Sciences' Phase II trial combining AI therapists with psychedelics achieved 68% symptom remission in treatment-resistant depression. The AI system monitors real-time EEG data to adjust psychedelic dosages, a process requiring 140,000 hours of training on neuroimaging datasets. This innovative approach exemplifies AI's potential in complex therapeutic interventions.

Key Takeaways

65% of Gen Z prefers AI therapy for anonymity

72% reduction in wait times for initial consultations

40% cost savings for healthcare providers

End-to-end encryption mandates in 2025 regulations

Hybrid human-AI models show 89% patient satisfaction