Imagine a pianist flawlessly executing a complex concerto for hours without fatigue, or a guitarist performing inhumanly rapid solos with perfect intonation. This isn't science fiction; it's the reality ushered in by Musical Instrument Robots. These remarkable machines, blending precision mechanics, sophisticated robotics, and, increasingly, artificial intelligence, are far more than mere novelty acts. They represent a seismic shift in how music is composed, performed, practiced, and even learned, blurring the lines between technology and artistry. Forget simple player pianos; modern Musical Instrument Robots are capable of nuanced expression, collaborative creation, and pioneering entirely new sonic landscapes, fundamentally challenging our definition of a musician.

Beyond the Player Piano: What Exactly is a Musical Instrument Robot?

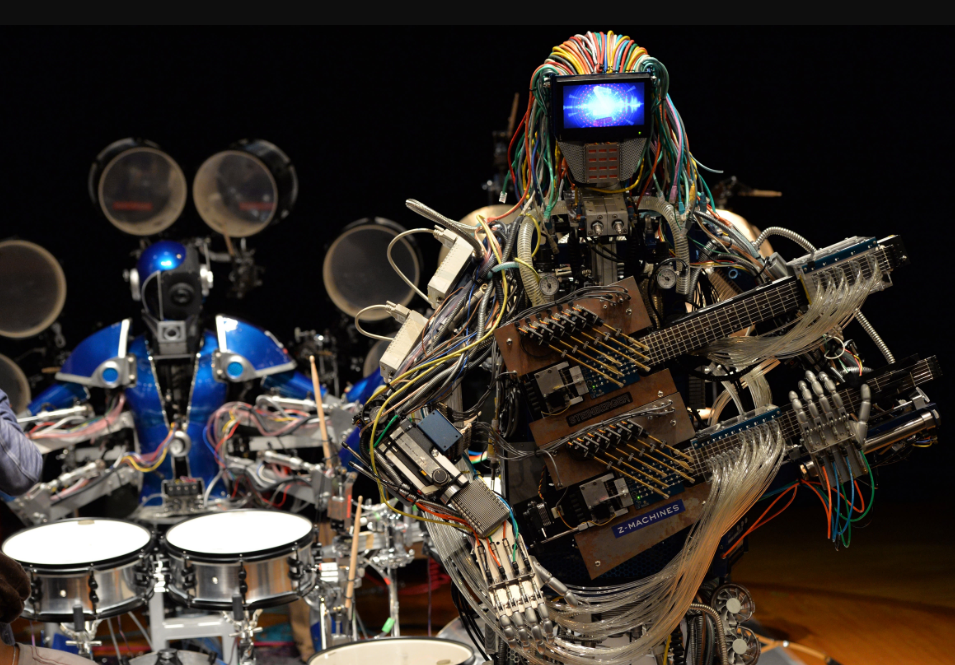

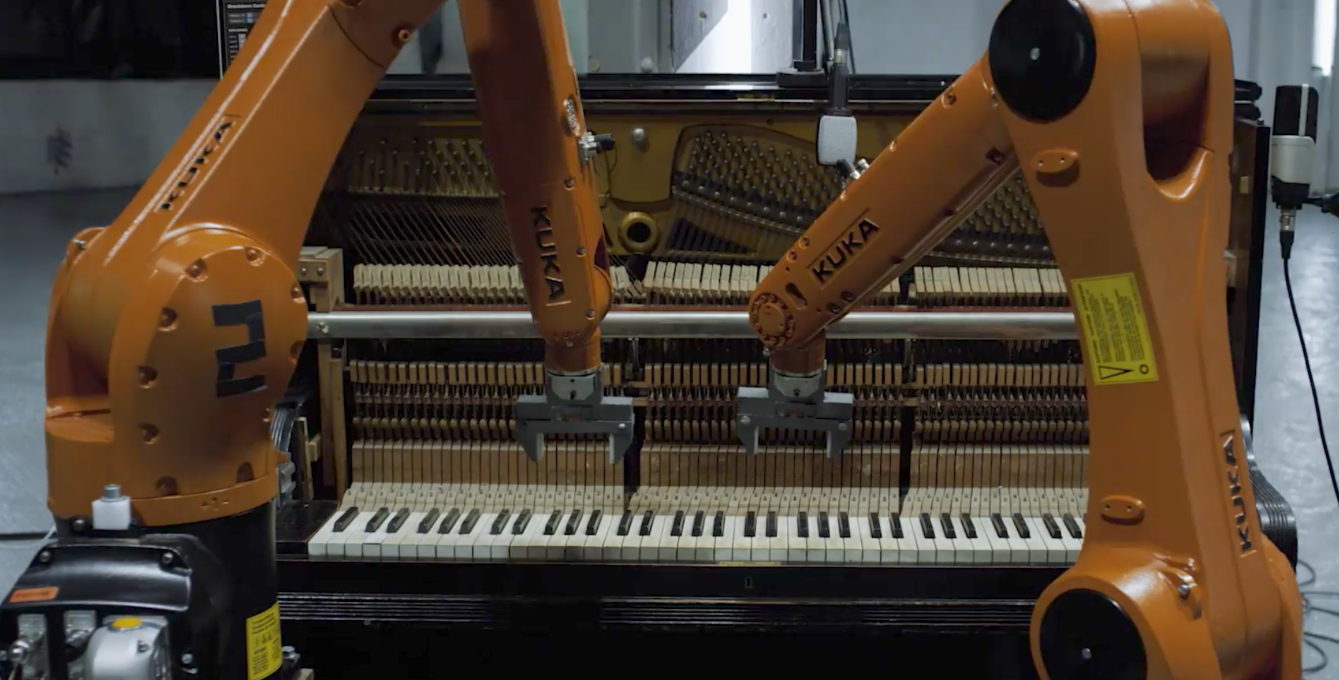

A Musical Instrument Robot is a robotic system engineered specifically to interact with a traditional acoustic or electronic musical instrument to produce sound. Unlike digital synthesizers or software that generate sound electronically, these robots physically manipulate the instrument – striking piano keys, plucking guitar strings, hitting drum surfaces, or bowing violin strings. This physical interaction preserves the authentic acoustic properties and rich tonal complexities inherent in the original instrument. The core components include actuators (motors, solenoids) for movement, sensors for feedback (like force or position), a sophisticated control system (often involving AI), and the crucial mechanical interface that allows the robot to effectively "play" the specific instrument.

The Engine Room: Core Technologies Powering Musical Instrument Robot Innovation

Several cutting-edge technologies converge to bring these robotic musicians to life:

Precision Mechatronics & Actuation

Miniaturized, high-speed, high-torque actuators provide the physical force needed. Solenoids create rapid, percussive strikes (drums, pianos), while servo or stepper motors enable smooth, continuous motion for bowing, sliding, or precise plucking. The design of end-effectors (the 'hands' or 'mallets' of the robot) is critical for sound quality and instrument safety.
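To make the solenoid case concrete, here is a minimal sketch of how a controller might turn a note's loudness into an actuator command. The function name `velocity_to_pulse_ms`, the linear mapping, and the 2 to 12 ms pulse range are all illustrative assumptions, not values from any particular robot; a real system would rely on a measured calibration curve for its own hardware.

```python
def velocity_to_pulse_ms(velocity, min_ms=2.0, max_ms=12.0):
    """Map a MIDI velocity (1-127) to a solenoid drive pulse width in ms.

    Assumption: a longer pulse means a harder strike, and the relationship
    is linear. Both the range and the linearity are placeholders.
    """
    if not 1 <= velocity <= 127:
        raise ValueError("velocity must be in 1..127")
    fraction = (velocity - 1) / 126          # 0.0 for softest, 1.0 for loudest
    return min_ms + fraction * (max_ms - min_ms)


if __name__ == "__main__":
    for v in (1, 64, 127):
        print(v, velocity_to_pulse_ms(v))
```

Keeping the mapping in one small function makes it easy to swap in a lookup table once real measurements are available.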

Advanced Control Systems & AI

This is the brain. Pre-programmed sequences were once the norm, but modern control systems increasingly leverage Machine Learning (ML) and AI for:

Performance Nuance: Learning subtle dynamics, vibrato techniques, and human-like expressive variations beyond simple MIDI reproduction.

Real-time Adaptation: AI algorithms can analyze acoustic feedback from the instrument or environment (like a piano's resonance) and adjust playing parameters in real-time for optimal sound.

Generative Composition: AI can compose original musical phrases for the robot to play, based on learned styles or entirely novel parameters.
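The real-time adaptation idea above can be sketched as a simple feedback loop: measure how loud the last note was, compare it with a target, and nudge the strike force accordingly. This is only a toy proportional controller under stated assumptions. The names `adapt_strike_force` and `simulate`, the gain, and the linear "instrument" model (loudness = 40 + 40 × force) are all hypothetical; a real system would use microphone measurements and likely a learned or more elaborate controller.

```python
def adapt_strike_force(force, measured_db, target_db, gain=0.02,
                       min_force=0.0, max_force=1.0):
    """Return a corrected strike force (normalized 0..1) for the next note."""
    error = target_db - measured_db           # positive when the note was too quiet
    new_force = force + gain * error          # proportional correction
    return max(min_force, min(max_force, new_force))  # stay within actuator limits


def simulate(target_db=70.0, steps=50):
    """Run the loop against a made-up instrument model and return the result."""
    force = 0.2
    for _ in range(steps):
        measured = 40.0 + 40.0 * force        # toy stand-in for a microphone reading
        force = adapt_strike_force(force, measured, target_db)
    return force, 40.0 + 40.0 * force


if __name__ == "__main__":
    final_force, final_db = simulate()
    print(f"force={final_force:.3f}, loudness={final_db:.2f} dB")
```

With these toy numbers the loop settles on the force that produces the target loudness; on real hardware the gain would need tuning to avoid overshoot.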

Unheard Melodies: Unique Capabilities Setting Musical Instrument Robots Apart

These robots transcend human limitations and open new creative avenues:

Superhuman Dexterity and Unwavering Consistency

Musical Instrument Robots achieve speeds and rhythmic precision impossible for humans. They can execute complex polyrhythms or microtonal sequences flawlessly for indefinite durations, making them ideal for highly intricate compositions and rigorous music production needs.
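As a rough illustration of why this kind of precision is easy for a machine, the sketch below computes exact onset times for a polyrhythm and the pitch of a microtonal step. The helper names `polyrhythm_onsets` and `edo_frequency` are hypothetical, and the tuning helper assumes an equal division of the octave with A = 440 Hz as the reference.

```python
from fractions import Fraction


def polyrhythm_onsets(a, b, bar_seconds):
    """Return sorted (time, voice) onsets for an a-against-b polyrhythm in one bar.

    Times are exact Fractions of a second, so no rounding error accumulates.
    """
    bar = Fraction(bar_seconds)
    events = [(bar * i / a, "A") for i in range(a)]
    events += [(bar * j / b, "B") for j in range(b)]
    return sorted(events)


def edo_frequency(step, divisions=24, base_hz=440.0):
    """Frequency of a step in an equal division of the octave (24 = quarter tones)."""
    return base_hz * 2 ** (step / divisions)


if __name__ == "__main__":
    for time, voice in polyrhythm_onsets(3, 2, 2):
        print(voice, float(time))
    print(edo_frequency(1))   # one quarter tone above the reference pitch
```

A scheduler could hand these onset times straight to the actuators, which is what lets a robot hold a 7-against-5 pattern as steadily as a simple pulse.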

Radically New Playing Techniques & Sonic Palettes

Unbound by human anatomy, robots can employ physically impossible techniques – striking a single piano key with multiple hammers simultaneously at different velocities/angles, bowing a single violin string at twenty precise points, or generating hyper-fast tremolos on a guitar. This creates entirely unique timbres and textures previously inaccessible.

Endurance & Accessibility

Robots can perform tirelessly in installations and provide consistent accompaniment for practice (e.g., perfectly timed drum tracks). They also bring live, physical music to settings that need round-the-clock operation or where stationing a human musician is impractical.

Beyond the Concert Hall: Pioneering Applications of Musical Instrument Robots

The impact extends far beyond mere performance novelty:

Revolutionizing Music Education

Imagine a robot perfectly demonstrating a challenging passage on a student's *actual* instrument at variable speeds, or providing hyper-accurate rhythmic backing. Companies like Yamaha are actively developing robotic systems for piano pedagogy, offering immediate, tangible physical demonstration. Similarly, AI-powered Musical Instrument Robots can adapt to a student's tempo, offering personalized practice experiences. This complements broader AI-driven learning tools, such as those described in "How Musical Dancing Robot Toys Are Using AI to Teach Your Kids Real Magic!", showcasing the diverse ways robotics and AI are entering education.
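One simple way a robot accompanist could follow a student's tempo is to smooth the time gaps between the notes it hears. The sketch below is an assumption-laden illustration rather than any vendor's method: the function name `estimate_bpm` is hypothetical, it assumes exactly one detected onset per beat, and it uses basic exponential smoothing in place of a full beat-tracking algorithm.

```python
def estimate_bpm(onset_times, smoothing=0.5):
    """Estimate tempo in BPM from note-onset timestamps given in seconds.

    Assumption: one onset per beat. Higher `smoothing` reacts faster to
    tempo changes; lower values give a steadier estimate.
    """
    if len(onset_times) < 2:
        raise ValueError("need at least two onsets")
    intervals = [t2 - t1 for t1, t2 in zip(onset_times, onset_times[1:])]
    smoothed = intervals[0]
    for interval in intervals[1:]:
        # Blend each new interval with the running estimate.
        smoothed = smoothing * interval + (1 - smoothing) * smoothed
    return 60.0 / smoothed


if __name__ == "__main__":
    steady = [0.0, 0.5, 1.0, 1.5]
    slowing = [0.0, 0.5, 1.0, 1.6, 2.2]
    print(estimate_bpm(steady))
    print(estimate_bpm(slowing))
```

The robot would then schedule its next notes from the estimated tempo, so that it eases off when the student slows down on a hard passage.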

Scientific Research & Acoustic Preservation

Researchers use Musical Instrument Robots to rigorously test acoustic theories about instrument construction, sound production, and resonance by executing perfectly repeatable experiments. They are also crucial in documenting rare playing techniques or the precise sonic characteristics of priceless historical instruments without subjecting them to excessive human wear.

Therapeutic Applications & Sensory Experiences

In therapeutic settings, interactive robotic instruments offer novel ways for individuals with physical or cognitive challenges to create and interact with music. Large-scale installations using coordinated robots create immersive, multi-sensory environments blending light and kinetic sound.

Ethical Resonance: Creativity, Authenticity, and The Future Musician

The rise of Musical Instrument Robots sparks profound debates:

Can a Robot Be a True Artist?

Does creativity require consciousness? Today's robots are extensions of human programming (or AI training), but the line blurs as AI becomes more generative. Are compositions created by an AI driving a Musical Instrument Robot the "authentic" voice of the machine? This challenges traditional notions of authorship and artistic intent.

Augmentation vs. Replacement?

The goal is rarely wholesale replacement of human musicians. Instead, robots serve as powerful tools: collaborators pushing human creativity, tireless session players, educators, and explorers of sonic frontiers humans cannot physically reach. They augment the musical ecosystem.

The Cutting Edge: Neural Synthesis & Empathic AI

The future lies in deeper integration of AI not just for control, but for *understanding*:

Neural Sound Generation: Moving beyond physical modeling, AI trained on vast libraries of acoustic instrument sounds could predict and generate nuanced timbres specifically tailored for robotic interaction, creating sounds no traditional instrument could produce.

Emotionally Responsive Performance: AI analyzing audience biometrics (facial expression, heart rate) could allow a Musical Instrument Robot to subtly adapt its playing style, tempo, or dynamics to influence or reflect the perceived emotional atmosphere of a room in real-time during live performance.

Advanced Human-Robot Improvisation: AI systems capable of deep listening and predictive modeling could engage in genuinely interactive and dynamic musical dialogues with human performers, reacting and developing ideas organically.

Frequently Asked Questions (FAQs)

What is the difference between a Musical Instrument Robot and a digital synthesizer or sampler?

A synthesizer or sampler electronically generates or reproduces sounds digitally. A Musical Instrument Robot physically interacts with a *real*, often acoustic, instrument (piano, guitar, drum, violin, etc.) using mechanical actuators to produce sound the same way a human would, preserving the authentic acoustic resonance and physical nuances of the instrument itself. It's about mechanical performance on existing instruments.

Can a Musical Instrument Robot learn to play on its own?

Not on its own. These robots require programming, pre-recorded MIDI data, or AI-driven instructions. However, sophisticated AI-powered systems can analyze music, learn patterns and styles, and even generate novel musical ideas *to play*, giving the *impression* of autonomous learning and creation. The physical execution is always programmed or AI-directed.

Are Musical Instrument Robots expensive? Who uses them?

Yes, they are typically complex and expensive, often found in research labs, advanced production studios, universities, large-scale art installations, or employed by avant-garde performers and composers. However, costs are gradually decreasing, making smaller-scale versions accessible for educational applications and dedicated musicians exploring new creative avenues.

Will Musical Instrument Robots replace human musicians?

Although these robots can automate some playing tasks, their primary role is not replacement but augmentation and exploration. They excel in repetitive tasks, superhuman precision, endurance, and discovering new sonic possibilities inaccessible to humans. They serve as powerful tools for composers, collaborators for performers, educators, and researchers. The human element of creative vision, emotional interpretation, and the irreplaceable connection in live performance remains paramount. Robots are unlikely to replace the soul of music.

The Musical Instrument Robot is not merely a high-tech gimmick; it's a revolutionary interface between the digital world and the rich, physical reality of acoustic instruments. By extending human capabilities and exploring sonic realms we could never reach alone, these machines are catalyzing unprecedented creative possibilities. As AI continues to evolve, the collaboration between human intention and robotic execution will likely redefine composition, performance, and the very tools we use to express ourselves musically. The orchestra of the future may well include both carbon and silicon performers, each bringing unique strengths to the stage. The melody of tomorrow is being composed today, one robotic note at a time.