Picture a robot that doesn't just move mechanically, but interprets the soulful crescendo of a symphony or the pulsating beat of electronic music through fluid, emotionally resonant dance. This is no longer science fiction—Musical Dancing Robots are transforming entertainment, therapy, and artistic expression by merging artificial intelligence with biomechanical elegance. In this deep dive, we'll uncover how these robotic performers decode complex auditory patterns into breathtaking physical poetry, and why their evolution marks a pivotal moment in human-machine collaboration.

What Exactly Is a Musical Dancing Robot?

Unlike traditional robots programmed for repetitive tasks, a Musical Dancing Robot is an AI-integrated system designed to perceive, interpret, and physically respond to musical input through choreographed movement. These machines combine real-time audio processing with advanced kinematics, allowing them to generate dance routines synchronized with rhythm, tempo, and even the emotional tone of the music. Because their primary purpose is expressive performance rather than industrial work, they serve as unique ambassadors between technology and art.

The Architecture of Artistry: How These Robots "Feel" Music

Neural Audio Processing

At the core lie deep learning systems trained on vast musical datasets. The audio signal is first decomposed into spectral components (typically a spectrogram computed with a short-time Fourier transform), and convolutional neural networks (CNNs) analyze that representation to identify tempo in beats per minute (BPM), melody contours, and instrumental layers. This allows a Musical Dancing Robot to distinguish a waltz from hip-hop and adapt its movements accordingly.
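As a minimal sketch of the spectral step, assuming a mono NumPy audio array, the code below estimates tempo with classical signal processing rather than a neural network: it computes a short-time spectrum, turns frame-to-frame increases in spectral energy (spectral flux) into an onset envelope, and picks the autocorrelation peak within a plausible BPM range. A CNN would typically consume a spectrogram like `mag` as its input; `estimate_bpm` and its default parameters are hypothetical.

```python
import numpy as np


def estimate_bpm(signal, sr, frame=1024, hop=512, bpm_range=(60.0, 180.0)):
    """Estimate tempo with spectral flux and autocorrelation (illustrative sketch)."""
    signal = np.asarray(signal, dtype=float)
    # Short-time magnitude spectrum: one Hann-windowed FFT per hop.
    window = np.hanning(frame)
    n_frames = 1 + (len(signal) - frame) // hop
    frames = np.stack([signal[i * hop:i * hop + frame] * window
                       for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1))  # shape: (n_frames, frame // 2 + 1)

    # Spectral flux: total positive change in magnitude between consecutive frames.
    flux = np.maximum(mag[1:] - mag[:-1], 0.0).sum(axis=1)
    flux -= flux.mean()

    # Autocorrelation of the onset envelope; a steady beat produces a peak
    # at the lag (in frames) equal to the beat period.
    ac = np.correlate(flux, flux, mode="full")[len(flux) - 1:]

    # Search only lags that correspond to tempos inside bpm_range.
    frame_rate = sr / hop  # onset-envelope samples per second
    min_lag = max(1, int(frame_rate * 60.0 / bpm_range[1]))
    max_lag = int(frame_rate * 60.0 / bpm_range[0])
    lag = min_lag + int(np.argmax(ac[min_lag:max_lag + 1]))
    return 60.0 * frame_rate / lag
```

On real recordings this kind of estimator often reports half or double the true tempo, one reason learned models are layered on top of the spectrogram.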

Kinetic Intelligence System

Motion generation hinges on reinforcement learning algorithms. Robots rehearse thousands of dance sequences in simulation, scoring each for fluidity and energy efficiency with a reward function. Combined with inertial measurement units (IMUs) and force sensors in their joints, the learned policies produce responsive motion that helps prevent falls during high-energy performances.
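The paragraph doesn't spell out what the reward function contains. As a rough sketch, assuming a reward that adds a bonus for landing moves on the beat and subtracts penalties for jerky motion (large joint accelerations), energy use (squared torque), and falling, a per-step reward might look like this. Every field name, weight, and threshold here is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StepObservation:
    """One simulation step; all fields are hypothetical, for illustration."""
    joint_accels: list[float]   # joint accelerations in rad/s^2
    joint_torques: list[float]  # joint torques in N*m
    on_beat: bool               # did a move land on a detected beat?
    fallen: bool                # e.g. torso dropped below a height threshold


def dance_reward(obs: StepObservation,
                 w_smooth: float = 0.01,
                 w_energy: float = 0.001,
                 beat_bonus: float = 1.0,
                 fall_penalty: float = 100.0) -> float:
    """Weighted reward favoring beat-aligned, smooth, energy-efficient motion."""
    # Large accelerations serve as a proxy for jerky, non-fluid motion.
    smoothness_cost = sum(a * a for a in obs.joint_accels)
    # Squared torque serves as a proxy for electrical energy use.
    energy_cost = sum(t * t for t in obs.joint_torques)
    reward = -w_smooth * smoothness_cost - w_energy * energy_cost
    if obs.on_beat:
        reward += beat_bonus
    if obs.fallen:
        reward -= fall_penalty  # falling outweighs every other term
    return reward
```

Making the fall penalty much larger than the other terms is a common design choice: the policy learns that staying upright matters more than any single flourish.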

From Labs to Concert Halls: A Revolutionary Timeline

The watershed moment arrived in 2008, when Honda's ASIMO conducted the Detroit Symphony Orchestra in a live performance with human musicians. By 2018, Boston Dynamics' Atlas could perform backflips, and in 2020 it danced to music alongside its robotic stablemates, showcasing unprecedented agility. Today, ETH Zurich's Musical Dancing Robots utilize generative adversarial networks (GANs) to create original choreography, while startups like GrooveBot deploy swarm robotics for multi-robot ballet. This evolution parallels AI's leap from rule-based systems to creative partners.

Game-Changing Applications Beyond Entertainment

In pediatric hospitals, Sony's Musical Dancing Robots assist physical therapy: their predictable motions help children with motor disorders mimic movements. Autism centers report breakthroughs in emotional connection when non-verbal patients interact with robots moving to customized playlists. Meanwhile, artists like Kanye West and Björk incorporate robotic dancers into concerts, creating multi-sensory experiences where movement becomes a visual extension of sound. In education, MIT's Musical Dancing Robot kits teach students algorithmic thinking through choreography programming.

Breakthrough Engineering: How Movement Is Created

Step 1: Music Deconstruction - Audio is transcribed into MIDI data with a pretrained transcription model (such as Google Magenta's Onsets and Frames) and tagged with emotion classifications (e.g., "joyful" or "melancholic").

Step 2: Motion Mapping - AI maps musical features to predefined "movement primitives" (e.g., staccato notes trigger sharp limb motions).

Step 3: Balance Optimization - Torque controllers, guided by reinforcement learning, continuously adjust the robot's center of mass during spins or jumps.

Step 4: Artistic Refinement - Generative algorithms introduce improvisational variations to avoid repetitive sequences.
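Steps 2 and 4 can be illustrated together in a few lines of Python. This sketch assumes a hypothetical `PRIMITIVES` table keyed by note articulation and a hypothetical `choose_moves` helper; real systems use far richer features than articulation alone, and the "improvisation" here is simply a seeded random choice that refuses to repeat the previous move.

```python
import random

# Hypothetical movement-primitive library: articulation -> candidate moves.
PRIMITIVES = {
    "staccato": ["arm_snap", "head_tick", "wrist_flick"],
    "legato": ["arm_wave", "torso_sway", "slow_turn"],
}


def choose_moves(articulations, seed=0):
    """Map each note's articulation to a primitive (Step 2), adding random
    variation that never repeats the previous move back-to-back (Step 4)."""
    rng = random.Random(seed)  # seeded so a performance is reproducible
    moves = []
    for art in articulations:
        options = PRIMITIVES[art]
        if moves and len(options) > 1:
            # Exclude the move just performed to avoid repetitive sequences.
            options = [m for m in options if m != moves[-1]]
        moves.append(rng.choice(options))
    return moves
```

Seeding the generator keeps rehearsals repeatable; changing the seed yields a different but still rule-abiding variation.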

For example, Toyota's Partner Robots use predictive motor control systems that anticipate beat drops 0.5 seconds before humans perceive them, enabling perfectly timed surprises.
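The details of Toyota's controller aren't given here, but the core timing arithmetic of any predictive scheduler is simple: with a steady tempo, beats fall every 60/BPM seconds, so a motor command must be issued one actuator delay before the predicted beat. The sketch below assumes a constant tempo and a known, fixed actuator latency; `schedule_move` and its parameters are hypothetical.

```python
def schedule_move(last_beat_s: float, bpm: float, now_s: float,
                  actuator_latency_s: float) -> tuple[float, float]:
    """Return (next_beat_time, command_time) so a move lands on the beat.

    Assumes a steady tempo: beats occur every 60/bpm seconds after last_beat_s.
    """
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    period = 60.0 / bpm
    # Find the first upcoming beat whose command can still be sent in time.
    k = 1
    while last_beat_s + k * period - actuator_latency_s < now_s:
        k += 1
    next_beat = last_beat_s + k * period
    return next_beat, next_beat - actuator_latency_s
```

At 120 BPM, for example, a robot whose servos need 150 ms to respond must send the command at 10.35 s to hit a beat predicted for 10.5 s; if that moment has already passed, it targets the following beat.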

Ethical Frontiers and Technical Challenges

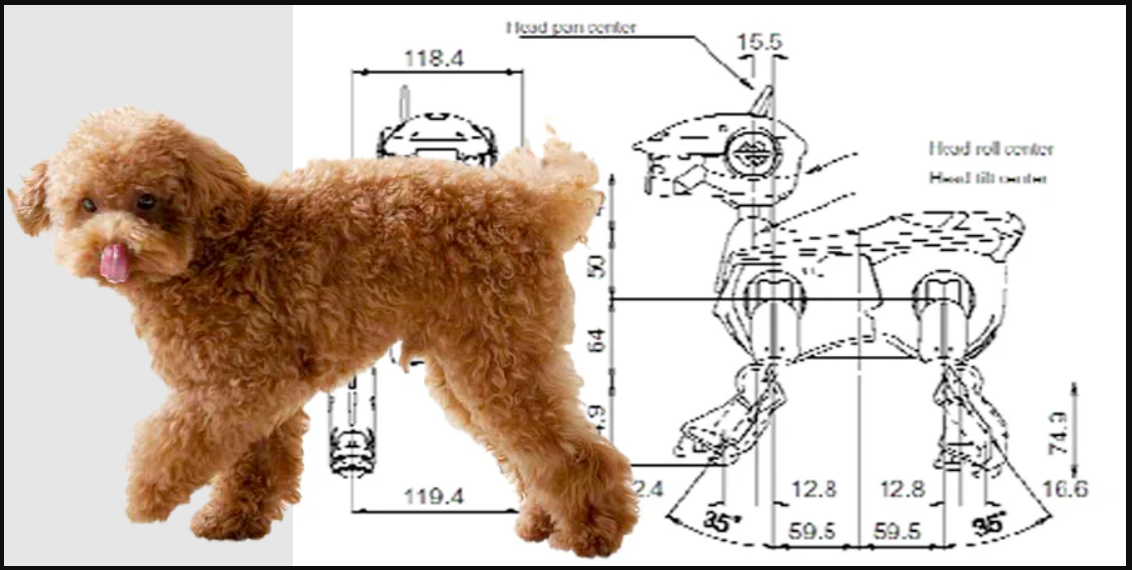

Developers face fascinating dilemmas: Should robots prioritize technical precision over "human-like" imperfections that convey emotion? How do we prevent culturally biased choreography when training data favors Western dance forms? Current limitations include battery constraints that restrict performance duration and compromised balance on uneven surfaces. MIT's recent solution, modular robots that transform into bipedal or quadrupedal forms, offers intriguing flexibility for diverse performance environments.

Future Visions: Where Human and Machine Creativity Converge

By 2030, expect "mixed-reality" performances where robot dancers interact with holographic partners and respond to crowd biometrics via venue sensors. Startups like Artlytic are developing shared choreography platforms where humans and AI co-create routines, fundamentally redefining artistic authorship. These innovations will extend beyond entertainment: Rehabilitation clinics plan to use emotion-sensitive Musical Dancing Robots that adapt routines based on patient pain biomarkers.

DIY Corner: Build a Miniature Musical Dancer

Materials: Raspberry Pi 4, MG90S servo motors, MPU-6050 IMU (gyroscope and accelerometer), USB microphone (the Pi has no analog audio input), 3D-printed limbs.

Steps:

Assemble the robotic skeleton with 4-6 servo-driven joints.

Feed the microphone signal to a Python script that uses FFT-based spectral analysis to detect BPM.

Program a movement library that maps BPM ranges to servo angles.

Implement fall prevention using gyroscope feedback loops.

Train a CNN classifier to recognize musical genres (starter dataset: GTZAN).
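The movement-library and fall-prevention steps can be prototyped as plain Python before any hardware is attached. The sketch below assumes a hypothetical `MOVE_LIBRARY` of BPM ranges and angle patterns, and uses a complementary filter, a common way to fuse a gyroscope's rate with an accelerometer's tilt reading, to shrink the dance amplitude as the robot leans. Reading the MPU-6050 over I2C and driving the MG90S servos are left to whichever driver library you use; those calls are not shown, and all thresholds are illustrative guesses.

```python
import math

# Hypothetical movement library: (min_bpm, max_bpm) -> servo angle pattern (degrees).
MOVE_LIBRARY = [
    ((0, 90), [80, 100]),              # slow tempo: gentle sway
    ((90, 130), [60, 120, 90]),        # medium tempo: wider swing
    ((130, 300), [45, 135, 45, 135]),  # fast tempo: large, quick swings
]


def pattern_for_bpm(bpm):
    """Return the angle pattern whose BPM range contains bpm."""
    for (low, high), angles in MOVE_LIBRARY:
        if low <= bpm < high:
            return angles
    return [90]  # outside every range: hold a neutral pose


class ComplementaryFilter:
    """Fuse gyro rate (deg/s) and accelerometer tilt into a pitch estimate (deg)."""

    def __init__(self, alpha=0.98):
        self.alpha = alpha  # weight on the integrated gyro vs. the accelerometer
        self.pitch = 0.0

    def update(self, gyro_rate_dps, accel_x_g, accel_z_g, dt):
        # Tilt implied by gravity; axis choice and sign depend on sensor mounting.
        accel_pitch = math.degrees(math.atan2(accel_x_g, accel_z_g))
        # Gyro integration is smooth but drifts; the accelerometer corrects the drift.
        gyro_pitch = self.pitch + gyro_rate_dps * dt
        self.pitch = self.alpha * gyro_pitch + (1 - self.alpha) * accel_pitch
        return self.pitch


def safe_angles(angles, pitch_deg, max_tilt_deg=15.0, neutral=90.0):
    """Scale the pattern toward neutral as tilt approaches max_tilt_deg."""
    scale = max(0.0, 1.0 - abs(pitch_deg) / max_tilt_deg)
    return [neutral + (a - neutral) * scale for a in angles]
```

In a control loop you would call `update` at a fixed rate with fresh sensor readings, then send `safe_angles(pattern_for_bpm(bpm), filt.pitch)` to the servo driver, so the robot dances smaller as it tips and freezes in a neutral pose past the tilt limit.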

Note: Advanced builders can integrate OpenAI's Jukebox for AI-generated music responses.

Musical Dancing Robot FAQs

Can these robots improvise to live music they've never heard?

Leading models like Sony's DanceBot use few-shot learning, generating novel choreography by combining learned "movement phrases" based on real-time analysis of musical novelty and emotional valence.
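How DanceBot actually combines phrases isn't documented here. A much simpler stand-in, shown below, is nearest-neighbor retrieval: tag each learned phrase with the musical features it was learned from, then, for each analyzed segment of unfamiliar music, pick among the closest phrases. This is not few-shot learning; the phrase names, the two features (energy and valence), and their values are all hypothetical.

```python
import math

# Hypothetical learned phrases, each tagged with the (energy, valence) of the
# music it was learned from: energy in 0..1, valence in -1..1.
PHRASES = {
    "bounce_combo": (0.9, 0.8),
    "spin_and_pose": (0.7, 0.3),
    "floor_sweep": (0.3, 0.4),
    "slow_reach": (0.2, -0.5),
}


def nearest_phrases(energy, valence, k=2):
    """Return the k phrases whose tagged features are closest to the live music."""
    def distance(name):
        e, v = PHRASES[name]
        return math.hypot(e - energy, v - valence)
    return sorted(PHRASES, key=distance)[:k]


def improvise(segments, k=2):
    """Chain one phrase per analyzed segment, rotating through the k nearest
    candidates so similar passages don't always reuse the same phrase."""
    routine = []
    for i, (energy, valence) in enumerate(segments):
        candidates = nearest_phrases(energy, valence, k)
        routine.append(candidates[i % len(candidates)])
    return routine
```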

How do they avoid collisions when performing in groups?

Swarm robotics systems employ ultra-wideband (UWB) positioning and predictive pathfinding similar to autonomous vehicles, creating dynamic "dance maps" with collision buffers while keeping the robots synchronized within a 20 ms tolerance.
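Predictive pathfinding itself is beyond a short example, but the two checks the answer mentions are easy to sketch: flag robot pairs whose planned positions come closer than a collision buffer at the same time step, and confirm that all robots hit a beat within the 20 ms tolerance. The 0.5 m buffer and the helper names are hypothetical, and positions are assumed to be (x, y) coordinates in meters, as a UWB system might report.

```python
import math
from itertools import combinations


def min_separation(path_a, path_b):
    """Smallest distance between two robots' planned (x, y) positions, step by step."""
    return min(math.dist(p, q) for p, q in zip(path_a, path_b))


def find_conflicts(paths, buffer_m=0.5):
    """Return index pairs of robots whose planned paths breach the collision buffer."""
    return [(i, j) for i, j in combinations(range(len(paths)), 2)
            if min_separation(paths[i], paths[j]) < buffer_m]


def in_sync(beat_times_s, tolerance_s=0.020):
    """True if every robot's move for the same beat landed within the tolerance."""
    return max(beat_times_s) - min(beat_times_s) <= tolerance_s
```

Comparing positions at matching time steps, rather than only checking where paths cross, matters for dance: two robots may legitimately pass through the same spot as long as they do it at different moments.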

Are there concerns about robots replacing human dancers?

ETH Zurich's 2023 study found these robots function best as creative collaborators. In experimental ballet performances, human-robot duets garnered 40% higher audience engagement scores than either alone, suggesting symbiotic potential.

What's the energy efficiency of current models?

Modern high-performance units consume approximately 150-300 W during active dancing, comparable to gaming laptops. Research in biomechanical energy recovery (harvesting kinetic energy from movements) could reduce this by up to 25% by 2026.
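To make those figures concrete, here is the arithmetic as a small Python sketch. The 500 Wh battery is a hypothetical capacity chosen only for illustration; the 150-300 W range and the 25% reduction are the figures quoted in the answer.

```python
def runtime_minutes(battery_wh: float, power_w: float) -> float:
    """Continuous dancing time for a battery capacity (Wh) and average draw (W)."""
    return 60.0 * battery_wh / power_w


def reduced_power(power_w: float, reduction: float = 0.25) -> float:
    """Average draw after energy recovery trims consumption by `reduction`."""
    return power_w * (1.0 - reduction)


BATTERY_WH = 500.0  # hypothetical battery capacity, for illustration only

for power in (150.0, 300.0):
    saved = reduced_power(power)
    print(f"{power:.0f} W -> {runtime_minutes(BATTERY_WH, power):.0f} min; "
          f"with 25% recovery: {saved:.1f} W -> {runtime_minutes(BATTERY_WH, saved):.0f} min")
```

Under these assumptions, a 25% cut brings the range down to 112.5-225 W and stretches a 100-minute show at the high end of the range to about 133 minutes.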

As these astonishing machines evolve from technical novelties to expressive partners, Musical Dancing Robots underscore a profound truth: Artificial intelligence, when fused with creative intention, can generate beauty that transcends code. Their dance is more than mechanics—it's a dialogue between silicon and soul.