Ever wonder why some AI tools refuse to answer certain questions or steer conversations in specific ways? The secret lies in the often opaque Cursor AI rules and C.AI rules governing these platforms. While surface functionalities grab headlines, it's the underlying governance frameworks that truly dictate an AI's behavior, ethical boundaries, and ultimate utility. Understanding these differences isn't just technical nitpicking – it's crucial for developers, businesses, and power users aiming to leverage AI safely and effectively. This deep dive reveals the nuanced, often unspoken battle of rule frameworks shaping your interactions.

Beyond the Interface: Demystifying AI Rule Frameworks

AI platforms like Cursor AI and Character.AI (C.AI) present user-friendly interfaces, but the real control sits behind the scenes. Governing rules dictate acceptable content, safety protocols, output limitations, and how these systems handle sensitive topics. **Cursor AI Rules**, often operating at the code level for developer tools, differ fundamentally from the conversation-focused C.AI Rules designed for mass consumer interaction. Grasping this core distinction clarifies what each platform is meant for and where its limits lie.

C.AI Rules: Governing the Digital Persona Landscape

Character.AI (C.AI) excels in user-generated conversational agents ("Characters"). Its rules primarily focus on:

Content Moderation: Preventing the generation of illegal, severely harmful, or non-consensual explicit content.

User Safety: Limiting interactions that could promote real-world harm, dangerous misinformation, or severe harassment. Its guidelines focus heavily on filtering outputs.

Persona Management: Enforcing restrictions based on the type of character (e.g., historical figures might have stricter rules).

Public Platform Constraints: Designed for broad accessibility, leading to broad safety filters that can sometimes overly restrict nuanced discussions.

C.AI often relies on sophisticated language model fine-tuning and real-time filtering systems. Enforcement is typically either reactive (acting after content is reported) or algorithmically pre-emptive (filtering a response before it is shown). As explored in detail in "Unlock C AI Bot Rules", intentionally bypassing these rules is complex and discouraged. Transparency on specific rule triggers is limited.

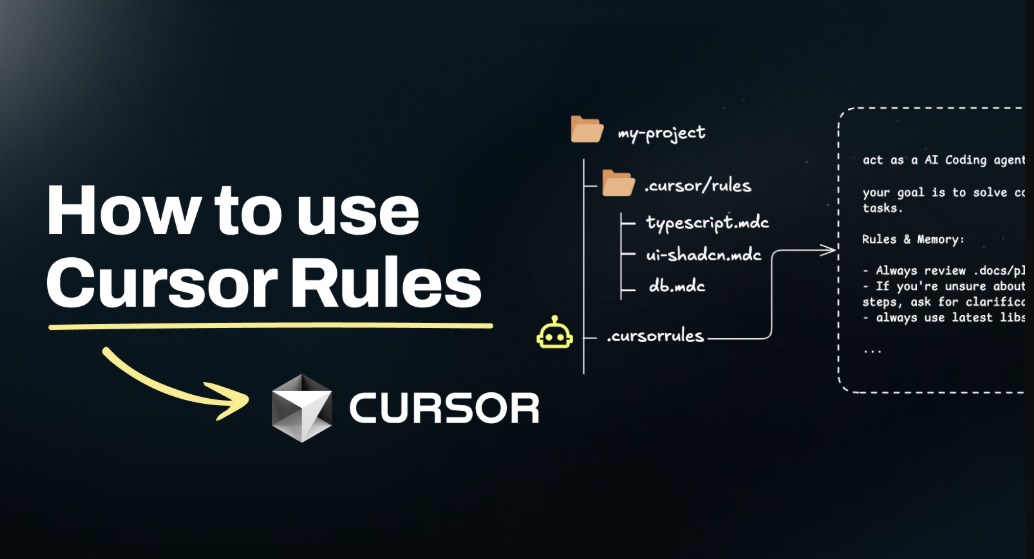

Cursor AI Rules: The Developer-Centric Command Layer

Cursor AI positions itself as an AI-powered code editor/assistant designed to integrate deeply into a developer's workflow. Consequently, Cursor AI Rules manifest differently:

Code Autonomy vs. Guardrails: Focus shifts from chat content moderation to ensuring generated code is functional, contextually relevant, and minimizes risks like suggesting insecure code patterns or introducing vulnerabilities.

Privacy & Security Paramount: Rules heavily emphasize data handling, ensuring code snippets and project context remain private and are not used for unintended model training without consent.

Contextual Understanding Depth: Rules governing how deeply Cursor AI analyzes project files (beyond the current open file) are critical for accurate assistance.

Intelligent Action Constraints: Defining boundaries for autonomous actions Cursor might take (e.g., modifying files, running commands, accessing APIs) based on explicit user instructions and established permissions.

Knowledge Cutoff Management: Rules defining how Cursor AI handles outdated information versus current best practices, often involving code repository indexing.

Cursor AI Rules are often more technical and configurable. They might involve setting project-level privacy flags, controlling file access scope, defining allowed autonomous actions, and potentially integrating custom security linters. Implementation combines model instruction prompting with system-level constraints and user configuration.
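To make the "custom security linter" idea concrete, here is a minimal sketch of a check that could run over an AI suggestion before it is accepted. Everything in it is an assumption for illustration: the `PATTERNS` table, the `Finding` type, and `scan_suggestion` are hypothetical names, not part of Cursor, and the regexes are deliberately naive (a real linter would parse the code rather than pattern-match lines).

```python
import re
from typing import NamedTuple


class Finding(NamedTuple):
    line_no: int
    rule: str
    text: str


# Hypothetical, deliberately simple patterns for illustration only.
PATTERNS = {
    # SQL passed to execute() as an f-string, a common injection risk.
    "sql-built-with-f-string": re.compile(r"execute\(\s*f[\"']"),
    # Direct use of eval() on arbitrary input.
    "use-of-eval": re.compile(r"\beval\("),
}


def scan_suggestion(code: str) -> list[Finding]:
    """Return one Finding per (line, rule) pair that matches."""
    findings = []
    for line_no, line in enumerate(code.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append(Finding(line_no, rule, line.strip()))
    return findings


suggestion = (
    'cur.execute(f"SELECT * FROM users WHERE id = {user_id}")\n'
    'cur.execute("SELECT * FROM users WHERE id = ?", (user_id,))\n'
)
findings = scan_suggestion(suggestion)
for f in findings:
    print(f"line {f.line_no}: {f.rule}: {f.text}")
```

Only the first line of the sample is flagged; the parameterized query on the second line passes. The point is the shape of the workflow (suggestion in, findings out), not the quality of these particular patterns.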

Head-to-Head: Decoding the Rule Differences

| Aspect | C.AI Rules | Cursor AI Rules |
|---|---|---|
| Primary Purpose | Prevent harmful/dangerous conversational outputs; Moderate UGC personas. | Ensure code security/privacy; Guide relevant, safe code generation; Govern tool actions. |
| Core User Concern | Conversational safety, bias, filter accuracy ("false positives"). | Intellectual Property protection, preventing insecure code, managing tool autonomy. |
| Rule Implementation Layer | Primarily applied during/after response generation (filtering/fine-tuning). | Integrated into prompt context, tool permissions, and the underlying model architecture. |
| Transparency & User Control | Generally low; Opaque filters; Limited user adjustment. | Higher (for developers); Project settings, privacy flags, permissions configuration often available. |
| Consequence of Rule Violation (User Side) | Warning, interaction block, suspension. | Warning, action block, insecure code flagged, tool feature disabled for task. |
| Key Enforcement Method | Output filtering, model fine-tuning. | Prompt engineering, system-level permissions, IDE integration constraints. |

The Ethics Angle: How Rules Shape Responsible AI

Both platforms embed ethics, but from different lenses. C.AI rules grapple with the ethical minefield of unrestricted conversational AI – preventing impersonation, hate speech, and psychological manipulation. **Cursor AI Rules**, however, focus on software development ethics: preventing the creation of insecure or malicious code, respecting licensing, and safeguarding proprietary code. This fundamental divergence showcases how AI rule frameworks are inherently tied to the application domain. The ethical considerations driving C.AI Rules are publicly debated for their impact on free expression, while Cursor AI Rules concern software integrity and corporate security.

Configurability: Who Holds the Reins?

This is a major practical divergence. C.AI offers users minimal control over its core rule framework; rules are applied universally by the platform. While "Character" creators have *some* influence via persona definitions, they cannot relax fundamental platform safety rules like allowing violent content. Conversely, Cursor AI Rules inherently offer developers greater configurability:

Project-Level Privacy: Defining if code should be sent to the AI model or processed locally.

File Access Permissions: Controlling which files within a project Cursor AI can analyze for context.

Autonomous Actions: Enabling/disabling features like auto-run or file modification.

Model Selection & Parameters: Potentially allowing the use of models with different inherent rule sets.

This configurable nature aligns with Cursor AI's audience – professionals who need to enforce their organization's specific security and privacy standards.
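One way to picture these knobs is as a small, explicit settings object that a team checks into its repository. The sketch below is purely illustrative: `ProjectRules` and all of its field names are hypothetical and do not correspond to Cursor's actual settings or file formats. It simply shows how privacy, file scope, and autonomy could be expressed as data, with blocked paths taking priority over readable ones.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class ProjectRules:
    """Hypothetical project-level rule set; field names are illustrative only."""

    local_only: bool = True                        # keep code off remote models
    readable_globs: tuple[str, ...] = ("src/*",)   # files the assistant may read
    blocked_globs: tuple[str, ...] = ("*.env", "secrets/*")
    allow_file_writes: bool = False                # autonomous edits off by default
    allow_command_runs: bool = False               # auto-run off by default

    def can_read(self, path: str) -> bool:
        # Deny rules win over allow rules, so a secret inside src/ stays hidden.
        if any(fnmatchcase(path, g) for g in self.blocked_globs):
            return False
        return any(fnmatchcase(path, g) for g in self.readable_globs)


rules = ProjectRules()
for path in ("src/app/main.py", "src/.env", "README.md"):
    print(path, "->", "readable" if rules.can_read(path) else "hidden")
```

Defaulting every permission to the most restrictive value means a team has to opt in to each capability, which matches how organizations usually want security settings to behave.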

Under the Hood: How Rules Are Technically Enforced

C.AI Enforcement Mechanics

C.AI primarily uses a combination of:

Fine-tuning: Further training a pre-trained base model on datasets curated to avoid harmful outputs.

Real-time Filtering: Employing secondary classifiers or rules engines that scan generated responses before they appear, blocking or rewriting non-compliant text.

User Flagging & Retroactive Action: Relying on community reporting, followed by human moderation or model retraining.

Note: C.AI's specific implementation details are proprietary and constantly evolving, but the pattern aligns with major conversational AI platforms.
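The real-time filtering step described above follows a generic "generate, then check, then show" pattern. Here is a minimal sketch of that pattern, not C.AI's actual implementation (which is proprietary). The keyword-based `classify` function is a toy stand-in for a trained safety classifier, and `BLOCKED_TERMS`, `Verdict`, and `moderated_reply` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable

# Toy stand-in for a trained classifier: placeholder terms, not a real policy.
BLOCKED_TERMS = ("forbidden-topic", "restricted-request")
REFUSAL = "Sorry, I can't help with that."


@dataclass
class Verdict:
    allowed: bool
    reason: str = ""


def classify(text: str) -> Verdict:
    """Flag a draft response if it contains any blocked term."""
    lowered = text.lower()
    for term in BLOCKED_TERMS:
        if term in lowered:
            return Verdict(False, f"matched blocked term: {term!r}")
    return Verdict(True)


def moderated_reply(generate: Callable[[str], str], prompt: str) -> str:
    """Generate a draft, then check it before anything reaches the user."""
    draft = generate(prompt)
    if not classify(draft).allowed:
        return REFUSAL
    return draft


# Fake "models" standing in for a real language model call.
print(moderated_reply(lambda p: "Hello there!", "hi"))
print(moderated_reply(lambda p: "Here is a FORBIDDEN-TOPIC answer", "hi"))
```

Because the check runs on the draft rather than on the user's prompt, a harmless question can still be blocked if the model's answer trips the filter, which is one source of the "false positives" users complain about.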

Cursor AI Rules Enforcement Mechanics

Cursor AI leverages its integration into the developer environment:

Contextual Prompt Engineering: Crucially, every request sent to the underlying AI model (like GPT-4) includes specific instructions derived from the project's Cursor AI rules – e.g., "Do not suggest code known to have security vulnerabilities like SQL injection", "Respect licensing information within referenced files".

System-Level Constraints: The Cursor IDE actively prevents actions violating explicit permissions. E.g., blocking file writes if the autonomous edit feature is disabled.

Local Processing Options: Offering modes where sensitive code isn't transmitted externally, inherently enforcing privacy rules.

Code Analysis Integration: Potentially pairing AI suggestions with linters and security scanners that flag outputs violating project-specific quality or security rules.
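Two of the mechanisms above, prepending rules to each request and gating actions on permissions, can be sketched in a few lines. This is an illustration under stated assumptions, not Cursor's internals: `build_prompt`, `perform`, `Action`, and `ActionBlocked` are hypothetical names, and the prompt layout is invented for the example. The two rule strings are the ones quoted in the list above.

```python
from enum import Enum


class Action(Enum):
    READ_FILE = "read_file"
    WRITE_FILE = "write_file"
    RUN_COMMAND = "run_command"


class ActionBlocked(Exception):
    """Raised when the tool tries something the project has not permitted."""


PROJECT_RULES = [
    "Do not suggest code known to have security vulnerabilities like SQL injection.",
    "Respect licensing information within referenced files.",
]

# Hypothetical permission set: read-only, autonomous edits disabled.
ALLOWED_ACTIONS = {Action.READ_FILE}


def build_prompt(user_request: str, rules: list[str]) -> str:
    """Prepend the project rules to the user's request (prompt-level rule)."""
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    return f"Project rules:\n{rule_lines}\n\nRequest:\n{user_request}"


def perform(action: Action, target: str, allowed: set[Action]) -> str:
    """Refuse any action outside the allowed set (system-level constraint)."""
    if action not in allowed:
        raise ActionBlocked(f"{action.value} on {target} is not permitted")
    return f"{action.value} on {target} permitted"


prompt = build_prompt("Add a query helper", PROJECT_RULES)
print(prompt)
print(perform(Action.READ_FILE, "src/db.py", ALLOWED_ACTIONS))
try:
    perform(Action.WRITE_FILE, "src/db.py", ALLOWED_ACTIONS)
except ActionBlocked as err:
    print("blocked:", err)
```

The difference between the two layers matters: a prompt rule is a request the model may or may not follow, while the permission check is enforced in ordinary code and cannot be talked around.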

FAQ: Unpacking Cursor AI Rules & C.AI Governance

Q1: Can I completely bypass C.AI rules?

A: It is highly discouraged and technically difficult. C.AI's filtering is deeply ingrained. While subtle rephrasing might occasionally bypass filters, intentional circumvention violates terms and often fails. See deep dives on these attempts: Unlock C AI Bot Rules? (Ethical Focus).

Q2: Do Cursor AI Rules mean my proprietary code is safe?

A: Cursor AI Rules and settings provide significant *tools* for safety (especially privacy modes & permissions). However, ultimate security depends on user configuration (enabling privacy features), trusting Cursor AI's privacy practices, understanding the limitations of local processing, and potentially supplementing with organizational policies. Configure settings diligently!

Q3: Which platform's rules are stricter?

A: "Strictness" depends on context. C.AI rules are broader for public safety, potentially blocking more subjective conversational topics. Cursor AI Rules are highly specific about security, privacy, and code integrity – potentially allowing freer *conceptual* discussion of code but strictly preventing dangerous outputs. Neither is universally "stricter"; their domains differ.

Q4: Are these rules driven by law or ethics?

A: Both. Platform rules aim to comply with regulations (like GDPR/HIPAA implications for data, copyright laws) and avoid illegal content. However, significant aspects stem from the platform's *ethical* choices on responsible AI use, addressing potential societal harm or misuse, even beyond legal minimums.

Conclusion: Choosing Your AI Governed by the Right Rules

The silent battle between **Cursor AI Rules and C.AI Rules** highlights a fundamental truth: **AI governance is never one-size-fits-all.** Your choice of platform is inherently a choice of rule framework. For engaging in open-ended, creative dialogue with digital personas, navigating C.AI's conversational guardrails is key. For developers seeking an intelligent pair programmer that respects their security perimeter, intellectual property, and workflow boundaries, understanding and configuring Cursor AI Rules is paramount. Recognizing these distinct rule sets – one sculpting dialogue, the other shaping code – empowers you to align your AI tool choice with your core priorities, be it creative freedom or secure productivity. Don't just interact with the AI; understand the rules that shape its interactions with you.