Imagine a future where symphonies aren't just performed flawlessly but are *composed* by algorithms, where tireless mechanical musicians collaborate with humans, and where the boundaries of musical expression are continuously pushed by non-biological performers. This isn't science fiction; it's a reality already taking shape with the rapid advancement of Instrument Playing Robots. These remarkable machines, blending cutting-edge robotics, sophisticated artificial intelligence, and deep musical understanding, are not merely novelties. They represent a fundamental shift in how music is created, performed, and even conceived. Instrument Playing Robots move beyond simple playback – they interpret, adapt, and, increasingly, generate music with nuance and expressiveness previously thought exclusive to human musicians. Join us as we explore the fascinating world of these robotic performers and uncover how they are tackling the most elusive challenge: conveying genuine musical emotion.

The Anatomy of an Instrument Playing Robot: Beyond the Metal Fingers

An Instrument Playing Robot isn't just a robot arm plonked near a piano. It's a complex, integrated system designed for a specific, highly dexterous task: interacting with a physical instrument to produce sound. Creating one involves solving unique challenges:

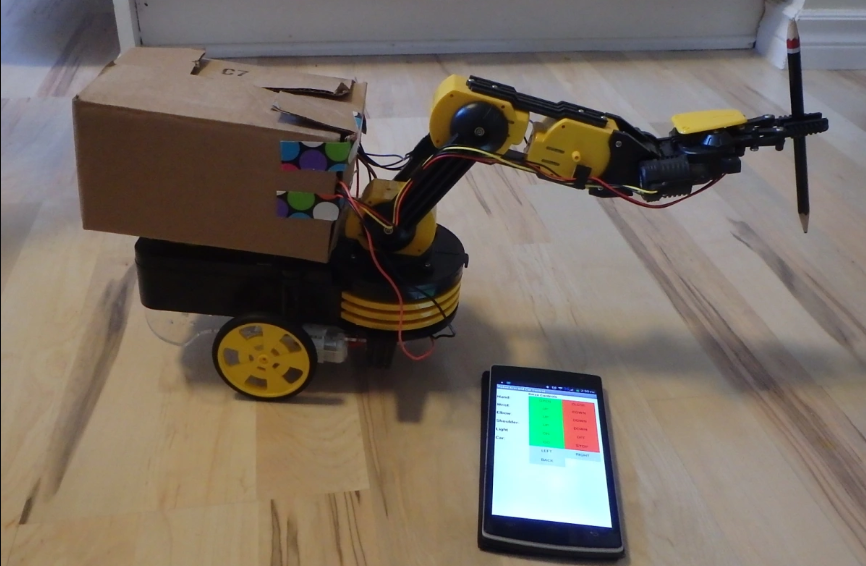

Sensorimotor Mastery: Replicating the fine motor skills, touch, and timing required for instruments like violin (bowing pressure, finger placement) or saxophone (embouchure simulation) demands incredibly precise actuators and force feedback sensors. Robots like those discussed in our exploration of AI-powered musical machines showcase this intricate engineering.

The Instrument Interface: Robotic mechanisms must physically interface with the instrument – hitting piano keys with the correct velocity, strumming guitar strings accurately, manipulating valves and keys on wind instruments, or striking a drum with varying intensity. This often requires custom end-effectors designed for specific instruments.

Core AI Processing: Beneath the physical interface lies the machine intelligence. This involves sophisticated software layers:

MIDI Interpretation/Score Reading: Converting digital scores or MIDI data into executable commands for the robot.

Performance Modeling: Algorithms that don't just play notes, but understand musical phrasing, dynamics (loudness variations), articulation (how notes are attacked and released), and rhythm. This layer injects the "human-like" variation.

Sensor Feedback Integration (Real-time AI): Processing data from force, pressure, audio, and visual sensors to adapt the playing instantly. Did the note sound sharp? Adjust finger position. Is the bow slipping? Apply corrective torque.
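As a rough illustration of the score-reading layer described above, the sketch below turns a list of note events into timed strike commands for a hypothetical piano-playing mechanism. The `NoteEvent` and `StrikeCommand` types, the normalized force scale, and the linear velocity-to-force mapping are assumptions made for this example, not a description of any particular robot.

```python
from dataclasses import dataclass


@dataclass
class NoteEvent:
    pitch: int           # MIDI note number (60 = middle C)
    velocity: int        # MIDI velocity, 1-127
    start_beat: float    # onset position in beats
    length_beats: float  # written duration in beats


@dataclass
class StrikeCommand:
    key_index: int       # 0 = lowest key of an 88-key piano (MIDI note 21)
    force: float         # normalized 0.0-1.0 (hypothetical actuator scale)
    press_at_s: float    # when to press the key, in seconds
    release_at_s: float  # when to release it, in seconds


LOWEST_KEY, HIGHEST_KEY = 21, 108  # MIDI range of a standard 88-key piano


def to_commands(events, tempo_bpm):
    """Convert note events into time-stamped commands at a fixed tempo."""
    seconds_per_beat = 60.0 / tempo_bpm
    commands = []
    for ev in sorted(events, key=lambda e: e.start_beat):
        if not LOWEST_KEY <= ev.pitch <= HIGHEST_KEY:
            continue  # skip notes the instrument cannot physically play
        commands.append(StrikeCommand(
            key_index=ev.pitch - LOWEST_KEY,
            force=ev.velocity / 127.0,  # assumed linear mapping
            press_at_s=ev.start_beat * seconds_per_beat,
            release_at_s=(ev.start_beat + ev.length_beats) * seconds_per_beat,
        ))
    return commands
```

A real system would replace the linear force mapping with a calibration measured on the actual instrument, since key response is rarely linear.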

This integration of high-precision hardware and adaptive, musical intelligence defines a true Instrument Playing Robot.
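The adaptive side of that integration can be pictured as a control loop: measure an error, nudge an actuator, and repeat. The sketch below applies plain proportional control to the "did the note sound sharp?" case. The ideal-string pitch model standing in for a sensor, the gain, and the tolerance are all assumptions chosen for illustration; a real system would likely use a more elaborate controller and real audio analysis.

```python
import math


def cents_error(measured_hz, target_hz):
    """Pitch error in cents (positive = sharp, negative = flat)."""
    return 1200.0 * math.log2(measured_hz / target_hz)


def string_pitch_hz(finger_mm, open_hz=440.0, string_length_mm=330.0):
    """Toy sensor model: pitch of an ideal string stopped finger_mm from the nut."""
    return open_hz * string_length_mm / (string_length_mm - finger_mm)


def settle_finger(finger_mm, target_hz, gain_mm_per_cent=0.1,
                  tolerance_cents=2.0, max_steps=20):
    """Repeat proportional corrections until the pitch is within tolerance."""
    for _ in range(max_steps):
        error = cents_error(string_pitch_hz(finger_mm), target_hz)
        if abs(error) <= tolerance_cents:
            break
        # Sharp (positive error): slide toward the nut to lengthen the string.
        finger_mm -= gain_mm_per_cent * error
    return finger_mm


final_mm = settle_finger(finger_mm=112.0, target_hz=660.0)
print(round(final_mm, 2), "mm,",
      round(cents_error(string_pitch_hz(final_mm), 660.0), 2), "cents off")
```

The gain is kept small enough that each step removes only part of the error, which avoids overshooting the target position.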

Decoding the Score: How AI Interprets Music for Machines

Translating the abstract language of music – filled with emotion, context, and subtlety – into instructions a machine can physically execute is arguably the most fascinating challenge. How do Instrument Playing Robots "understand" what to play and *how* to play it?

The Learning Process: Many advanced Instrument Playing Robots leverage Machine Learning (ML) techniques:

Data Ingestion: They ingest massive datasets of musical scores, often annotated with performance nuances (e.g., "crescendo here," "staccato here," "rubato tempo"). Audio recordings of expert human performances are also analyzed.

Feature Extraction: AI models break down the music into identifiable components: pitch sequences, rhythmic patterns, harmonic structures, dynamic markings, and expressive instructions.

Performance Style Modeling: Using techniques like deep learning, the system learns correlations between the notated score, expressive markings, and the resulting sonic output. It learns, for instance, that a "fortissimo" marking on a particular passage for a trumpet requires a specific combination of air pressure and lip tension within its mechanical system, creating a specific powerful timbre. Similarly, it learns the subtle timing variations that define a "ritardando" (slowing down) at the end of a phrase.

Generative Potential: This understanding can be used generatively. Once trained on vast corpora, some systems can compose original pieces in specific styles or improvise coherently based on learned rules and patterns. This moves robots beyond simple mimicry.
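To make the idea of generating from learned patterns concrete, here is a deliberately tiny stand-in: a first-order Markov chain that records which pitch follows which in a few training melodies, then samples a new line. This is far simpler than the deep learning models mentioned above, and the training melodies and function names are invented for the example.

```python
import random
from collections import defaultdict


def learn_transitions(melodies):
    """Record, for every pitch, the pitches that followed it in the training data."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return transitions


def generate(transitions, start, length, seed=None):
    """Sample a melody by repeatedly choosing a pitch seen after the current one."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:
            break  # no known continuation for this pitch
        melody.append(rng.choice(options))
    return melody


# Two made-up training phrases as MIDI note numbers (C major, around middle C).
training = [
    [60, 62, 64, 65, 67, 65, 64, 62, 60],
    [60, 64, 67, 64, 60, 62, 64, 62, 60],
]
model = learn_transitions(training)
print(generate(model, start=60, length=8, seed=1))
```

Every step in the output is a transition that occurred somewhere in the training data, yet the sequence as a whole need not match either training phrase, which is the basic sense in which such systems go beyond mimicry.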

This level of musical interpretation allows Instrument Playing Robots to move beyond rote playback towards delivering performances imbued with learned expressive characteristics.
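The timing side of such expressive characteristics can be sketched without any learning at all. The function below stretches the last few beats of a phrase by progressively lowering the tempo, which is one simple way to render a ritardando. The linear tempo ramp and the default final tempo of 60% are assumptions; a trained model would instead estimate the curve from recorded performances.

```python
def ritardando_onsets(num_beats, tempo_bpm, slow_last=4, final_ratio=0.6):
    """Return the onset time in seconds of each beat.

    The last `slow_last` beats are played at a tempo that falls linearly
    from the base tempo to `final_ratio` times the base tempo.
    """
    onsets = []
    t = 0.0
    for beat in range(num_beats):
        onsets.append(t)
        beats_into_rit = beat - (num_beats - slow_last) + 1
        if beats_into_rit > 0:
            fraction = beats_into_rit / slow_last
            ratio = 1.0 - (1.0 - final_ratio) * fraction
        else:
            ratio = 1.0  # still before the slowdown starts
        t += 60.0 / (tempo_bpm * ratio)  # duration of this beat
    return onsets


onsets = ritardando_onsets(num_beats=8, tempo_bpm=120)
gaps = [round(b - a, 3) for a, b in zip(onsets, onsets[1:])]
print(gaps)  # spacing between consecutive beats, widening toward the end
```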

Beyond Imitation: When Instrument Playing Robots Find Their Voice

While perfectly mimicking human virtuosos is impressive, the most groundbreaking potential lies in robots developing their *own* musical identity and capabilities. This transcends imitation and ventures into true musical agency:

Unique Sonic Textures: Robots aren't limited by human biology. A piano-playing robot could strike keys with velocities or combinations impossible for ten human fingers, creating entirely novel textures and rhythms. Wind instrument robots could sustain notes indefinitely or execute microtonal shifts with perfect precision.

AI-Driven Composition & Improvisation: Robots like Georgia Tech's *Shimon* (playing marimba) or Sony's experimental systems don't just play pre-written scores. Using advanced AI models trained on vast musical datasets, they can improvise complex melodies and harmonies in real time or generate entirely original compositions. Our exploration of AI shattering music's glass ceiling delves deeper into this creative explosion. Shimon, for example, uses neural networks trained on nearly 5,000 complete songs and more than 2 million motifs, riffs, and melodies across genres to generate novel ideas.

Hybrid Human-Robot Ensembles: The most compelling future involves collaboration. Instrument Playing Robots can act as responsive bandmates, reacting to human improvisation with generated complementary lines, maintaining perfect timekeeping, or adapting their playing style dynamically based on audio input from human performers. Projects like those from Princeton University demonstrate real-time adaptive play between humans and robotic pianists.
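One ingredient of a responsive bandmate is following the human player's tempo. The minimal sketch below assumes the system already receives beat onset times from an audio front end (not shown). It estimates tempo from the gap between the two most recent beats and smooths the estimate so that a single early or late beat does not jerk the robot's timing. The class name and smoothing factor are illustrative choices.

```python
class TempoFollower:
    """Track a human player's tempo from beat onset times (in seconds)."""

    def __init__(self, initial_bpm, smoothing=0.3):
        self.bpm = float(initial_bpm)
        self.smoothing = smoothing  # 0 = ignore new beats, 1 = trust only the latest
        self._last_onset = None

    def on_beat(self, onset_s):
        """Update the tempo estimate with a newly detected beat."""
        if self._last_onset is not None:
            interval = onset_s - self._last_onset
            if interval > 0:
                observed_bpm = 60.0 / interval
                # Exponential smoothing toward the observed tempo.
                self.bpm += self.smoothing * (observed_bpm - self.bpm)
        self._last_onset = onset_s
        return self.bpm

    def next_beat_time(self):
        """Predicted time of the next beat, for scheduling the robot's strike."""
        if self._last_onset is None:
            return None
        return self._last_onset + 60.0 / self.bpm


follower = TempoFollower(initial_bpm=120)
for onset in [0.0, 0.5, 1.0, 1.4, 1.8, 2.2]:  # the player speeds up after 1.0 s
    follower.on_beat(onset)
print(round(follower.bpm, 1), round(follower.next_beat_time(), 3))
```

A lower smoothing value makes the robot steadier but slower to follow deliberate tempo changes; choosing it is a musical decision as much as an engineering one.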

This evolution positions the Instrument Playing Robot not just as a tool, but as a distinct musical entity capable of novel creative output and profound interactive experiences.

Machine vs. Maestro: Exploring the Emotional Frontier

The most persistent debate surrounding Instrument Playing Robots centers on emotional expression: Can a machine genuinely convey feeling through music? While robots excel at precision, consistency, and even novel techniques, replicating the depth of human emotion remains the ultimate frontier. Current approaches involve:

Parameterizing Emotion: AI systems map abstract concepts like "joy," "sadness," or "anger" to sets of musical parameters: tempo (faster/slower), dynamics (louder/softer), articulation (sharper/smoother), harmonic choices (major/minor, dissonance), and timbral changes. By algorithmically varying these parameters based on an "emotion tag," robots can simulate expressive performances aligned with intended feelings.

Bio-Signal Integration (Emerging Frontier): Experimental projects explore connecting robots directly to human bio-signals. Imagine a robotic string quartet modulating its vibrato and phrasing in real-time based on the measured heart rate or galvanic skin response of a listener or conductor – creating a biofeedback loop aimed at heightening emotional resonance.

The Audience Perception Factor: Ultimately, emotional expression exists in the ear and mind of the beholder. Studies suggest that listeners *can* perceive and report emotional content in music played by robots, particularly when informed of the intended emotion. Although it arises differently from emotion conveyed by a human performer, this perceived emotion is valid and demonstrates the robot's communicative power, even if its origins are computational rather than introspective.
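The emotion-parameterizing approach described above can be illustrated with a small lookup table. The values below are invented for the example and are not drawn from any study or system. The point is only the shape of the approach: an emotion tag selects adjustments that are applied to a neutral performance.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Performance:
    tempo_bpm: float
    velocity: int        # MIDI-style loudness, 1-127
    articulation: float  # fraction of each note's written length actually held
    mode: str            # "major" or "minor"


# Illustrative values only (hypothetical, not measured).
EMOTION_PROFILES = {
    "joy":     {"tempo_scale": 1.20, "velocity_delta": 15,  "articulation": 0.70, "mode": "major"},
    "sadness": {"tempo_scale": 0.75, "velocity_delta": -20, "articulation": 0.95, "mode": "minor"},
    "anger":   {"tempo_scale": 1.30, "velocity_delta": 30,  "articulation": 0.50, "mode": "minor"},
}


def apply_emotion(neutral, tag):
    """Return a copy of the neutral performance adjusted for the given emotion tag."""
    profile = EMOTION_PROFILES[tag]
    # Clamp loudness to the valid MIDI velocity range.
    velocity = max(1, min(127, neutral.velocity + profile["velocity_delta"]))
    return replace(
        neutral,
        tempo_bpm=neutral.tempo_bpm * profile["tempo_scale"],
        velocity=velocity,
        articulation=profile["articulation"],
        mode=profile["mode"],
    )


neutral = Performance(tempo_bpm=100.0, velocity=80, articulation=0.85, mode="major")
print(apply_emotion(neutral, "sadness"))
```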

While debates about "true" emotion may persist, Instrument Playing Robots are demonstrably becoming powerful conveyors of musical expression designed to evoke specific human responses.

The Future Symphony: Where Instrument Playing Robots Take Us

The trajectory of Instrument Playing Robots points towards increasingly sophisticated, accessible, and integrated musical experiences:

Democratizing Performance & Composition: More affordable and compact robotic systems could make complex instrumentation accessible to schools, community centers, and individual artists without requiring virtuoso players for every part, enabling the creation of intricate music previously beyond their reach.

Immersive & Personalized Performances: Robots, combined with spatial audio and AI, could create dynamic, responsive environments where music adapts uniquely to individual listeners or evolves based on audience reaction in real-time during a live performance.

Archiving & Resurrecting Styles: Detailed robotic performances could preserve the playing styles of legendary musicians with unprecedented accuracy, allowing future generations to experience interpretations otherwise lost to time.

Fundamental Redefinition of Music: The unique capabilities of robots – superhuman speed, inhuman endurance, perfect synchronization across large numbers of instruments, and AI-generated novelty – will inevitably push musical composition and sound design in radical new directions, creating sonic landscapes impossible for purely human ensembles.

The Instrument Playing Robot stands as a powerful symbol of this future – a bridge between the precision of technology and the enduring human drive for emotional and creative expression through sound.

Frequently Asked Questions (FAQs)

1. Can an Instrument Playing Robot genuinely express emotion in its music?

While robots don't experience emotions like humans, they are becoming adept at conveying *representations* of emotion through music. Using sophisticated AI algorithms, they can manipulate parameters like tempo, dynamics, articulation, and harmony based on specific emotional models ("sad," "joyful," "tense"). Research shows audiences can perceive and connect with this expressively programmed output. The emotion is simulated based on learned patterns from human performances, and listeners often find the result powerful and communicative.

2. How much does a sophisticated Instrument Playing Robot cost?

Costs vary enormously based on complexity, the instrument played, and required AI capabilities. Simple, single-arm systems designed for specific tasks might cost tens of thousands of dollars. Highly sophisticated, multi-axis robots capable of nuanced expressiveness and AI interaction (like research prototypes from universities or Toyota's violin robot) represent multi-million dollar investments due to their custom engineering and advanced AI software. Mass-market musical robots for hobbyists are still emerging but becoming more accessible.

3. Can an Instrument Playing Robot compose original music, or is it limited to playing existing scores?

Yes, it can compose, and composition is a rapidly advancing frontier! Robots like *Shimon* (marimba) use AI models trained on vast libraries of existing music to generate novel melodies, harmonies, and rhythms. They can improvise solos or create complete musical pieces. While the original "inspiration" comes from learned patterns, the resulting music is unique and hasn't been played before. Humans often guide or curate the output, but the generative capability within these systems is increasingly sophisticated and autonomous.

4. Will Instrument Playing Robots replace human musicians?

Replacement isn't the likely outcome; transformation and expansion are. Robots excel at precision, endurance, accurate intonation and timing, and realizing AI-generated complexity. Humans bring irreplaceable elements: deep emotional introspection, personal narrative, spontaneity in the moment, and the unique physicality influencing their instrument's sound. The future is collaboration: robots as tireless ensemble members, creative partners generating ideas, or performers capable of realizing music impossible for humans alone. They open new avenues rather than solely occupying existing ones, changing the landscape without erasing the human element.