Have you ever watched in frustration as ChatGPT forgot your conversation history mid-discussion? Or tried to analyze a long document only to find your AI assistant could barely process the first few pages? This "AI amnesia" isn't a design flaw—it's a fundamental limitation in today's large language models. But what if you could give any AI an unforgettable memory with a single line of code?

The C AI Memory Extension revolution is here, and it's transforming artificial intelligence from forgetful tools into context-aware digital partners that remember everything. By breaking through the memory barriers that have constrained AI development, these technologies enable machines to maintain coherent, personalized interactions that evolve with every conversation.

The Memory Crisis in Modern AI

Traditional large language models are limited by a "context window": the maximum number of tokens the model can attend to at once, typically 128K tokens (about 100,000 Chinese characters) for advanced models like GPT-4 Turbo. Once this threshold is crossed, earlier information gets discarded, causing conversations to lose coherence and context. This bottleneck exists because the cost of standard self-attention grows quadratically with context length, so expanding the window natively would quickly require impractical hardware resources.
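To make the "discarded" part concrete, here is a minimal sketch of the sliding-window truncation many chat front ends apply before calling a model. The function names and the word-count token estimate are assumptions for illustration; real tokenizers produce different counts, and real products may truncate differently.

```javascript
// Rough token estimate (assumption for illustration): one token per word.
// Real tokenizers such as BPE give different counts.
function estimateTokens(text) {
  return text.split(/\s+/).filter(Boolean).length;
}

// Walk backwards from the newest message and keep as many as fit in the budget.
// Everything older than the first message that does not fit is dropped.
function fitToWindow(messages, maxTokens) {
  const kept = [];
  let used = 0;
  for (let i = messages.length - 1; i >= 0; i--) {
    const cost = estimateTokens(messages[i].content);
    if (used + cost > maxTokens) break;
    kept.unshift(messages[i]);
    used += cost;
  }
  return kept;
}

const history = [
  { role: "user", content: "My name is Dana and I prefer metric units" },
  { role: "assistant", content: "Noted, Dana" },
  { role: "user", content: "How far is a marathon" },
];

// With a budget of 8 "tokens", the first message no longer fits.
const windowed = fitToWindow(history, 8);
console.log(windowed.map((m) => m.content));
```

In this toy run the message that introduced the user's name and unit preference is the one that falls out of the window, which is exactly the kind of forgetting described above.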

Why AI Needs Memory Extension Technology

True intelligence requires memory—the ability to learn from past experiences, build knowledge, and apply context. Current AI systems operate with severe memory limitations:

The Human Memory Analogy

Human cognition uses multiple memory systems: short-term memory for immediate tasks, long-term memory for knowledge retention, episodic memory for specific experiences, and semantic memory for factual understanding. Traditional AI lacks this sophisticated architecture, making interactions feel shallow and disjointed.

The Business Impact

For enterprises, AI memory limitations translate into real-world constraints:

Customer service bots that reset context after 10 messages

Analytical tools unable to process full documents or datasets

Personal assistants that can't remember user preferences long-term

Memory Tensor's recent $14M funding round highlights the market's recognition that memory-enhanced AI represents the next competitive frontier in artificial intelligence.

Three Revolutionary Approaches to C AI Memory Extension

1. Supermemory: One-Line Code Infinite Memory

Supermemory's breakthrough approach acts as an "infinite memory plugin" for existing AI models. By inserting itself as a middleware layer, it intercepts and intelligently manages context:

Smart Chunking: Splits conversations into meaningful segments while preserving context

Dynamic Retrieval: Pulls only relevant historical context into the active window

Token Optimization: Reduces token usage by up to 90%, dramatically cutting costs
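The chunk-then-retrieve idea behind these three features can be sketched in a few lines. This is not Supermemory's implementation: the function names are hypothetical, and plain keyword overlap stands in for whatever relevance scoring a production system uses (typically vector embeddings).

```javascript
// Group sentences into chunks of at most `maxWords` words, never splitting a sentence.
function chunkBySentence(text, maxWords) {
  const sentences = text.match(/[^.!?]+[.!?]*/g) || [];
  const chunks = [];
  let current = [];
  let count = 0;
  for (const raw of sentences) {
    const sentence = raw.trim();
    if (!sentence) continue;
    const words = sentence.split(/\s+/).length;
    if (count + words > maxWords && current.length > 0) {
      chunks.push(current.join(" "));
      current = [];
      count = 0;
    }
    current.push(sentence);
    count += words;
  }
  if (current.length > 0) chunks.push(current.join(" "));
  return chunks;
}

function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9]+/g) || [];
}

// Score each chunk by how many distinct query words it contains; return the top k.
function retrieve(chunks, query, k) {
  const queryWords = new Set(tokenize(query));
  return chunks
    .map((chunk) => {
      const words = new Set(tokenize(chunk));
      let score = 0;
      for (const w of queryWords) if (words.has(w)) score++;
      return { chunk, score };
    })
    .filter((c) => c.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((c) => c.chunk);
}

const transcript =
  "The invoice is due on Friday. The supplier is based in Oslo. " +
  "Lunch was pasta. The invoice total is 400 euros.";
const chunks = chunkBySentence(transcript, 10);
const relevant = retrieve(chunks, "invoice total", 1);
console.log(relevant);
```

Only the retrieved chunk would be sent to the model alongside the new question, which is where the token savings come from: the rest of the history stays in storage instead of in the prompt.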

Implementation is shockingly simple—just change your API endpoint:

Original Code:

import OpenAI from "openai";
const client = new OpenAI({ baseURL: "https://api.openai.com/v1/" });

Memory-Enhanced Code:

import OpenAI from "openai";
const client = new OpenAI({ baseURL: "https://api.supermemory.ai/v3/https://api.openai.com/v1/" });

This single-line modification enables endless conversations and document analysis without memory loss.

2. MemoryOS: The AI Memory Operating System

Developed by researchers at Beijing University of Posts and Telecommunications, MemoryOS applies computer operating system principles to AI memory management:

Three-Layer Memory Architecture

Short-Term: Real-time conversation context

Mid-Term: Thematic organization of information

Long-Term: Personalized knowledge repository
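One way to picture the three layers is as a small tiered store in which information moves downward as it ages and is reused. The class below is a hypothetical sketch, not the MemoryOS API: the class name, the FIFO eviction, and the "promote after N recalls" rule are all assumptions chosen to keep the example short.

```javascript
// Hypothetical three-tier store. Names and promotion rules are illustrative
// assumptions, not taken from the MemoryOS code base.
class TieredMemory {
  constructor(shortTermCapacity = 3, promoteAfter = 2) {
    this.shortTermCapacity = shortTermCapacity;
    this.promoteAfter = promoteAfter;
    this.shortTerm = [];        // most recent turns, oldest first
    this.midTerm = new Map();   // topic -> { turns: string[], hits: number }
    this.longTerm = new Map();  // topic -> string[] kept as durable knowledge
  }

  // Add a turn. When short-term is full, the oldest turn moves to mid-term,
  // grouped under its topic.
  add(topic, text) {
    this.shortTerm.push({ topic, text });
    if (this.shortTerm.length > this.shortTermCapacity) {
      const evicted = this.shortTerm.shift();
      const entry = this.midTerm.get(evicted.topic) || { turns: [], hits: 0 };
      entry.turns.push(evicted.text);
      this.midTerm.set(evicted.topic, entry);
    }
  }

  // Look up a topic. Mid-term topics recalled often enough are promoted to long-term.
  recall(topic) {
    if (this.longTerm.has(topic)) return this.longTerm.get(topic);
    const entry = this.midTerm.get(topic);
    if (!entry) return [];
    entry.hits += 1;
    if (entry.hits >= this.promoteAfter) {
      this.longTerm.set(topic, entry.turns);
      this.midTerm.delete(topic);
    }
    return entry.turns;
  }
}

const memory = new TieredMemory(2, 2);
memory.add("diet", "User is vegetarian");
memory.add("travel", "User is flying to Lisbon");
memory.add("travel", "Flight is on Monday"); // pushes the "diet" turn into mid-term
memory.recall("diet"); // first recall: stays in mid-term
memory.recall("diet"); // second recall: promoted to long-term
console.log(memory.longTerm.get("diet"));
```

The design point is that each layer answers a different question: what was just said, what topics have come up, and what is durably true about this user.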

In benchmark testing, MemoryOS boosted conversation coherence scores by 49.11% (F1) and 46.18% (BLEU-1) while using 4x fewer LLM calls than alternatives.
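For readers unfamiliar with the metrics, F1 in this kind of evaluation is usually a token-overlap score between the model's answer and a reference answer. The sketch below shows the common token-level definition; it is an assumption that the benchmark uses this exact variant, and the function names are made up for the example.

```javascript
// Lowercase and split into alphanumeric tokens (a simplifying assumption;
// evaluation scripts often apply extra normalization).
function toTokens(text) {
  return text.toLowerCase().match(/[a-z0-9]+/g) || [];
}

// Token-level F1: harmonic mean of precision and recall over shared tokens,
// counting each reference token at most once.
function tokenF1(prediction, reference) {
  const pred = toTokens(prediction);
  const ref = toTokens(reference);
  if (pred.length === 0 || ref.length === 0) return 0;

  const refCounts = new Map();
  for (const t of ref) refCounts.set(t, (refCounts.get(t) || 0) + 1);

  let overlap = 0;
  for (const t of pred) {
    const left = refCounts.get(t) || 0;
    if (left > 0) {
      overlap++;
      refCounts.set(t, left - 1);
    }
  }
  if (overlap === 0) return 0;

  const precision = overlap / pred.length; // share of predicted tokens that are correct
  const recall = overlap / ref.length;     // share of reference tokens that were recovered
  return (2 * precision * recall) / (precision + recall);
}

// Precision 3/5, recall 3/3, so F1 is about 0.75.
const score = tokenF1("the flight is on Monday", "flight on Monday");
console.log(score.toFixed(2));
```

BLEU-1 is a related measure based on unigram precision, so both numbers reward answers that reuse the right words from earlier in the conversation.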

3. Hardware-Level Memory Expansion

For AI training rather than conversation, companies like YEESTOR are solving memory constraints at the hardware level. Their AI-MemoryX technology expands single-machine GPU memory capacity from the standard 48-64GB to as much as 10TB.

This revolution enables:

Single-machine fine-tuning of 70B+ parameter models

Cost reductions from millions to thousands of dollars

Democratization of large model training for smaller organizations
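A quick back-of-the-envelope calculation shows why a 48-64GB card falls short for a 70B model. The sketch assumes the widely used rule of thumb of roughly 16 bytes per parameter for full fine-tuning with mixed-precision Adam; it ignores activations, and techniques such as LoRA or quantization need far less. The function name is hypothetical.

```javascript
// Rule-of-thumb bytes per parameter for mixed-precision Adam (an assumption):
// 2 (fp16 weights) + 2 (fp16 gradients) + 12 (fp32 master weights, momentum, variance).
const BYTES_PER_PARAM_FULL_FINETUNE = 16;

// Memory for model state only, in gigabytes (1 GB = 1e9 bytes). Activations are excluded.
function trainingMemoryGB(paramCount, bytesPerParam = BYTES_PER_PARAM_FULL_FINETUNE) {
  return (paramCount * bytesPerParam) / 1e9;
}

const neededGB = trainingMemoryGB(70e9);     // 1120 GB of model state
const gpusAt64GB = Math.ceil(neededGB / 64); // 18 cards just to hold that state
console.log(neededGB, gpusAt64GB);
```

Under these assumptions the model state alone is a little over 1TB, which fits inside a 10TB pool on one machine but would otherwise have to be sharded across a multi-GPU cluster.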

Real-World Applications: Where C AI Memory Extension Shines

The practical applications of memory-enhanced AI are transforming industries:

Hyper-Personalized Digital Assistants

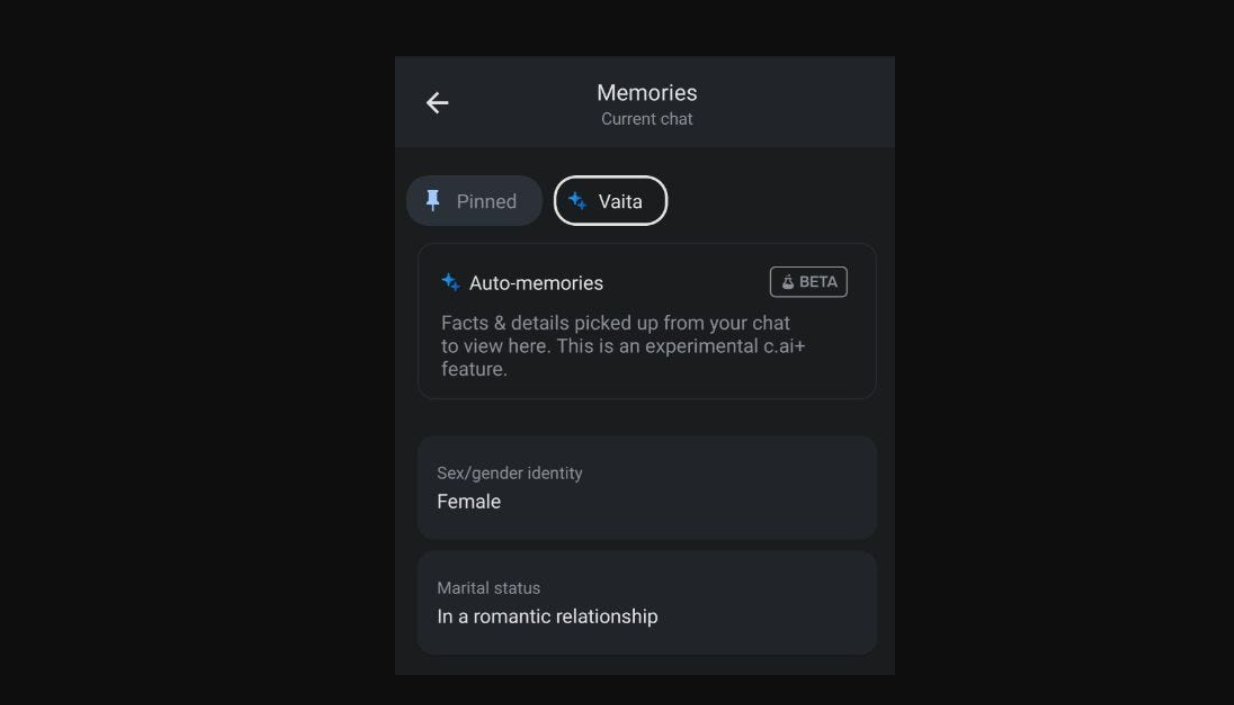

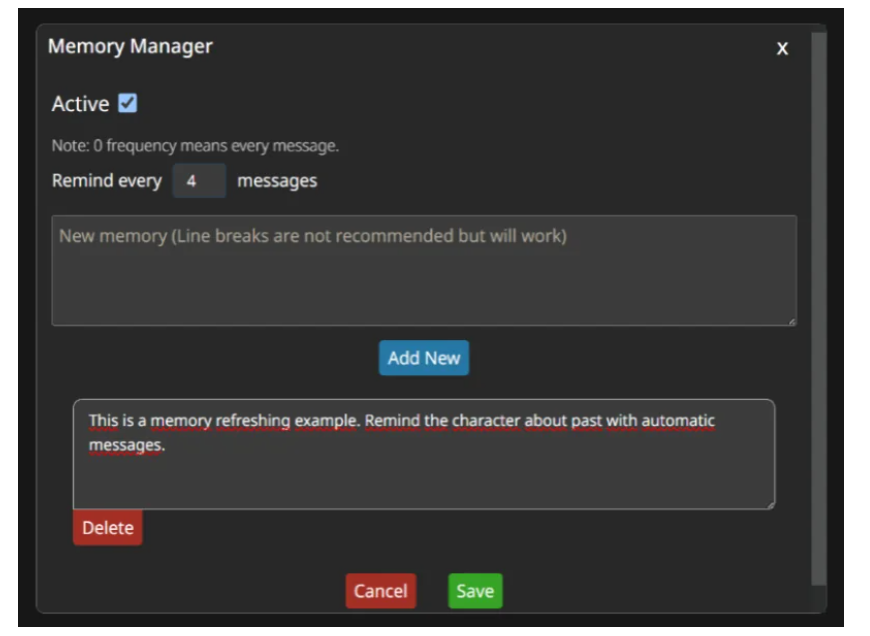

Memory Tensor's AI agents analyze user habits, learning styles, and routines to deliver context-aware reminders and suggestions. Their technology integrates with your digital ecosystem (emails, calendars, notes) to create a truly proactive assistant that evolves with you.

Enterprise Knowledge Management

Companies like MedTech Vendors now use memory extension to search through 500,000+ supplier documents in a single context window. This enables comprehensive analysis of lengthy reports, legal documents, and research papers without manual segmentation.

Creative & Development Workflows

Writers using tools like Flow leverage infinite context to maintain consistent character development and plot arcs across book-length projects. Developers benefit from AI that remembers entire codebases, not just snippets.

Frequently Asked Questions

1. Why can't AI models natively handle unlimited memory?

Three fundamental constraints prevent it: computational costs that increase quadratically with context length, hardware limitations (especially GPU memory), and positional encoding challenges, where models struggle to understand sequences beyond their training length.
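The quadratic term is easy to see with a little arithmetic. In standard self-attention every token is scored against every other token, so n tokens produce n × n pairs per head and layer. The helper names below are made up for the example, and the sketch ignores optimizations such as sparse or linear attention.

```javascript
// Query-key pairs scored by full self-attention over n tokens (per head, per layer).
function attentionPairs(n) {
  return n * n;
}

// How many times more pairs a longer context needs compared with a shorter one.
function relativeCost(fromTokens, toTokens) {
  return attentionPairs(toTokens) / attentionPairs(fromTokens);
}

// A context 16x longer (8K -> 128K tokens) needs 256x the attention pairs.
const growth = relativeCost(8000, 128000);
console.log(growth);
```

This is why simply making the window bigger gets expensive fast, and why retrieval-style memory layers that keep the active window small are attractive.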

2. How does memory extension impact privacy?

Solutions implement multiple safeguards: end-to-end encryption, local data processing options (Supermemory's self-hosting), and granular user controls over what's stored. Memory Tensor specifically emphasizes a "privacy-first architecture" as core to their design.

3. Can memory extension work with any AI model?

Most solutions (like Supermemory) work with any OpenAI-compatible API including GPT-4, Claude 3, and local models. MemoryOS requires integration but supports major frameworks. Hardware solutions require compatible GPU systems.

The Future of Memory-Enhanced AI

We're witnessing a paradigm shift from isolated AI interactions to continuous cognitive partnerships. As Memory Tensor's approach demonstrates, the next frontier isn't just bigger models but smarter memory systems that transform how AI retains and applies knowledge.

Researchers predict this evolution will trigger a "Memory Scaling Law" era, where improvements in memory management deliver cognitive gains comparable to model size increases. MemoryOS creators envision AI that dynamically constructs personalized user information networks, evolving from reactive tools to proactive cognitive partners.

The Memory-Intelligent AI Landscape

By 2026, expect:

Mainstream adoption of memory extension in enterprise AI

Standard "memory management" layers in AI architectures

Specialized AI roles focused on memory optimization and curation

New evaluation metrics for long-term context coherence

The C AI Memory Extension revolution ultimately transforms artificial intelligence from forgetful novices into contextually aware partners that grow with every interaction—ushering in truly personalized AI that understands not just what you're asking today, but everything you've ever discussed.