Understanding xAI Ani Virtual Companion: What Makes It Unique?

The xAI Ani virtual companion has rapidly become a standout in the tech space. Designed for conversation, comfort, and even advice, Ani leverages advanced generative AI to simulate authentic interactions. Yet, with this power comes the responsibility of content moderation. The EU's current investigation focuses on whether Ani's safeguards are robust enough to shield young users from unsuitable or harmful content. This is more than just news; it is a signal to the entire industry.

Why Content Moderation Is Essential for Virtual Companions

Let us be honest: virtual companions are only as trustworthy as their moderation systems. Here is why content moderation is absolutely vital:

Protecting minors: Young users are naturally curious, but they need protection from explicit, manipulative, or predatory content.

Building user trust: A safe environment keeps users engaged and positive about the platform.

Legal compliance: Inadequate moderation can result in heavy fines or outright bans, especially under EU regulations.

Brand reputation: A single incident can damage a brand's image overnight.

How xAI Ani Handles Content Moderation

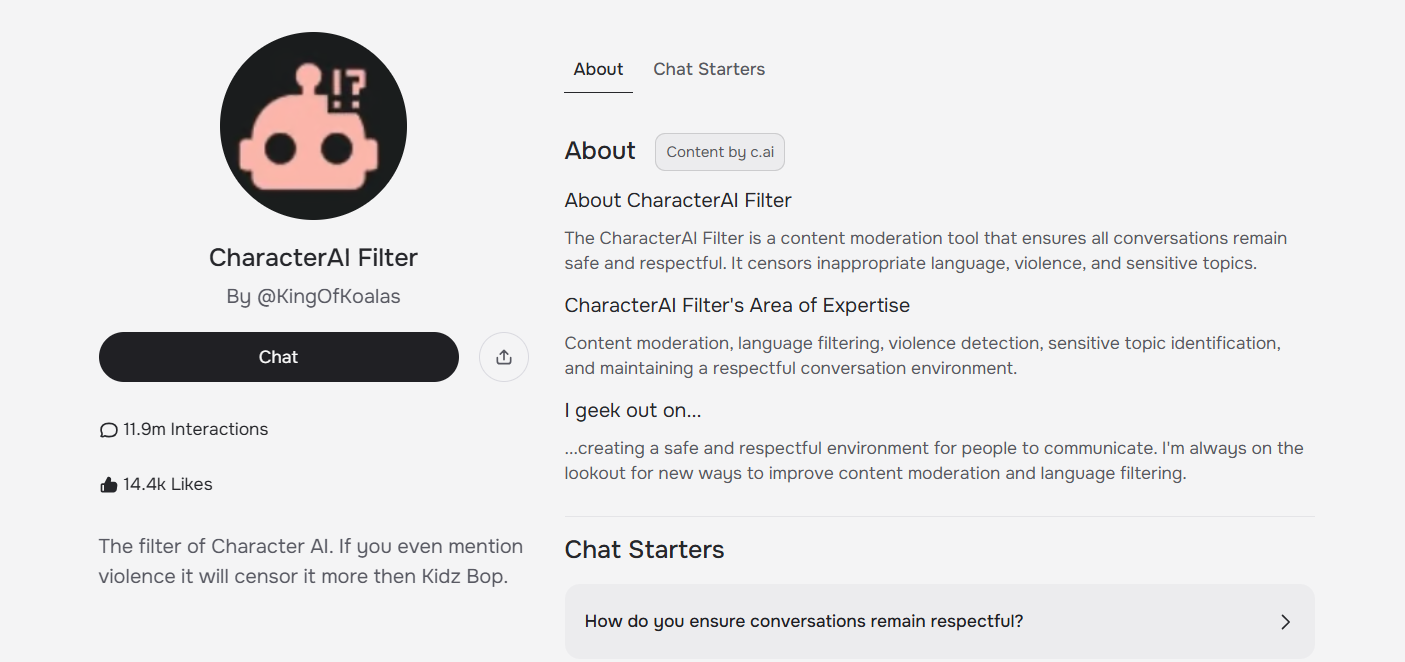

What is the real story behind xAI Ani virtual companion content moderation? Here are the key steps and challenges:

1. Real-time Filtering

Ani uses state-of-the-art AI models to scan chats in real time, flagging risky words, phrases, or behaviours. While this is the first defence, it is not infallible: AI can sometimes miss context or sarcasm.

2. Human Review

When the system is unsure, flagged conversations go to human moderators. This step captures subtle issues but can slow down response times and is tough to scale.

3. Age Verification

Ani requests age confirmation at sign-up and uses algorithms to detect underage users. However, determined youngsters can sometimes bypass these checks.
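How the first three steps might fit together can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of how Ani actually works: the term weights, the thresholds, the age cut-off of 18, and the names `risk_score` and `moderate` are all hypothetical, and a real system would use trained classifiers rather than keyword matching.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


# Hypothetical term weights on a 0-100 scale (illustrative only).
RISK_TERMS = {"explicit": 60, "secret": 30, "address": 30}


@dataclass(frozen=True)
class Thresholds:
    review: int  # at or above this score, a human moderator reviews the message
    block: int   # at or above this score, the message is blocked outright


# Assumed policy: accounts with a declared age under 18 get stricter limits.
ADULT = Thresholds(review=50, block=90)
MINOR = Thresholds(review=30, block=60)


def risk_score(message: str) -> int:
    """Sum the weights of risky terms found in the message, capped at 100."""
    words = message.lower().split()
    return min(100, sum(RISK_TERMS.get(word, 0) for word in words))


def moderate(message: str, declared_age: int) -> Decision:
    """Route a message to allow, human review, or block based on its score."""
    limits = MINOR if declared_age < 18 else ADULT
    score = risk_score(message)
    if score >= limits.block:
        return Decision.BLOCK
    if score >= limits.review:
        return Decision.HUMAN_REVIEW
    return Decision.ALLOW


if __name__ == "__main__":
    print(moderate("keep this secret", declared_age=15))
    print(moderate("keep this secret", declared_age=30))
```

The same message is escalated to a human for the younger account but allowed for the adult one, which shows why the declared age matters to every later step. The sketch also inherits the weakness noted above: a keyword score cannot see context or sarcasm, so the human review band exists to catch what the filter misjudges.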

4. Parental Controls

Parents can set boundaries on chat duration, restrict topics, or block features. This is effective only if parents are proactive and informed.

5. User Reporting

Every user can report inappropriate or uncomfortable chats. Reports are reviewed by moderators, but the process depends on users taking the initiative to flag issues.

None of these steps is perfect on its own, which is why the EU is pushing for tighter standards and more transparency.

Challenges and Controversies in Moderation

No system is flawless. Here are the main challenges facing xAI Ani virtual companion content moderation now:

AI bias: The AI can overreact or overlook subtle harm.

Privacy concerns: Increased moderation often means more data collection, raising privacy debates.

Freedom vs. safety: Balancing open conversation with protection is complex.

International laws: What is acceptable in one region may be illegal in another.

Practical Tips: How Users and Parents Can Stay Safe

Whether you are using Ani or any virtual companion, here are actionable steps:

Set up and regularly review parental controls.

Discuss online safety and digital boundaries openly with your children.

Examine the app's privacy and moderation settings—do not just click 'agree'.

Encourage children to report anything uncomfortable or suspicious.

Stay informed about new features or policy updates from the platform.