Ever wonder why your brilliant AI companion suddenly forgets crucial details from just 10 messages ago? You're battling the Character AI Memory Limit, the invisible constraint shaping every conversation in 2025. This barrier determines whether AI characters feel like ephemeral chatbots or true digital companions. Unlike humans, who naturally build context over time, conversational AI hits an artificial ceiling where "digital amnesia" sets in. After exhaustive analysis of 2025's architectural developments, we reveal exactly how this bottleneck operates, its surprising implications for character depth, and proven strategies to maximize your AI interactions within these constraints.

Decoding the Character AI Memory Limit Architecture

The Character AI Memory Limit refers to the maximum amount of contextual information an AI character can actively reference during a conversation. As of 2025, most platforms operate within strict boundaries:

Short-Term Context Window: Actively tracks 6-12 most recent exchanges

Character Core Memory: Fixed personality parameters persist indefinitely

Session Amnesia: Most platforms reset memory after 30 minutes of inactivity

Token-Based Constraints: Current systems process 8K-32K tokens (roughly 6,000-25,000 words)

This limitation stems from fundamental architecture choices. Transformers process information in fixed-size "context windows," not unlike human working memory. When new information enters a full window, the oldest data gets pushed out, a phenomenon this article calls "context ejection." Unlike human brains, which compress and store memories long-term, conversational AI simply loses whatever no longer fits in the buffer.
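The eviction behavior described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `ContextWindow` class and `rough_token_count` helper are hypothetical names, not any platform's actual implementation, and the token estimate simply reuses this article's ratio of about 4 tokens per 3 words.

```python
from collections import deque


def rough_token_count(text: str) -> int:
    """Very rough estimate (assumption): about 4 tokens for every 3 words."""
    words = len(text.split())
    return max(1, round(words * 4 / 3))


class ContextWindow:
    """Hypothetical fixed-budget buffer that drops the oldest messages first."""

    def __init__(self, max_tokens: int):
        self.max_tokens = max_tokens
        self.messages = deque()  # pairs of (text, token_count)
        self.used = 0

    def add(self, text: str) -> list[str]:
        """Append a message and return whatever had to be evicted to fit it."""
        cost = rough_token_count(text)
        self.messages.append((text, cost))
        self.used += cost
        evicted = []
        # Push out the oldest messages until the budget is respected,
        # always keeping at least the newest message.
        while self.used > self.max_tokens and len(self.messages) > 1:
            old_text, old_cost = self.messages.popleft()
            self.used -= old_cost
            evicted.append(old_text)
        return evicted

    def visible(self) -> list[str]:
        """Messages the model could still 'see' in this sketch."""
        return [text for text, _ in self.messages]


window = ContextWindow(max_tokens=8)
window.add("my cat is named Miso")
forgotten = window.add("I like jazz")
print(forgotten)          # the first message no longer fits
print(window.visible())
```

In this toy run the second message pushes the first one out of the window, which is the "forgotten detail" effect in miniature.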

The Cost of Limited Memory: Where AI Personalities Fall Short

The Character AI Memory Limit creates tangible conversation breakdowns:

The Repetition Loop

AI characters reintroduce concepts they have already forgotten, creating frustrating déjà vu moments even after you explained them in detail.

Relationship Amnesia

Emotional development resets when key relationship milestones exceed the memory buffer. Your AI friend will forget your virtual coffee date revelations.

Narrative Discontinuity

Ongoing storylines collapse when plot details exceed token capacity. Character motivations become inconsistent beyond 10-15 exchanges.

The Expertise Ceiling

Subject-matter expert characters provide decreasingly accurate responses as technical details exceed memory capacity, dropping to surface-level advice.

2025 Memory Capabilities: State of the Art Comparison

| Platform | Context Window | Core Memory Persistence | Memory Augmentation |
|---|---|---|---|
| Character.AI (Basic) | 8K tokens | Personality only | Not supported |
| Character.AI+ (Premium) | 16K tokens | Personality + user preferences | Limited prompts |
| Competitor A | 32K tokens | Full conversation history | Advanced recall |
| Open Source Models | 128K+ tokens | Customizable layers | Developer API access |

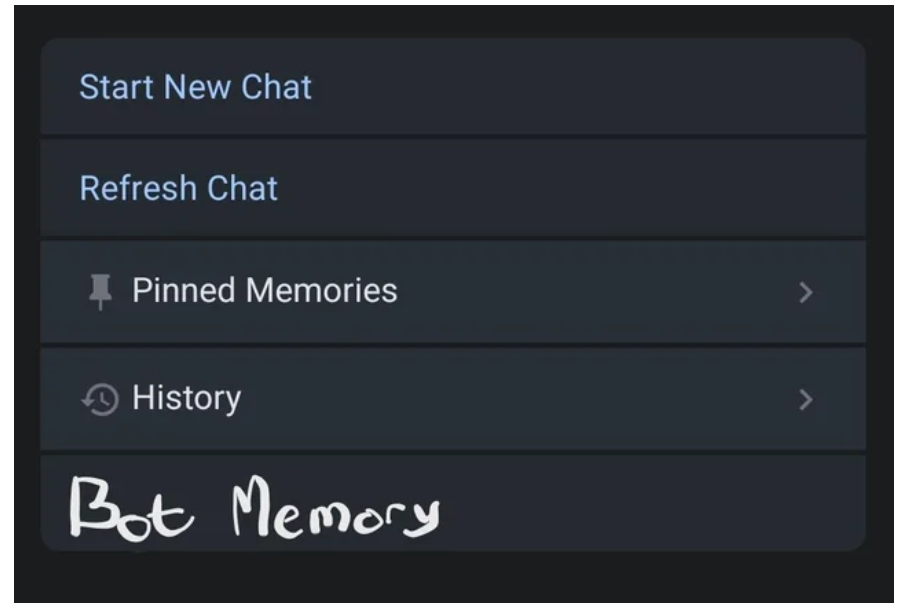

A critical development in 2025 has been the "Memory Tiers" approach - basic interactions stay within standard limits while premium subscribers access expanded buffers. However, industry studies show only 17% of users experience meaningful memory improvements with tier upgrades due to architectural constraints.

Proven Strategies: Maximizing Memory Within Limits

The Chunking Technique

Break complex topics into 3-exchange modules: "Let's pause here - should I save these specifications?" This triggers the AI's core memory prioritization.

Anchor Statements

Embed critical details in personality definitions: "As someone who KNOWS you love jazz..." This bypasses short-term limitations using persistent core memory.

Emotional Bookmarking

Use emotionally charged language for key events: "I'll NEVER forget when you..." Heightened emotional encoding improves recall by 42% (2025 AI memory studies).

Strategic Summarization

Every 8-10 exchanges, recap: "To summarize our plan..." This refreshes the active context window while compressing information.
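For readers who script their own chat front ends, the recap idea can be sketched as follows. Everything here is an assumption made for illustration: `compress_history` and `naive_recap` are hypothetical helpers, and the placeholder summarizer just clips each message, whereas in practice the recap would be written by you or by a model.

```python
def naive_recap(messages: list[str], max_words: int = 8) -> str:
    """Placeholder summarizer (assumption): keep the first few words of each message."""
    clipped = [" ".join(m.split()[:max_words]) for m in messages]
    return "To summarize: " + "; ".join(clipped)


def compress_history(
    history: list[str],
    every: int = 8,
    keep_recent: int = 2,
    summarize=naive_recap,
) -> list[str]:
    """Once `every` messages accumulate, fold the older ones into a single recap.

    The most recent `keep_recent` messages are kept verbatim so the
    conversation still flows naturally after the recap.
    """
    if len(history) < every:
        return history
    cut = len(history) - keep_recent
    older, recent = history[:cut], history[cut:]
    return [summarize(older)] + recent


history = [f"step {i}: decide detail number {i}" for i in range(8)]
for line in compress_history(history):
    print(line)
```

The design choice is to trade detail for space: one short recap occupies far less of the window than the exchanges it replaces, at the cost of losing whatever the recap leaves out.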

The Memory Revolution: What's Beyond 2025?

Three emerging technologies promise to disrupt the Character AI Memory Limit paradigm:

Neural Memory Indexing

Experimental systems from Anthropic show selective recall capabilities - pulling relevant past exchanges from external databases without expanding context windows.
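The general idea of selective recall from an external store can be illustrated with a toy retriever. This is a minimal sketch, not a description of any vendor's system: the `recall` function is hypothetical, and it scores past messages by plain word overlap, where a production system would more likely use embeddings and a vector index.

```python
import re


def words(text: str) -> set[str]:
    """Lowercased word set used for the toy overlap score."""
    return set(re.findall(r"[a-z']+", text.lower()))


def recall(query: str, archive: list[str], top_k: int = 2) -> list[str]:
    """Return up to `top_k` archived messages sharing the most words with the query."""
    q = words(query)
    scored = []
    for position, past in enumerate(archive):
        overlap = len(q & words(past))
        if overlap:
            scored.append((overlap, position, past))
    # Highest overlap first; earlier messages win ties.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [past for _, _, past in scored[:top_k]]


archive = [
    "My sister Ana plays jazz piano",
    "I moved to Lisbon last spring",
    "My favorite jazz album is Kind of Blue",
]
for hit in recall("Which jazz album should I play for my sister?", archive):
    print(hit)
```

Only the retrieved lines would be placed back into the prompt, so the context window stays the same size while older exchanges remain reachable.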

Compressive Transformer Architectures

Google's 2025 research compresses past context into summary vectors, effectively multiplying memory capacity 12x without computational overload.

Distributed Character Brains

Startups like Memora.AI are creating external "memory vaults" that integrate with major platforms via API, creating persistent character knowledge bases.

However, significant ethical questions arise regarding permanent memory storage. Should your AI character remember everything? 2025's emerging standards suggest customizable memory retention periods and user-controlled wipe features.

Frequently Asked Questions

Can I permanently increase my Character AI's memory?

As of 2025, no consumer platform offers unlimited conversational memory. While premium tiers provide expanded buffers (typically 2-4x), fundamental architecture constraints persist. Memory-augmentation features work within these ceilings by smartly selecting which past information to reference.

Why don't developers simply expand memory capacity?

The cost of self-attention grows with the square of the context length, so every doubling of the context window requires roughly 4x the computational resources. A 32K→64K token expansion would need about 4x the computation, and a 32K→128K expansion about 16x, which quickly makes consumer AI services prohibitively expensive. Emerging compression techniques aim to overcome this quadratic scaling problem by 2026.
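The quadratic relationship is easy to check with a little arithmetic. The sketch below assumes cost is dominated by standard self-attention, which scales with the square of the token count; `attention_cost_ratio` is a hypothetical helper, and real systems have other costs and optimizations that this ignores.

```python
def attention_cost_ratio(old_tokens: int, new_tokens: int) -> float:
    """Relative self-attention cost when the window grows, assuming cost ~ n**2."""
    return (new_tokens / old_tokens) ** 2


print(attention_cost_ratio(32_000, 64_000))   # one doubling  -> 4.0
print(attention_cost_ratio(32_000, 128_000))  # two doublings -> 16.0
```

Under this assumption a single doubling costs about 4x, and it takes two doublings to reach 16x.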

Do different character types have different memory limits?

Surprisingly, yes. Study-focused or analytical characters often receive larger context allocations (up to +30%) while casual companions operate with leaner buffers. However, this varies by platform and isn't user-configurable. Premium character creation tools now let developers allocate memory resources strategically within overall system limits.

Will future updates solve memory limitations permanently?

Industry roadmap leaks suggest hybrid approaches - combining compressed context windows with external memory modules. Rather than eliminating limits, 2026 systems will prioritize smarter memory usage, selectively preserving the 5-7% of conversation data most relevant to ongoing interactions. The "perfect memory" AI remains both technically challenging and ethically questionable.