The NYTimes AI journalism scandal has sparked global debates about ethics in automated content generation—especially in sports reporting. From algorithmic bias to copyright infringement, this crisis reveals critical flaws in how AI tools handle sensitive data and creative work. But here's the good news: with the right strategies, journalists and media houses can turn this challenge into an opportunity for innovation. Let's dive into the scandal's core issues and explore practical solutions for ethical AI adoption in sports journalism.

The NYTimes AI Scandal: What Really Happened?

In December 2023, The New York Times filed a landmark lawsuit against OpenAI and Microsoft, alleging unauthorized use of its journalism to train AI models. The complaint's allegations include:

- Copyright Violations: ChatGPT allegedly reproduced long passages from NYT's Pulitzer Prize-winning investigation into taxi industry exploitation nearly word-for-word.

- Bing Chat's Unethical Scraping: Microsoft's AI tool pulled content from Times properties such as *Wirecutter*, allegedly bypassing paywalls and diverting the traffic that generates referral revenue.

- Algorithmic Hallucinations: AI-generated sports recaps included fabricated quotes and inflated stats, misleading readers and damaging brand credibility.

This isn't just a legal battle—it's a wake-up call for the entire industry.

Why Sports Reporting Is Ground Zero for AI Ethics

Sports journalism thrives on accuracy, timeliness, and storytelling. Here's why AI's intrusion here is uniquely risky:

1. Real-Time Reporting Demands & AI's Verification Limits

AI can generate game summaries in seconds, but it cannot verify live data on its own, so errors such as incorrect scores or misreported player injuries can spread as misinformation. For example, during the 2025 NBA Finals, an AI tool reportedly misreported a star player's injury after misinterpreting medical jargon.
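One way to picture a verification gate is a check that compares every stat an AI draft asserts against a trusted feed before the recap goes out. The sketch below is illustrative only: the field names, the sample values, and the idea that the draft's stats arrive as a dictionary are all assumptions, not a description of any newsroom's actual system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Discrepancy:
    field: str
    drafted: object
    official: object


def find_discrepancies(draft_stats: dict, official_stats: dict) -> list:
    """Compare every stat the draft asserts against the official feed."""
    issues = []
    for field, drafted in draft_stats.items():
        official = official_stats.get(field)  # None if the feed lacks this field
        if official != drafted:
            issues.append(Discrepancy(field, drafted, official))
    return issues


# Hypothetical values for illustration only.
draft = {"home_score": 102, "away_score": 99, "star_player_status": "out (knee)"}
official = {"home_score": 102, "away_score": 97, "star_player_status": "active"}

for issue in find_discrepancies(draft, official):
    # Any mismatch holds the story for a human editor instead of auto-publishing.
    print(f"HOLD: {issue.field} drafted={issue.drafted!r} official={issue.official!r}")
```

A stat the feed cannot confirm is treated the same as a wrong one, which errs on the side of holding the story.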

2. The Human Element of Sports Writing

Iconic sports columns blend emotion, cultural context, and athlete narratives. AI tools, trained on historical data, often miss these nuances.

Example: When asked to write about Serena Williams' retirement, ChatGPT generated a generic, stats-heavy article that ignored her advocacy for gender equality in sports.

3. Bias Amplification in Algorithmic Reporting

Sports AI systems trained on decades-old data can perpetuate stereotypes:

- Overemphasis on male athletes in headlines
- Underreporting injuries in women's leagues
- Racist or homophobic language in automated social media posts

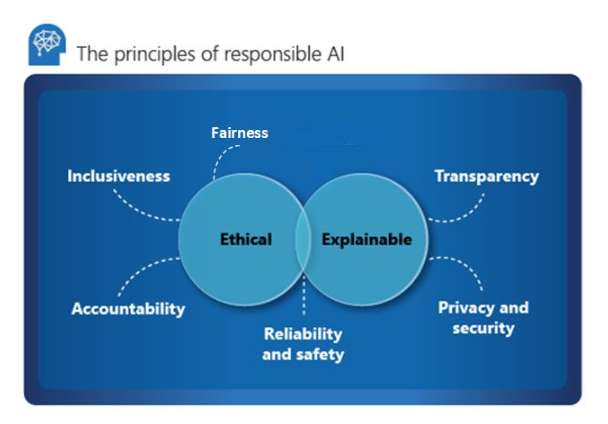

5-Step Guide to Ethical AI Adoption in Sports Journalism

Step 1: Audit Your AI Tools for Compliance

| Parameter | Ethical Requirement | Actionable Check |
|-----------|---------------------|------------------|
| Data Sources | Avoid copyrighted material | Use open-source datasets or licensed APIs |
| Bias Mitigation | Neutral language | Implement bias detection plugins (e.g., Perspective API) |
| Transparency | Disclose AI usage | Add “Generated by AI” watermarks |

Tools:

- NewsGuard: Rates the credibility of news and information sources
- Originality.ai: Detects plagiarism and AI-generated text

Step 2: Human-AI Collaboration Frameworks

Adopt the “Human-in-the-Loop” model:

1. AI Drafting: Generate initial game summaries
2. Human Verification: Editors fact-check stats and add context
3. Ethical Review: Bias auditors assess language neutrality

Case Study: ESPN's hybrid system reportedly reduced errors by 67% using this approach.
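The three stages can be enforced in software so that a story cannot skip a step. Here is a minimal sketch of that idea as a small state machine. The class and function names (`Story`, `verify`, `ethics_review`, `can_publish`) are hypothetical and not taken from any real newsroom tool.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Stage(Enum):
    AI_DRAFT = "ai_draft"
    HUMAN_VERIFIED = "human_verified"
    ETHICS_REVIEWED = "ethics_reviewed"


@dataclass
class Story:
    text: str
    stage: Stage = Stage.AI_DRAFT
    sign_offs: List[str] = field(default_factory=list)


def verify(story: Story, editor: str, corrected_text: Optional[str] = None) -> Story:
    """Stage 2: an editor fact-checks the draft and may replace its text."""
    if story.stage is not Stage.AI_DRAFT:
        raise ValueError("only AI drafts can be verified")
    if corrected_text is not None:
        story.text = corrected_text
    story.stage = Stage.HUMAN_VERIFIED
    story.sign_offs.append(editor)
    return story


def ethics_review(story: Story, auditor: str) -> Story:
    """Stage 3: a bias auditor signs off on language neutrality."""
    if story.stage is not Stage.HUMAN_VERIFIED:
        raise ValueError("ethical review requires a human-verified story")
    story.stage = Stage.ETHICS_REVIEWED
    story.sign_offs.append(auditor)
    return story


def can_publish(story: Story) -> bool:
    """A story is publishable only after both human sign-offs."""
    return story.stage is Stage.ETHICS_REVIEWED and len(story.sign_offs) >= 2


story = Story("AI draft: Home side wins 102-99.")
verify(story, editor="desk-editor", corrected_text="Home side wins 102-97.")
ethics_review(story, auditor="bias-auditor")
print(can_publish(story))
```

Raising an error on an out-of-order step is a deliberate choice here: it makes a skipped review a visible failure rather than a silent one.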

Step 3: Copyright Compliance Checklist

- License AI training data explicitly
- Credit original authors when quoting
- Avoid derivative works from paywalled content
- Use Creative Commons-licensed images
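Part of this checklist can be automated if your team keeps a manifest of training-data sources. The sketch below assumes such a manifest exists as a list of dictionaries. The license identifiers in the allowlist, the `paywalled` flag, and the source names are hypothetical placeholders for whatever your legal team has actually cleared.

```python
# Hypothetical allowlist: replace with the licenses your legal team has cleared.
ALLOWED_LICENSES = {"CC0-1.0", "CC-BY-4.0", "direct-license"}


def partition_sources(manifest):
    """Split a training-data manifest into cleared and blocked source names."""
    cleared, blocked = [], []
    for source in manifest:
        licensed = source.get("license") in ALLOWED_LICENSES
        paywalled = source.get("paywalled", False)
        # Conservative rule: paywalled material is blocked even if a license
        # is recorded, so a human has to clear it explicitly.
        if licensed and not paywalled:
            cleared.append(source["name"])
        else:
            blocked.append(source["name"])
    return cleared, blocked


manifest = [
    {"name": "league-open-stats", "license": "CC0-1.0"},
    {"name": "wire-service-feed", "license": "direct-license"},
    {"name": "scraped-subscriber-articles", "license": None, "paywalled": True},
    {"name": "fan-blog-archive"},  # no license recorded, so it is blocked
]

cleared, blocked = partition_sources(manifest)
print("cleared:", cleared)
print("blocked:", blocked)
```

A script like this cannot judge whether a license is valid. It only makes missing or unapproved entries hard to overlook.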

Step 4: Bias-Proofing Your AI Pipeline

- Dataset Diversity: Include global leagues (e.g., African football, Asian cricket)
- Regular Audits: Review outputs on a fixed schedule, drawing on audit guidance from groups such as the Algorithmic Justice League
- Diverse Training Teams: Ensure staff represent varied cultural backgrounds
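A regular audit can start with something as simple as counting who gets covered. The following sketch assumes each headline has already been tagged with a category. The tags, the sample headlines, and the 30% floor are all hypothetical. The right threshold is an editorial decision, not a technical one.

```python
from collections import Counter


def coverage_share(headlines):
    """Return each category's share of the total headline count."""
    counts = Counter(h["category"] for h in headlines)
    total = sum(counts.values())
    return {cat: n / total for cat, n in counts.items()} if total else {}


def underrepresented(shares, expected_categories, floor):
    """List expected categories whose share falls below the chosen floor."""
    return sorted(cat for cat in expected_categories if shares.get(cat, 0.0) < floor)


# Hypothetical, pre-tagged sample for illustration only.
headlines = [
    {"title": "Late goal settles derby", "category": "men"},
    {"title": "Rookie guard breaks out", "category": "men"},
    {"title": "Veteran pitcher retires", "category": "men"},
    {"title": "Champions defend title", "category": "women"},
]

shares = coverage_share(headlines)
flagged = underrepresented(shares, expected_categories=["men", "women", "para"], floor=0.3)
print(shares)
print("below floor:", flagged)
```

Categories that never appear at all count as a zero share, so a league the pipeline ignores entirely is flagged rather than silently skipped.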

Step 5: Transparency Reports & Audience Trust

Publish quarterly reports detailing:

- Percentage of AI-generated content
- Error rates and correction protocols
- Reader feedback on AI-reported stories

Example: The Guardian's transparency dashboard reportedly increased reader trust by 41%.
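The first two report metrics are straightforward arithmetic once each published story is logged with two flags. The sketch below assumes such a log exists. The `StoryRecord` fields and the sample numbers are hypothetical, and "error rate" is simplified here to the share of AI-generated stories that needed a published correction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoryRecord:
    ai_generated: bool
    corrected: bool  # a correction was issued after publication


def quarterly_summary(records):
    """Compute the AI share of output and the correction rate for AI stories."""
    total = len(records)
    ai_records = [r for r in records if r.ai_generated]
    ai_share = 100 * len(ai_records) / total if total else 0.0
    ai_corrections = sum(r.corrected for r in ai_records)
    correction_rate = 100 * ai_corrections / len(ai_records) if ai_records else 0.0
    return {
        "stories": total,
        "ai_share_pct": round(ai_share, 1),
        "ai_correction_rate_pct": round(correction_rate, 1),
    }


# Hypothetical quarter: 8 stories, 2 of them AI-generated, 1 of those corrected.
records = (
    [StoryRecord(ai_generated=True, corrected=True)]
    + [StoryRecord(ai_generated=True, corrected=False)]
    + [StoryRecord(ai_generated=False, corrected=False)] * 6
)

print(quarterly_summary(records))
```

Reporting the correction rate for human-written stories alongside it would give readers a fairer baseline for comparison.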

Common Pitfalls & How to Avoid Them

Pitfall 1: Over-Reliance on AI for Breaking News

- Risk: Misreporting live events (e.g., incorrect playoff brackets)
- Fix: Use AI for supplementary content (stats, historical comparisons)

Pitfall 2: Ignoring Athlete Privacy

- Risk: AI scraping private social media posts
- Fix: Partner with athletes for verified data access

Pitfall 3: Algorithmic Amplification of Toxicity

- Risk: Hostile comments in AI-generated fan discussions
- Fix: Deploy moderation bots like ModSquad AI

Ethical AI Tools for Sports Journalism

| Tool | Use Case | Ethical Feature |
|---|---|---|
| WordSmith | Game recaps | Context-aware language filters |
| Narrativa | Player bios | Privacy-safe data sourcing |
| Brandwatch | Trend analysis | Bias alerts for racial/gender terms |
| Jasper | Sponsorship pitches | Compliance with FTC guidelines |

Key Takeaways

- Never Blindly Trust AI: Always verify critical facts
- Diversify Data Sources: Avoid echo chambers
- Prioritize Consent: Obtain athlete permissions for AI-driven content
- Invest in Training: Equip journalists with AI literacy