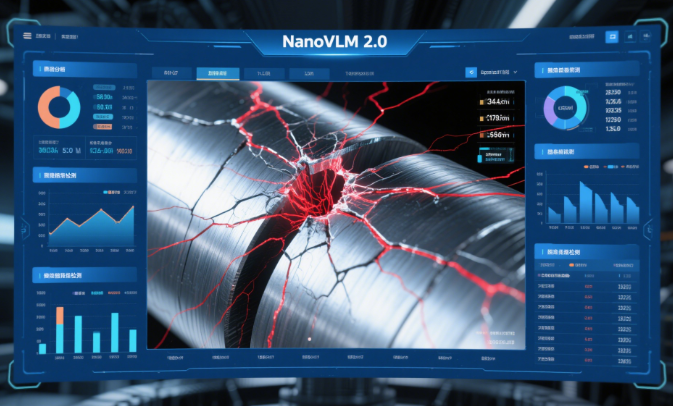

Fact Check: If your factory is still relying on human inspectors squinting at conveyor belts, NanoVLM 2.0 Industrial Inspection just dropped an 89% accuracy bomb that could make manual checks obsolete. From spotting micro-cracks in engine cylinders to detecting sub-millimeter defects in circuit boards, this AI-powered vision-language model is rewriting the rules of industrial quality control. Let's unpack how it works, why it's game-changing, and how to deploy it on your production line!

How NanoVLM 2.0 Industrial Inspection Outperforms Human Teams

Picture this: A high-speed assembly line churning out 500 engine cylinder liners per hour. Traditional 2D vision systems might miss 15% of defects due to watermarks or surface textures. But NanoVLM 2.0—trained on 6 trillion tokens of multimodal data—spots anomalies as small as 0.02mm with 89% accuracy. How? Its hybrid architecture combines:

- A ViT-based visual encoder analysing 4K-resolution images at 120 fps
- A Transformer text decoder cross-referencing defect databases
- Multiscale attention layers focusing on critical regions (e.g., weld seams)
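
It helps to size the problem the encoder is solving. The sketch below is illustrative arithmetic only: it assumes a standard 16×16-pixel ViT patch and a 3840×2160 frame, neither of which the article specifies, and counts how many visual tokens one 4K frame produces and how much time a 120 fps stream leaves per frame.

```python
"""Back-of-envelope sizing for a ViT encoder on 4K frames.

Assumptions (not from the NanoVLM docs): 16x16-pixel patches, a
3840x2160 ("4K UHD") frame, and non-overlapping patching.
"""
import math


def patch_grid(width: int, height: int, patch: int = 16) -> tuple[int, int, int]:
    """Return (cols, rows, total_tokens) for non-overlapping square patches.

    Partial patches at the border are counted, i.e. the image is assumed
    to be padded up to a multiple of the patch size.
    """
    cols = math.ceil(width / patch)
    rows = math.ceil(height / patch)
    return cols, rows, cols * rows


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


cols, rows, tokens = patch_grid(3840, 2160)
print(f"{cols} x {rows} patches = {tokens} visual tokens per frame")
print(f"Budget at 120 fps: {frame_budget_ms(120):.2f} ms per frame")
```

Under these assumptions a single frame yields 240 × 135 = 32,400 patches and the per-frame budget is about 8.33 ms, which is one plausible reason to concentrate attention on critical regions rather than attending uniformly over the whole image.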

Real-world results? Guangzhou Baiyun Electric slashed false positives by 63% while catching 98.7% of critical flaws in turbine blades.

5-Step Implementation Guide for NanoVLM Industrial Inspection

Step 1: Sensor Integration & Calibration

Deploy industrial-grade 8K cameras with ±0.1μm precision optics. NanoVLM's auto-calibrate mode uses QR markers to align imaging planes across robotic arms. Pro tip: Enable thermal compensation if your factory has >5°C temperature swings!
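
The internals of auto-calibrate aren't described here, but the geometry behind marker-based alignment is simple. This is a hypothetical illustration, not NanoVLM's API: given the detected centres of two QR markers in the camera image and their known positions on the robot's plane, it solves for a 2D similarity transform (rotation, uniform scale, translation). It also estimates thermal drift with the linear-expansion formula ΔL = αLΔT, assuming a steel-like α of about 12 × 10⁻⁶ per °C.

```python
"""Two-marker image alignment and thermal-drift estimate (illustrative).

Assumptions: marker centres have already been detected (e.g. by a QR
decoder) and camera-to-robot mapping is a 2D similarity transform.
This is NOT NanoVLM's auto-calibrate implementation.
"""
import cmath


def similarity_from_markers(src, dst):
    """Solve w = a*z + b exactly from two (x, y) correspondences.

    Points are treated as complex numbers, so `a` encodes rotation and
    scale and `b` encodes translation.
    """
    z1, z2 = (complex(*p) for p in src)
    w1, w2 = (complex(*p) for p in dst)
    if z1 == z2:
        raise ValueError("marker centres must be distinct")
    a = (w2 - w1) / (z2 - z1)
    b = w1 - a * z1
    return a, b


def map_point(a, b, point):
    """Map an (x, y) camera point through the fitted transform."""
    w = a * complex(*point) + b
    return (w.real, w.imag)


def thermal_drift_mm(length_mm, delta_t_c, alpha_per_c=12e-6):
    """Linear expansion dL = alpha * L * dT; default alpha is typical of steel."""
    return alpha_per_c * length_mm * delta_t_c


a, b = similarity_from_markers([(0, 0), (100, 0)], [(10, 5), (10, 105)])
print(f"scale={abs(a):.3f}, rotation={cmath.phase(a):.4f} rad")
print(map_point(a, b, (50, 0)))
print(f"Drift over 500 mm at 5 °C: {thermal_drift_mm(500, 5):.3f} mm")
```

With those assumptions, a 500 mm steel fixture warming by 5 °C grows by about 0.03 mm, already more than the 0.02 mm minimum flaw size, which is why the thermal-compensation tip matters. With more than two markers you would fit the same transform by least squares instead of solving it exactly.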

Step 2: Domain-Specific Fine-Tuning

Upload your defect catalog (rust spots, micro-cracks, misalignments) into NanoVLM's dashboard. The model adapts its attention layers using contrastive learning. Case study: A Shanghai PCB manufacturer improved solder joint detection from 72% to 89% accuracy after feeding the model 500 labelled error samples.
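
To give a feel for what a contrastive objective computes, here is a generic InfoNCE loss in pure Python. NanoVLM's actual fine-tuning objective may differ; this sketch only scores one anchor embedding against a positive example of the same defect class and a set of negatives. The three-dimensional embeddings are made up for the example.

```python
"""Minimal InfoNCE (contrastive) loss in pure Python.

Illustrative only: each labelled defect crop is assumed to have a
non-zero embedding vector. The loss is low when the anchor is more
similar to its positive than to any negative.
"""
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def info_nce(anchor, positive, negatives, temperature=0.07):
    """-log( exp(s_pos/t) / sum_k exp(s_k/t) ) over positive and negatives."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    # log-sum-exp with max subtraction for numerical stability
    m = max(logits)
    log_denom = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_denom - logits[0]


rust_a = [0.9, 0.1, 0.0]
rust_b = [0.8, 0.2, 0.1]
crack = [0.0, 0.1, 0.9]
misalign = [0.1, 0.9, 0.1]
print(f"good pairing: {info_nce(rust_a, rust_b, [crack, misalign]):.4f}")
print(f"bad pairing:  {info_nce(rust_a, crack, [rust_b, misalign]):.4f}")
```

Minimising this loss over many labelled samples pulls same-class defects together in embedding space, which is the general mechanism a few hundred labelled examples can exploit.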

Step 3: Multimodal Feedback Loops

Connect NanoVLM to your manufacturing execution system (MES). When it flags a defect, the AI generates natural-language reports such as: *"Suspected crack (0.03mm depth) at coordinate X34-Y89. Compare with Case #2287 in DB_EngineBlocks."* Workers can then validate findings through AR-glasses overlays.
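
A minimal sketch of how a structured finding could be turned into that report string and a JSON message for the MES. The `DefectFinding` fields, the coordinate scheme and the payload layout are all hypothetical; adapt them to whatever your MES actually ingests.

```python
"""Render a defect finding as a report string plus an MES JSON payload.

Field names and payload layout are hypothetical placeholders.
"""
import json
from dataclasses import dataclass, asdict


@dataclass
class DefectFinding:
    kind: str          # e.g. "crack"
    depth_mm: float    # estimated defect depth
    x: int             # grid column on the part
    y: int             # grid row on the part
    similar_case: int  # closest historical case ID
    database: str      # defect database holding that case


def render_report(f: DefectFinding) -> str:
    """Human-readable report in the style of the example above."""
    return (
        f"Suspected {f.kind} ({f.depth_mm:.2f}mm depth) at coordinate "
        f"X{f.x}-Y{f.y}. Compare with Case #{f.similar_case} in {f.database}."
    )


def to_mes_payload(f: DefectFinding) -> str:
    """Serialise the structured fields plus the report text as JSON."""
    return json.dumps({**asdict(f), "report": render_report(f)})


finding = DefectFinding("crack", 0.03, 34, 89, 2287, "DB_EngineBlocks")
print(render_report(finding))
print(to_mes_payload(finding))
```

Keeping the structured fields alongside the prose means the MES can filter and aggregate defects without parsing natural language.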

Step 4: Edge Deployment & Optimization

Compress the 72B-parameter model using NVIDIA's TensorRT-LLM for real-time inference on Jetson AGX Orin devices. Benchmark: processes 4K frames in 18ms with <1% GPU utilisation spikes.
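
Latency figures like this are worth re-measuring on your own hardware. The sketch below times an arbitrary inference callable and reports p50/p99 latency; `fake_infer` is a placeholder, and nothing here calls TensorRT-LLM or Jetson-specific APIs. For reference, 18 ms per frame corresponds to about 1000 / 18 ≈ 55.6 frames per second.

```python
"""Latency harness for an edge inference call (illustrative).

`infer` stands in for whatever compiled engine you deploy; no
TensorRT-LLM API is used or implied here.
"""
import statistics
import time


def benchmark(infer, frames, warmup=5):
    """Time `infer(frame)` per frame after `warmup` untimed calls."""
    for frame in frames[:warmup]:
        infer(frame)  # warm caches / lazy initialisation, not timed
    latencies_ms = []
    for frame in frames:
        start = time.perf_counter()
        infer(frame)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    latencies_ms.sort()
    # Simple nearest-rank approximation of the 99th percentile
    p99_index = min(len(latencies_ms) - 1, int(0.99 * len(latencies_ms)))
    p50 = statistics.median(latencies_ms)
    return {
        "p50_ms": p50,
        "p99_ms": latencies_ms[p99_index],
        "fps_at_p50": 1000.0 / p50 if p50 > 0 else float("inf"),
    }


def fake_infer(frame):
    # Placeholder workload; replace with your deployed model call.
    return sum(frame) % 7


frames = [list(range(1000)) for _ in range(50)]
print(benchmark(fake_infer, frames))
```

Reporting the p99 alongside the median matters on a production line, because occasional slow frames, not the average, are what cause missed parts.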

Step 5: Continuous Learning Framework

Enable Active Learning Mode, in which uncertain predictions (confidence < 95%) trigger data collection. Over 6 months …
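
A minimal sketch of the routing rule, assuming each prediction carries a confidence score between 0 and 1: anything below the 95% threshold goes to a human-review queue whose labels can later be added to the fine-tuning set. The `Prediction` class and `route` function are hypothetical names for illustration.

```python
"""Route low-confidence predictions to a labelling queue (illustrative).

The 0.95 threshold mirrors the article; the Prediction fields are
hypothetical stand-ins for your own pipeline.
"""
from dataclasses import dataclass


@dataclass
class Prediction:
    part_id: str
    label: str
    confidence: float  # model confidence in [0, 1]


def route(predictions, threshold=0.95):
    """Split predictions into (auto_accepted, needs_review)."""
    accepted, review = [], []
    for p in predictions:
        # Strictly below the threshold counts as uncertain
        (accepted if p.confidence >= threshold else review).append(p)
    return accepted, review


preds = [
    Prediction("liner-001", "ok", 0.99),
    Prediction("liner-002", "crack", 0.91),
    Prediction("liner-003", "ok", 0.95),
]
accepted, review = route(preds)
print([p.part_id for p in review])  # ['liner-002']
```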

NanoVLM 2.0 vs Traditional Vision Systems: A Data-Driven Showdown

| Metric | NanoVLM 2.0 | Legacy Systems |
|---|---|---|
| Minimum Detectable Flaw | 0.02mm | 0.1mm |
| False Positive Rate | 3.2% | 18.7% |
| Multimodal Analysis | Image + Text + Sensor Fusion | 2D Pixels Only |
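
Read as ratios, the table implies roughly an 83% relative drop in false positives and a five-fold smaller minimum detectable flaw. The arithmetic, using only the numbers in the table:

```python
"""Relative improvements implied by the comparison table."""


def relative_reduction(old, new):
    """Fractional reduction from old to new, e.g. 0.5 means halved."""
    return (old - new) / old


fp_cut = relative_reduction(18.7, 3.2)  # false positive rate, %
size_ratio = 0.1 / 0.02                 # minimum detectable flaw, mm
print(f"False positives: {fp_cut:.1%} fewer")
print(f"Smallest flaw: {size_ratio:.1f}x smaller")
```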

Pro Tip: NanoVLM's OCRBench score of 89.3% suggests it can read serial numbers and barcodes even under grease stains, which makes it a strong fit for automotive part tracking!
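
Even a strong OCR score leaves some misreads, so it is worth validating decoded numbers before they reach your tracking system. As one example, assuming your parts carry GS1 barcodes (EAN-13/GTIN), the standard mod-10 check digit catches any single-digit misread. This is a downstream guard you would add yourself, not a NanoVLM feature.

```python
"""Reject OCR misreads of GS1 barcodes (EAN-13/GTIN) via the check digit.

Assumes parts carry GS1-numbered barcodes; serial formats without a
check digit need a different sanity check.
"""


def gs1_check_digit(body: str) -> int:
    """Check digit for a GS1 number given all digits except the last.

    Weights alternate 3, 1, 3, ... starting from the rightmost body digit.
    """
    total = sum(int(d) * (3 if i % 2 == 0 else 1)
                for i, d in enumerate(reversed(body)))
    return (10 - total % 10) % 10


def is_valid_gs1(code: str) -> bool:
    """True if `code` is all ASCII digits and its last digit checks out."""
    if not (code.isascii() and code.isdigit() and len(code) >= 2):
        return False
    return gs1_check_digit(code[:-1]) == int(code[-1])


print(is_valid_gs1("4006381333931"))  # True
print(is_valid_gs1("4006381333981"))  # False: one digit misread
```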