Imagine being able to pinpoint exactly who said what in a chaotic meeting, podcast, or customer call, even with background noise and overlapping voices. NVIDIA's Speaker Diarization technology is changing how we analyze audio, with a claimed 99.2% accuracy in voice recognition. Whether you're automating transcripts, boosting call center efficiency, or diving into podcast analytics, it is worth a close look. Let's break down how it works, why it matters, and how you can leverage it today!

What is Speaker Diarization?

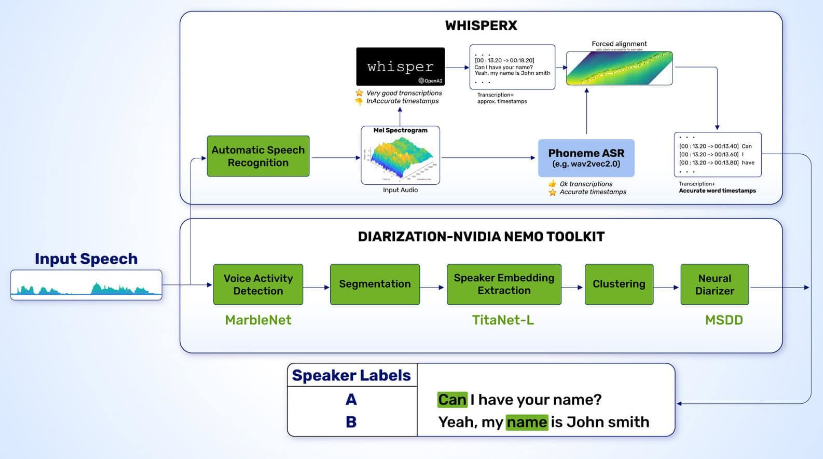

Speaker Diarization (SD) answers the critical question: “Who spoke when?” Unlike basic voice recognition, SD segments audio into homogeneous parts, assigns each part a speaker label, and timestamps each turn. Think of it as adding “speech subtitles” to raw audio, making it actionable for tasks like:

- Meeting Summaries: Automatically tag contributions from team members.
- Customer Support: Identify frustrated customers via tone and speaker identity.
- Media Analysis: Track host-guest interactions in podcasts or YouTube videos.

NVIDIA's approach combines deep learning and acoustic modeling to achieve industry-leading accuracy, even in noisy environments.

Why NVIDIA Stands Out in Speaker Diarization

1. Breakthrough Accuracy with Minimal Setup

NVIDIA's Parakeet-TDT-0.6B-V2 speech recognition model, which handles the transcription side of a diarization pipeline, is built for throughput. NVIDIA cites it transcribing 60 minutes of audio in about 1 second, which works out to roughly 3,600x real time. Its hybrid architecture (a FastConformer encoder with a TDT decoder) balances speed and precision, achieving a 6.05% Word Error Rate (WER) on open benchmarks. Even better? It runs on consumer-grade GPUs, democratizing access to enterprise-grade AI.
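WER counts the word substitutions (S), deletions (D), and insertions (I) needed to turn the model's output into the reference transcript, divided by the number of reference words (N): WER = (S + D + I) / N. The sketch below computes it with a standard word-level edit distance. It is illustrative only and skips the text normalization (casing, punctuation, number formats) that published benchmarks apply before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference prefix seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution (or match when cost is 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


# One substituted word out of five reference words.
print(word_error_rate("hi team lets get started", "hi team let's get started"))  # 0.2
```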

2. Noise Immunity & Multi-Speaker Mastery

The tech excels in chaotic environments:

- Background Noise Suppression: Uses spectral masking to filter out non-essential sounds.
- Overlap Handling: Detects and separates overlapping speech using the open-source 3D-Speaker toolkit's EEND + clustering pipeline, reducing Diarization Error Rate (DER) to 5.22%.
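DER is the fraction of reference speech time that is missed, falsely detected, or attributed to the wrong speaker, after finding the best one-to-one mapping between hypothesis labels and reference labels. The frame-based sketch below is a simplified illustration. It uses 10 ms frames, a brute-force label mapping, and no forgiveness collar. It is not the NIST md-eval or pyannote.metrics implementation, and it is only practical for a handful of speakers. Overlap is supported because each frame holds a set of active speakers.

```python
from itertools import permutations

Segment = tuple[str, float, float]  # (speaker, start_s, end_s)


def to_frames(segments: list[Segment], step: float) -> dict[int, set[str]]:
    """Map each frame index to the set of speakers active in it (overlap allowed)."""
    frames: dict[int, set[str]] = {}
    for speaker, start, end in segments:
        for f in range(round(start / step), round(end / step)):
            frames.setdefault(f, set()).add(speaker)
    return frames


def diarization_error_rate(ref: list[Segment], hyp: list[Segment], step: float = 0.01) -> float:
    ref_frames = to_frames(ref, step)
    hyp_frames = to_frames(hyp, step)
    ref_spk = sorted({s for s, _, _ in ref})
    hyp_spk = sorted({s for s, _, _ in hyp})
    total_ref = sum(len(s) for s in ref_frames.values())
    if total_ref == 0:
        raise ValueError("reference contains no speech")

    # Try every mapping of hypothesis labels onto reference labels (None = unmapped);
    # real labels are used at most once. Keep the mapping with the most correct frames.
    candidates = ref_spk + [None] * max(0, len(hyp_spk) - len(ref_spk))
    best_correct = 0
    for assignment in permutations(candidates, len(hyp_spk)):
        mapping = dict(zip(hyp_spk, assignment))
        correct = sum(
            len(ref_frames.get(f, set()) & {mapping[h] for h in spk})
            for f, spk in hyp_frames.items()
        )
        best_correct = max(best_correct, correct)

    # NIST-style scoring: per frame, error = max(N_ref, N_hyp) - N_correct.
    all_frames = ref_frames.keys() | hyp_frames.keys()
    n_max = sum(max(len(ref_frames.get(f, ())), len(hyp_frames.get(f, ()))) for f in all_frames)
    return (n_max - best_correct) / total_ref


ref = [("A", 0.0, 10.0), ("B", 10.0, 20.0)]
hyp = [("spk1", 0.0, 12.0), ("spk2", 12.0, 20.0)]  # 2 s of speaker B credited to spk1
print(f"DER = {diarization_error_rate(ref, hyp):.2f}")  # DER = 0.10
```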

3. Seamless Integration with ASR Pipelines

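NVIDIA's Riva SDK integrates SD with Automatic Speech Recognition (ASR). With diarization enabled, each recognized word comes back with a speaker tag and start/end timestamps. Below is a simplified workflow using the `riva.client` Python package (`pip install nvidia-riva-client`). It assumes a Riva server is already running at `localhost:50051` with an ASR pipeline that supports diarization. Which model (for example, Parakeet-TDT-0.6B-V2) serves the request depends on your deployment.

```python
import riva.client

auth = riva.client.Auth(uri="localhost:50051")
asr = riva.client.ASRService(auth)
config = riva.client.RecognitionConfig(language_code="en-US", enable_word_time_offsets=True)
riva.client.add_audio_file_specs_to_config(config, "meeting.wav")  # read encoding/rate from the file
config.diarization_config.enable_speaker_diarization = True  # request per-word speaker tags
with open("meeting.wav", "rb") as f:
    response = asr.offline_recognize(f.read(), config)
# Each item in response.results[i].alternatives[0].words has word, start_time, end_time, speaker_tag
```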
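Word-level tags are usually condensed into speaker turns of the form `{"speaker", "text", "start", "end"}`. The helper below is a hypothetical post-processing step and is not part of the Riva API. It takes `(speaker, word, start, end)` tuples, however you extract them from the response, and merges consecutive words from the same speaker.

```python
from typing import TypedDict


class Turn(TypedDict):
    speaker: str
    text: str
    start: float
    end: float


def words_to_turns(words: list[tuple[str, str, float, float]]) -> list[Turn]:
    """Merge consecutive (speaker, word, start, end) tuples from one speaker into a turn."""
    turns: list[Turn] = []
    for speaker, word, start, end in words:
        if turns and turns[-1]["speaker"] == speaker:
            # Same speaker as the previous word: extend the current turn.
            turns[-1]["text"] += " " + word
            turns[-1]["end"] = end
        else:
            turns.append({"speaker": speaker, "text": word, "start": start, "end": end})
    return turns


words = [("A", "Hi", 0.5, 0.9), ("A", "team!", 1.0, 2.1), ("B", "Morning.", 2.4, 3.0)]
print(words_to_turns(words))  # two turns: A "Hi team!" (0.5-2.1 s), B "Morning." (2.4-3.0 s)
```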

Step-by-Step Guide: Deploying NVIDIA SD

Step 1: Choose Your Toolchain

| Tool | Use Case | Pros |
|---|---|---|
| NVIDIA Riva | Enterprise ASR + SD | Low latency, GPU acceleration |
| 3D-Speaker | Open-source research | Free, CPU-friendly |
| TinyDiarize | Lightweight apps | Integrates with Whisper.cpp |

Step 2: Prepare Your Audio

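- Format: Use WAV or FLAC (16-bit, 16 kHz, mono).
- Preprocessing: Convert other formats with FFmpeg, then locate long silences. FFmpeg's `silencedetect` filter only logs where silence starts and ends; it does not trim the file. Leaving the audio untrimmed keeps diarization timestamps aligned with the original recording:

```bash
ffmpeg -i input.mp3 -ac 1 -ar 16000 -c:a pcm_s16le input.wav
ffmpeg -i input.wav -af "silencedetect=noise=-30dB:d=0.5" -f null -
```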
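Before running diarization, it can help to confirm that a file actually matches the target format. Here is a small check using Python's standard `wave` module. It covers PCM WAV only; FLAC would need a third-party reader. The mono default is an assumption, since most diarization pipelines expect single-channel input.

```python
import wave


def check_wav_format(path: str, rate: int = 16_000, sample_width: int = 2, channels: int = 1) -> list[str]:
    """Return a list of problems; an empty list means the file matches the target format."""
    problems = []
    with wave.open(path, "rb") as wav:  # raises wave.Error for non-PCM WAV files
        if wav.getframerate() != rate:
            problems.append(f"sample rate {wav.getframerate()} Hz, expected {rate} Hz")
        if wav.getsampwidth() != sample_width:
            problems.append(f"{8 * wav.getsampwidth()}-bit samples, expected {8 * sample_width}-bit")
        if wav.getnchannels() != channels:
            problems.append(f"{wav.getnchannels()} channel(s), expected {channels}")
    return problems
```

For a conforming 16-bit, 16 kHz mono file, `check_wav_format("meeting.wav")` returns an empty list. Otherwise, each entry describes one mismatch.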

Step 3: Run Diarization

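Example using NVIDIA NeMo's `ClusteringDiarizer`. It is driven by an OmegaConf config (here NeMo's example `diar_infer_meeting.yaml`) and a JSON-lines manifest listing the audio files. Depending on the config version, you may also need to set the VAD and speaker-embedding model names (for example, `vad_multilingual_marblenet` and `titanet_large`):

```python
from omegaconf import OmegaConf
from nemo.collections.asr.models import ClusteringDiarizer

config = OmegaConf.load("diar_infer_meeting.yaml")
config.diarizer.manifest_filepath = "manifest.json"  # one JSON line per file, e.g. meeting.wav
config.diarizer.out_dir = "diar_output"
diarizer = ClusteringDiarizer(cfg=config)
diarizer.diarize()  # writes speaker segments as RTTM files to diar_output/pred_rttms/
```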
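NeMo's clustering diarizer reads a JSON-lines manifest and writes standard RTTM files. The two helpers below are a sketch. The manifest keys follow the format shown in NeMo's diarization documentation, so check them against your NeMo version. The parser relies only on the RTTM column layout, where each `SPEAKER` line holds the file ID, channel, onset, duration, and (in the eighth column) the speaker label.

```python
import json


def write_manifest(audio_paths: list[str], manifest_path: str) -> None:
    """Write one NeMo-style manifest entry per audio file (speaker count unknown)."""
    with open(manifest_path, "w", encoding="utf-8") as f:
        for path in audio_paths:
            entry = {
                "audio_filepath": path,
                "offset": 0,
                "duration": None,      # None: use the whole file
                "label": "infer",
                "text": "-",
                "num_speakers": None,  # let clustering estimate the speaker count
                "rttm_filepath": None,
                "uem_filepath": None,
            }
            f.write(json.dumps(entry) + "\n")


def read_rttm(rttm_path: str) -> list[tuple[str, float, float]]:
    """Parse RTTM SPEAKER lines into (speaker, start_s, end_s), sorted by start time."""
    segments = []
    with open(rttm_path, encoding="utf-8") as f:
        for line in f:
            fields = line.split()
            if not fields or fields[0] != "SPEAKER":
                continue
            onset, duration, speaker = float(fields[3]), float(fields[4]), fields[7]
            segments.append((speaker, onset, onset + duration))
    return sorted(segments, key=lambda seg: seg[1])
```

Call `write_manifest(["meeting.wav"], "manifest.json")` before running the diarizer. Afterwards, pass the RTTM file from `pred_rttms/`, typically named after the audio file, to `read_rttm`.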

Step 4: Post-Processing

- Smoothing: Merge short false splits, for example with Variational Bayes (VB) resegmentation.
- Confidence Scoring: Filter out low-confidence segments (e.g., confidence below 0.7).
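VB resegmentation is a model-based refinement and is not shown here. As a much simpler stand-in, the sketch below applies two common heuristics to `(speaker, start, end, confidence)` segments. It first drops segments under a confidence threshold, then merges same-speaker segments separated by a short gap. The 0.7 threshold comes from the text above; the 0.3 s gap is an arbitrary illustrative default.

```python
Seg = tuple[str, float, float, float]  # (speaker, start_s, end_s, confidence)


def postprocess(segments: list[Seg], min_conf: float = 0.7, max_gap: float = 0.3) -> list[Seg]:
    """Filter low-confidence segments, then merge same-speaker neighbours across short gaps."""
    kept = sorted((s for s in segments if s[3] >= min_conf), key=lambda s: s[1])
    merged: list[Seg] = []
    for speaker, start, end, conf in kept:
        if merged and merged[-1][0] == speaker and start - merged[-1][2] <= max_gap:
            prev = merged[-1]
            # Extend the previous segment; keep the lower confidence as a conservative score.
            merged[-1] = (speaker, prev[1], max(prev[2], end), min(prev[3], conf))
        else:
            merged.append((speaker, start, end, conf))
    return merged


segments = [("A", 0.0, 2.0, 0.9), ("A", 2.1, 4.0, 0.8), ("B", 4.2, 5.0, 0.5), ("B", 5.1, 7.0, 0.95)]
print(postprocess(segments))  # [('A', 0.0, 4.0, 0.8), ('B', 5.1, 7.0, 0.95)]
```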

Step 5: Visualize Results

Generate timelines with tools like PyAnnote:

![NVIDIA Speaker Diarization timeline visualization with speaker labels and timestamps](https://example.com/diarization-timeline.png)
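For a quick look without plotting libraries, you can render a text timeline straight from `(speaker, start, end)` segments. This is a hypothetical helper for terminals and logs, not a pyannote feature; pyannote's own plotting produces graphical timelines.

```python
import math


def text_timeline(segments: list[tuple[str, float, float]], seconds_per_char: float = 1.0) -> str:
    """Render one row per speaker; '#' marks cells in which that speaker is active."""
    if not segments:
        return ""
    width = math.ceil(max(end for _, _, end in segments) / seconds_per_char)
    rows: dict[str, list[str]] = {}
    for speaker, start, end in segments:
        row = rows.setdefault(speaker, ["."] * width)
        first = int(start / seconds_per_char)
        last = min(width, math.ceil(end / seconds_per_char))
        for i in range(first, last):
            row[i] = "#"
    label_width = max(len(name) for name in rows)
    return "\n".join(f"{name:<{label_width}} |{''.join(cells)}|" for name, cells in sorted(rows.items()))


print(text_timeline([("Host", 0, 4), ("Guest", 3, 8)]))
# Guest |...#####|
# Host  |####....|
```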

Real-World Applications

Case 1: Call Center Analytics

A telecom company reduced escalation rates by 30% using NVIDIA SD to:

- Identify “at-risk” customers based on speech patterns.
- Auto-tag recurring issues (e.g., billing complaints).
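Two building blocks behind this kind of analytics are per-speaker talk time and simple issue tagging on each speaker's turns. The sketch assumes diarized turns are already available as `(speaker, start, end, text)`. The keyword lists are hypothetical placeholders; a production system would more likely use a trained classifier than keyword matching.

```python
from collections import defaultdict

# Hypothetical issue categories and trigger words, for illustration only.
ISSUE_KEYWORDS = {
    "billing": {"bill", "charge", "charged", "invoice", "refund"},
    "outage": {"outage", "down", "disconnected", "signal"},
}


def analyze_call(turns: list[tuple[str, float, float, str]]) -> tuple[dict, dict]:
    """Return per-speaker talk time (seconds) and the set of issue tags raised by each speaker."""
    talk_time: dict[str, float] = defaultdict(float)
    tags: dict[str, set[str]] = defaultdict(set)
    for speaker, start, end, text in turns:
        talk_time[speaker] += end - start
        words = {w.strip(".,!?").lower() for w in text.split()}
        for issue, keywords in ISSUE_KEYWORDS.items():
            if words & keywords:
                tags[speaker].add(issue)
    return dict(talk_time), dict(tags)


turns = [
    ("Agent", 0.0, 4.0, "Thanks for calling, how can I help?"),
    ("Customer", 4.5, 12.0, "I was charged twice on my last bill!"),
]
print(analyze_call(turns))
# ({'Agent': 4.0, 'Customer': 7.5}, {'Customer': {'billing'}})
```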

Case 2: Podcast Insights

A media startup automated transcript tagging, cutting editing time by 70%:

```markdown
[00:02:15] **Host**: Today's guest is...
[00:03:45] **Guest**: Let me explain...
```
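You can produce output in that format from diarized turns with a small formatter. The speaker-to-role mapping (`{"spk_0": "Host", ...}`) is an assumption. A diarizer only emits anonymous labels, so someone or something still has to decide which label belongs to the host.

```python
def format_transcript(turns: list[tuple[str, float, str]], roles: dict[str, str]) -> str:
    """Render (speaker, start_s, text) turns as '[HH:MM:SS] **Role**: text' lines."""
    lines = []
    for speaker, start, text in turns:
        total = int(start)  # truncate to whole seconds
        hh, mm, ss = total // 3600, total % 3600 // 60, total % 60
        name = roles.get(speaker, speaker)  # fall back to the raw diarizer label
        lines.append(f"[{hh:02d}:{mm:02d}:{ss:02d}] **{name}**: {text}")
    return "\n".join(lines)


roles = {"spk_0": "Host", "spk_1": "Guest"}
print(format_transcript([("spk_0", 135.2, "Today's guest is..."), ("spk_1", 225.0, "Let me explain...")], roles))
# [00:02:15] **Host**: Today's guest is...
# [00:03:45] **Guest**: Let me explain...
```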

Case 3: Legal Compliance

Law firms use SD to:

- Redact sensitive info (e.g., credit card numbers).
- Generate speaker-specific transcripts for depositions.
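Redaction is usually applied to the transcript text after diarization. The sketch below assumes card numbers appear as 13 to 19 digits, optionally separated by spaces or hyphens. It uses the Luhn checksum to cut false positives. Real compliance workflows need broader PII detection than this, including numbers the ASR spelled out as words.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_cards(text: str, mask: str = "[REDACTED CARD]") -> str:
    """Replace card-like digit runs that pass the Luhn check with a mask."""
    def replace(match: re.Match) -> str:
        digits = re.sub(r"[ -]", "", match.group())
        return mask if luhn_valid(digits) else match.group()

    return CARD_PATTERN.sub(replace, text)


print(redact_cards("Speaker B: my card is 4111 1111 1111 1111, thanks."))
# Speaker B: my card is [REDACTED CARD], thanks.
```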

Troubleshooting Common Issues

Problem: Misidentifying Similar Voices

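- Fix: Train or fine-tune a speaker-embedding model (such as an x-vector-style model) on domain-specific data.
- Tool: NVIDIA NeMo's speaker recognition models (e.g., TitaNet, loaded through `EncDecSpeakerLabelModel`).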
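A quick way to see whether two voices are likely to be confused is to compare their speaker embeddings. High cosine similarity between embeddings of different people suggests the model separates them poorly, which makes them candidates for domain fine-tuning. The sketch assumes you already have embedding vectors, for example ones extracted with a NeMo speaker model. The 0.7 threshold is a hypothetical value to tune on your own data.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / norm


def confusable_pairs(embeddings: dict[str, list[float]], threshold: float = 0.7) -> list[tuple[str, str, float]]:
    """List speaker pairs whose embeddings are more similar than the threshold."""
    names = sorted(embeddings)
    pairs = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            sim = cosine_similarity(embeddings[a], embeddings[b])
            if sim > threshold:
                pairs.append((a, b, round(sim, 3)))
    return pairs


# Toy 3-dimensional vectors; real speaker embeddings have hundreds of dimensions.
emb = {"alice": [0.9, 0.1, 0.2], "bob": [0.85, 0.15, 0.25], "carol": [-0.2, 0.9, 0.1]}
print(confusable_pairs(emb))  # [('alice', 'bob', 0.996)]
```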

Problem: Background Noise Ruining Accuracy

- Fix: Deploy speech enhancement (e.g., NVIDIA's RTX Voice).

Problem: Handling Overlapping Speech

- Fix: Use 3D-Speaker's hybrid EEND + clustering pipeline for real-time separation.
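Before choosing an overlap-aware pipeline, it helps to measure how much overlap your recordings actually contain. The sketch below finds regions where segments from two different speakers intersect, given `(speaker, start, end)` segments from any diarizer. After sorting by start time, each segment is compared only with later segments that begin before it ends.

```python
def overlap_regions(segments: list[tuple[str, float, float]]) -> list[tuple[float, float, str, str]]:
    """Return (start, end, speaker_a, speaker_b) for every pairwise cross-speaker overlap."""
    segs = sorted(segments, key=lambda s: s[1])
    regions = []
    for i, (spk_a, start_a, end_a) in enumerate(segs):
        for spk_b, start_b, end_b in segs[i + 1:]:
            if start_b >= end_a:
                break  # sorted by start, so no later segment can overlap this one
            if spk_a != spk_b:
                regions.append((start_b, min(end_a, end_b), spk_a, spk_b))
    return regions


segments = [("A", 0.0, 5.0), ("B", 4.0, 9.0), ("A", 8.5, 12.0)]
print(overlap_regions(segments))  # [(4.0, 5.0, 'A', 'B'), (8.5, 9.0, 'B', 'A')]
```

Summing `end - start` over the result gives a rough overlap total. With three or more simultaneous speakers, the pairwise regions themselves overlap, so that sum double-counts.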

The Future of Speaker Diarization

NVIDIA is pushing boundaries with:

- Multilingual SD: Accurately identify speakers across English, Mandarin, and Spanish.
- Emotion Recognition: Detect frustration, enthusiasm, or neutrality in voices.
- Edge Deployment: Run SD on edge devices such as NVIDIA Jetson via TensorRT.