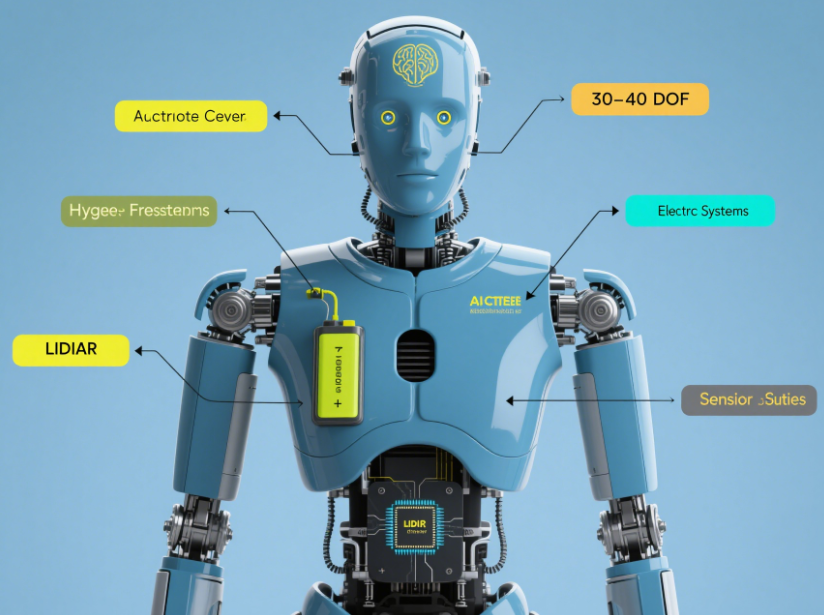

Artificial Intelligence (AI) has come a long way in recent years. When it comes to real artificial intelligence robots, one question that frequently comes up is whether they can mimic human facial expressions and gestures. The ability of these robots to simulate human-like actions is a testament to the advancements in robotics and AI technologies. But how real are these human-like behaviors, and are we truly at a stage where robots can mimic us accurately?

What Are Real Artificial Intelligence Robots?

Real artificial intelligence robots, or real AI robots, are machines designed to perform tasks that typically require human intelligence. These include recognizing emotional cues, responding to sensory input, and making decisions in real time. But can these robots replicate complex human behaviors, such as facial expressions and gestures? Let's take a closer look at their capabilities.

The Role of Facial Expressions in AI Robots

Facial expressions are an essential part of human communication, reflecting emotions such as happiness, sadness, anger, and surprise. Realistic humanoid robots, including realistic female AI robots, are now equipped with facial recognition technology and artificial muscles (actuators beneath a flexible skin) to simulate these expressions. For example, Hanson Robotics' Sophia has been programmed with a broad range of facial movements that imitate common human expressions.
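As a rough illustration of how a simulated expression might be represented in software, the sketch below treats each expression as a set of target positions for a few facial actuators and blends between two of them over time. The actuator names, the values, and the `blend` and `transition` helpers are all hypothetical; this is not Hanson Robotics' software, only a minimal example of the idea.

```python
# Hypothetical actuator targets: 0.0 = relaxed, 1.0 = fully engaged.
EXPRESSIONS = {
    "neutral":  {"brow_raise": 0.0, "mouth_corner_lift": 0.0, "jaw_open": 0.0},
    "happy":    {"brow_raise": 0.2, "mouth_corner_lift": 0.9, "jaw_open": 0.1},
    "surprise": {"brow_raise": 1.0, "mouth_corner_lift": 0.1, "jaw_open": 0.8},
}


def blend(start, end, t):
    """Linearly interpolate between two named expressions; t is clamped to [0, 1]."""
    t = max(0.0, min(1.0, t))
    a, b = EXPRESSIONS[start], EXPRESSIONS[end]
    return {name: a[name] + (b[name] - a[name]) * t for name in a}


def transition(start, end, steps):
    """Return the poses for a gradual transition, including both endpoints."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return [blend(start, end, i / steps) for i in range(steps + 1)]


if __name__ == "__main__":
    for pose in transition("neutral", "happy", 4):
        print(pose)
```

Stepping through intermediate poses instead of jumping straight to the target is one simple way to avoid abrupt movements, although linear blending alone would still look more mechanical than a human face.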

Gesture Recognition in AI Robots

Gestures, too, play a significant role in human communication. AI robots are increasingly able to recognize and respond to gestures, thanks to machine learning and computer vision. Producing natural movement is a separate challenge. “Atlas” by Boston Dynamics, one of the most agile humanoid robots, can perform highly complex tasks like balancing on one leg and parkour-like movements, but these are feats of balance and locomotion rather than communicative gestures. When robots do gesture, their movements are often more mechanical and less fluid than human gestures.
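To make the recognition side more concrete, here is a toy sketch of gesture classification by nearest-template matching. It assumes a vision model has already reduced a person's movement to three normalized features (hand height relative to the shoulder, hand distance from the body, and hand speed). The feature set, the template values, and the `classify_gesture` function are invented for illustration; real systems typically learn these patterns from large amounts of data.

```python
import math

# Hypothetical gesture templates:
# (hand height relative to shoulder, hand distance from body, hand speed)
TEMPLATES = {
    "wave":  (0.8, 0.5, 0.9),
    "point": (0.3, 0.9, 0.1),
    "rest":  (-0.6, 0.1, 0.0),
}


def classify_gesture(features, max_distance=0.5):
    """Return the label of the closest template, or 'unknown' if none is close enough."""
    best_label, best_dist = "unknown", max_distance
    for label, template in TEMPLATES.items():
        dist = math.dist(features, template)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


if __name__ == "__main__":
    print(classify_gesture((0.75, 0.45, 0.85)))
    print(classify_gesture((0.0, 0.5, 0.5)))
```

The `max_distance` cutoff lets the robot decline to react when a movement does not resemble any known gesture, which is usually safer than forcing a guess.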

Can Real Artificial Intelligence Robots Truly Mimic Human Expressions and Gestures?

While current AI robots can mimic some facial expressions and gestures, there are still limitations. Continued investment in AI robots has driven significant improvements in their ability to replicate these actions, but a gap remains between their artificial mimicry and the nuanced, spontaneous expressions of a human. For instance, Sophia has been criticized for slightly stiff or unnatural movements, despite her technological advancements.

Despite these challenges, research in robotics, artificial intelligence, and real-time systems is progressing, and robots are getting better at learning from their interactions with humans. However, perfecting the simulation of facial expressions and gestures requires more advanced machine learning algorithms that allow AI to understand and predict human emotional states with greater precision.

What Are the Costs of AI Robots with Facial Recognition Capabilities?

The cost of AI robots varies with the technology used. Basic AI robots may cost a few thousand dollars, while more advanced versions, those capable of complex facial expressions and gestures, can cost $50,000 or more. Research groups at universities and other institutions, such as chairs of robotics, artificial intelligence, and real-time systems, continue to study ways to reduce these costs while improving functionality.

Key Examples of Real Artificial Intelligence Robots

There are numerous real-life examples of artificial intelligence robots that mimic human or animal behaviors. Some of the most notable ones include:

Sophia by Hanson Robotics – A humanoid robot known for her lifelike facial expressions and conversations.

ASIMO by Honda – Known for its smooth movements and ability to interact with humans through gestures.

Spot by Boston Dynamics – A quadruped robot capable of navigating various environments while mimicking animal-like movements.

What Is the Best AI Robot for Mimicking Human Gestures?

Among the many real AI robots available, the one that stands out for mimicking human expression is Sophia by Hanson Robotics, although her strength lies more in facial expressions than in body gestures. Sophia is reported to display over 60 different facial expressions and can hold conversations, making her one of the best-known real artificial intelligence robots in the world to show such sophisticated behavior.

Frequently Asked Questions

1. What are artificially intelligent robots?

Artificially intelligent robots are machines that use AI technologies like machine learning, computer vision, and speech recognition to perform tasks that require human-like intelligence, including mimicking human behaviors like facial expressions and gestures.

2. How much does it cost to build an AI robot?

The cost of AI robots can vary significantly depending on their complexity. Basic robots can cost under $10,000, while high-end robots like Sophia can exceed $50,000 due to their advanced features like facial recognition and real-time decision-making capabilities.

3. Can AI robots make real-time decisions like humans?

Yes, real artificial intelligence robots can make real-time decisions using advanced algorithms and machine learning models. However, their decision-making is often less flexible than that of humans, as it is based on pre-programmed responses and patterns learned from data.
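The reliance on pre-programmed responses can be pictured with a very small sketch. It assumes a perception module has already produced a detected emotion and a confidence score; the response names, the threshold, and the `choose_response` function are hypothetical and do not describe any particular robot.

```python
# Hypothetical scripted responses keyed by the emotion the robot detects.
RESPONSES = {
    "happy": "smile",
    "sad": "show_concern",
    "angry": "stay_neutral",
}


def choose_response(detected_emotion, confidence, threshold=0.6):
    """Pick a scripted response, falling back to a neutral one when unsure."""
    if confidence < threshold:
        return "stay_neutral"  # too uncertain to react
    # Emotions outside the table also get the neutral fallback.
    return RESPONSES.get(detected_emotion, "stay_neutral")


if __name__ == "__main__":
    print(choose_response("happy", 0.9))
    print(choose_response("confused", 0.9))
```

A lookup like this runs almost instantly, which is what makes real-time reactions possible, but it also shows the limitation: anything outside the table gets the same fallback behavior.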

Conclusion

Real artificial intelligence robots have made significant strides in mimicking human facial expressions and gestures, but their actions are not yet indistinguishable from those of humans. The technology behind these robots continues to improve, and in the near future we may see more sophisticated robots that move and express themselves in a more human-like way.