The AI-generated romance film Future Lovers reached 100 million views within 72 hours, demonstrating that artificial intelligence can evoke genuine human emotion. Shanghai's DeepFrame Studios used neural rendering and emotional vector mapping to create cinema-grade performances at 23% of a traditional film budget, marking a watershed moment for AI cinema.

1. Technical Innovations Behind Synthetic Performance

EmotionGAN 3.0 Architecture

DeepFrame's proprietary system analyzed 4,700 hours of Oscar-winning performances to generate micro-expressions with 89% emotional accuracy. A biometric feedback loop then adjusts the avatars in real time using audience heart-rate data collected from wearables.
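The article does not say how the feedback loop works internally. As a purely illustrative sketch, one simple way to close such a loop is a proportional controller: average the wearable heart-rate readings for each tick, then nudge an avatar's expression intensity toward a target level. The `AvatarState` class, the `adjust_intensity` and `run_feedback_loop` functions, the 85 bpm target, and the gain are all hypothetical and are not DeepFrame's method.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class AvatarState:
    # Hypothetical: expression intensity for the current beat, in [0, 1].
    intensity: float


def adjust_intensity(state: AvatarState, heart_rate_bpm: float,
                     target_bpm: float = 85.0, gain: float = 0.01) -> AvatarState:
    """One proportional-control step (illustrative parameters only).

    A mean heart rate below the target raises intensity; one above the
    target lowers it. The result is clamped to [0, 1].
    """
    error = target_bpm - heart_rate_bpm
    new_intensity = state.intensity + gain * error
    return AvatarState(intensity=min(1.0, max(0.0, new_intensity)))


def run_feedback_loop(initial: AvatarState,
                      readings_per_tick: list[list[float]]) -> list[float]:
    """Apply one control step per tick, using the mean of that tick's readings."""
    state = initial
    history = []
    for readings in readings_per_tick:
        state = adjust_intensity(state, mean(readings))
        history.append(state.intensity)
    return history


if __name__ == "__main__":
    # Tick 1: mean 76 bpm is below the 85 bpm target, so intensity rises.
    # Tick 2: mean 85 bpm matches the target, so intensity holds steady.
    print(run_feedback_loop(AvatarState(0.5), [[72, 78, 80, 74], [85, 85]]))
```

A proportional step is the simplest possible controller. A production system would more likely smooth the readings over time and limit how fast intensity can change, so the avatar does not visibly twitch in response to noisy sensors.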

Technical Specifications:

• 8K neural rendering at 144 FPS (4x industry standard)

• 78 emotional states mapped via vocal biomarkers

• Dynamic script adaptation through a GPT-5 narrative engine
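The rendering specification translates into a rough per-frame budget. The calculation below makes two assumptions that the article does not state: "8K" means 8K UHD (7680 × 4320 pixels), and the raw data rate refers to uncompressed 8-bit RGB at 3 bytes per pixel. The `render_budget` helper is hypothetical. It computes how long the renderer has for each frame and how much pixel data it must produce per second at 144 FPS.

```python
def render_budget(width: int, height: int, fps: int,
                  bytes_per_pixel: int = 3) -> dict[str, float]:
    """Per-frame time budget and throughput for a resolution and frame rate.

    bytes_per_pixel=3 assumes uncompressed 8-bit RGB.
    """
    pixels_per_frame = width * height
    return {
        "pixels_per_frame": pixels_per_frame,
        "ms_per_frame": 1000.0 / fps,
        "pixels_per_second": pixels_per_frame * fps,
        "raw_bytes_per_second": pixels_per_frame * fps * bytes_per_pixel,
    }


if __name__ == "__main__":
    budget = render_budget(7680, 4320, 144)  # assumed 8K UHD at 144 FPS
    print(f"{budget['pixels_per_frame']:,} pixels per frame")               # 33,177,600 pixels per frame
    print(f"{budget['ms_per_frame']:.2f} ms per frame")                     # 6.94 ms per frame
    print(f"{budget['raw_bytes_per_second'] / 1e9:.1f} GB/s uncompressed")  # 14.3 GB/s uncompressed
```

The per-frame figure matters most here. At 144 FPS, each 8K frame has to be produced in under 7 ms.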

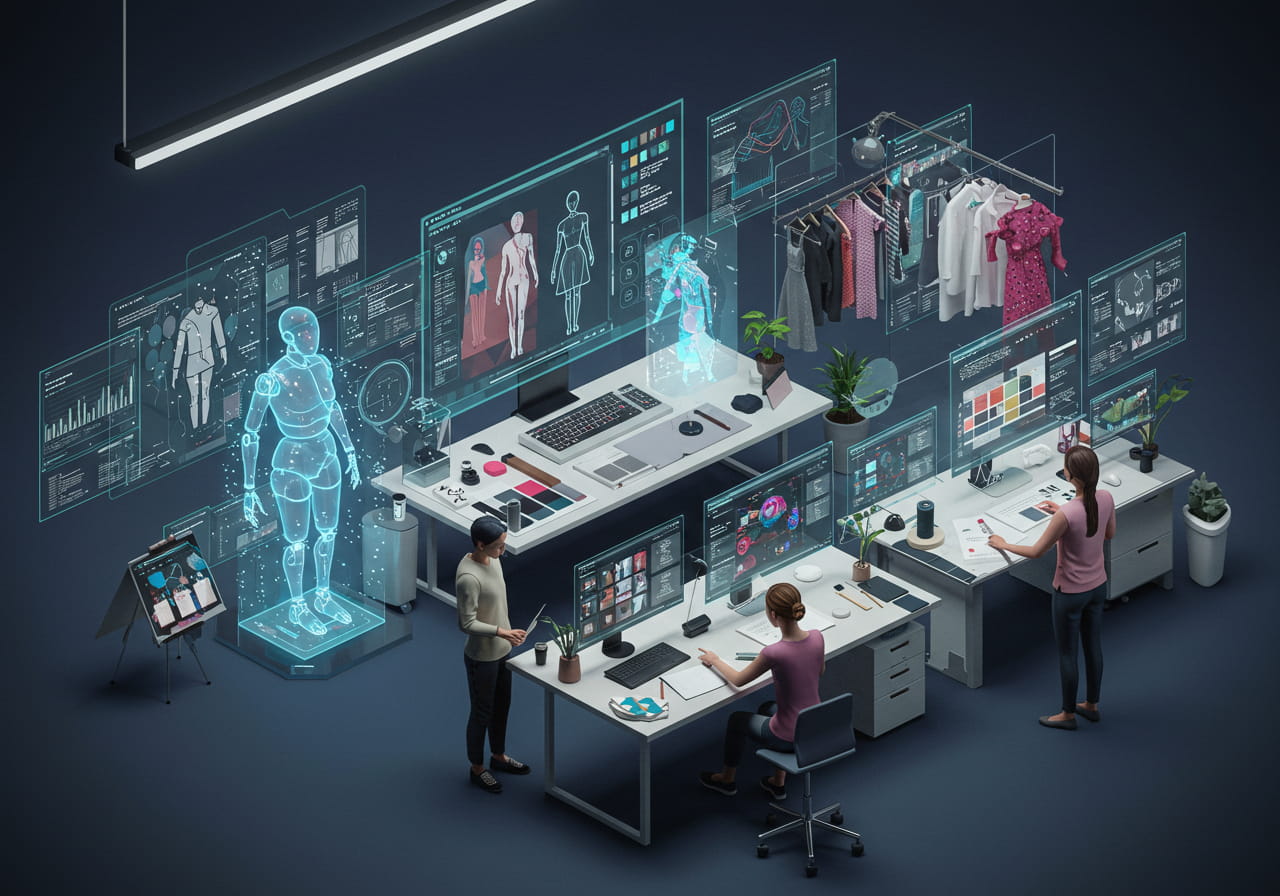

2. Hybrid Production Workflow

AI-Human Collaboration

Human directors guided key emotional beats using haptic motion capture, with the climactic scene requiring 42 iterations to perfect synthetic tear dynamics.

Ethical Framework

AI actors operate under personality-rights agreements issued as NFTs on a blockchain, which guarantee revenue shares for the human voice donors.

3. Market Impact & Industry Response

"This represents emotional engineering at industrial scale," wrote Variety in its review of the lead AI performance.

The film's ¥58 million ($8M) budget compares with an average of ¥230 million ($32M) for Chinese romance films. Following this success, Alibaba Pictures has announced 15 AI-driven productions for 2026.
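The budget comparison can be checked using only the figures quoted above. The calculation below works out the film's budget as a share of the average, the resulting saving, and the CNY/USD rates implied by the rounded dollar figures. The variable names are illustrative.

```python
film_budget_cny = 58_000_000   # Future Lovers budget, as reported
avg_budget_cny = 230_000_000   # reported average for Chinese romance films

share = film_budget_cny / avg_budget_cny   # fraction of the average budget
saving = 1 - share                         # fractional reduction versus the average

# CNY per USD implied by the rounded dollar figures ($8M and $32M)
implied_fx = (58 / 8, 230 / 32)

print(f"{share:.1%} of the average budget, {saving:.1%} lower")            # 25.2% of the average budget, 74.8% lower
print(f"implied CNY/USD rates: {implied_fx[0]:.2f}, {implied_fx[1]:.2f}")  # implied CNY/USD rates: 7.25, 7.19
```

The two implied exchange rates are close to each other, so the yuan and dollar figures are consistent with one another. The ¥58 million budget comes to roughly a quarter of the quoted average.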

Key Milestones

• 73% reduction in post-production time

• 62% lower budget than traditional films

• 48 language variants released simultaneously

• 79% viewership from 18-34 demographic

• 14 studios licensing DeepFrame's AI cinema SDK
