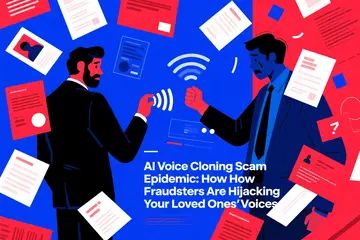

The Rise of AI-Powered Ad Fraud

Digital advertising faces an unprecedented threat as AI-generated fraud surges in 2025. Scammers now use deepfake technology to create fake endorsements, clone voices, and bypass security systems. Major platforms report a 300% increase in synthetic media scams compared to 2023.

How AI Fraud Works

1. Voice cloning: Scammers clone a voice from as little as three seconds of audio, then impersonate CEOs to approve fraudulent transactions

2. Fake influencers: AI generates non-existent "brand ambassadors" promoting scam products

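3. Ad stacking: Fraudsters layer multiple ads in a single ad slot so only the top one is visible. Every ad in the stack still registers an impression, so advertisers pay for views that never happened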
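Ad stacking is the most mechanical of these techniques, so it can be illustrated with a simple geometric check. The sketch below assumes each impression reports its on-page rectangle and stacking order, and flags any ad drawn almost entirely underneath another. The `Impression` record, the `hidden_impressions` function and the 0.9 threshold are hypothetical illustrations, not any ad-verification vendor's API.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Impression:
    """One ad impression as reported by a hypothetical verification tag."""
    ad_id: str
    x: float       # left edge, in CSS pixels
    y: float       # top edge, in CSS pixels
    width: float
    height: float
    z_index: int   # stacking order: higher values are drawn on top


def covered_fraction(lower: Impression, upper: Impression) -> float:
    """Fraction of `lower`'s area hidden beneath `upper`."""
    w = min(lower.x + lower.width, upper.x + upper.width) - max(lower.x, upper.x)
    h = min(lower.y + lower.height, upper.y + upper.height) - max(lower.y, upper.y)
    area = lower.width * lower.height
    if w <= 0 or h <= 0 or area <= 0:
        return 0.0
    return (w * h) / area


def hidden_impressions(page: list[Impression], threshold: float = 0.9) -> set[str]:
    """Return the ad_ids of ads at least `threshold` covered by another ad."""
    hidden: set[str] = set()
    for a, b in combinations(page, 2):
        # On a z_index tie, treat the later element as drawn on top, mirroring
        # how browsers paint positioned elements in document order.
        lower, upper = (a, b) if a.z_index <= b.z_index else (b, a)
        if covered_fraction(lower, upper) >= threshold:
            hidden.add(lower.ad_id)
    return hidden


if __name__ == "__main__":
    page = [
        Impression("ad-visible", 0, 0, 300, 250, z_index=3),
        Impression("ad-stacked-1", 0, 0, 300, 250, z_index=2),
        Impression("ad-stacked-2", 0, 0, 300, 250, z_index=1),
        Impression("ad-sidebar", 320, 0, 160, 600, z_index=1),
    ]
    print(sorted(hidden_impressions(page)))  # ['ad-stacked-1', 'ad-stacked-2']
```

Geometry alone is not enough in practice. A fuller check would also consider opacity, off-screen placement and traffic quality, and the 0.9 threshold here is an arbitrary illustrative choice.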

The Financial Impact

The World Federation of Advertisers estimates that $84 billion was lost to AI ad fraud in 2024 alone. Major cases include:

A European bank losing €12M to AI-generated voice phishing

Fake Elon Musk videos promoting crypto scams on X (formerly Twitter)

According to Juniper Research, 87% of programmatic ad fraud now uses generative AI

Fighting Back Against AI Fraud

Tech companies are deploying countermeasures:

- Google's SynthID embeds watermarks in content generated by Google's own AI models so it can later be identified as synthetic

- Meta's authentication system flags fake business accounts

- Intel's FakeCatcher analyzes subtle blood-flow signals in video pixels to flag deepfake videos

Future Challenges

As AI quality improves, detection becomes harder. The MIT Media Lab found that 72% of people can't distinguish deepfake videos from real ones. Industry experts warn that:

AI fraud will account for 65% of digital ad losses by 2026

Current detection tools have a 42% false positive rate

Regulation lags behind technological developments

Key Takeaways

1. AI fraud is evolving rapidly: New techniques emerge monthly

2. Detection remains imperfect: Both AI and humans struggle to identify fakes

3. Financial stakes are high: Billions lost across industries

4. Collaboration is essential: Tech firms, regulators and advertisers must work together