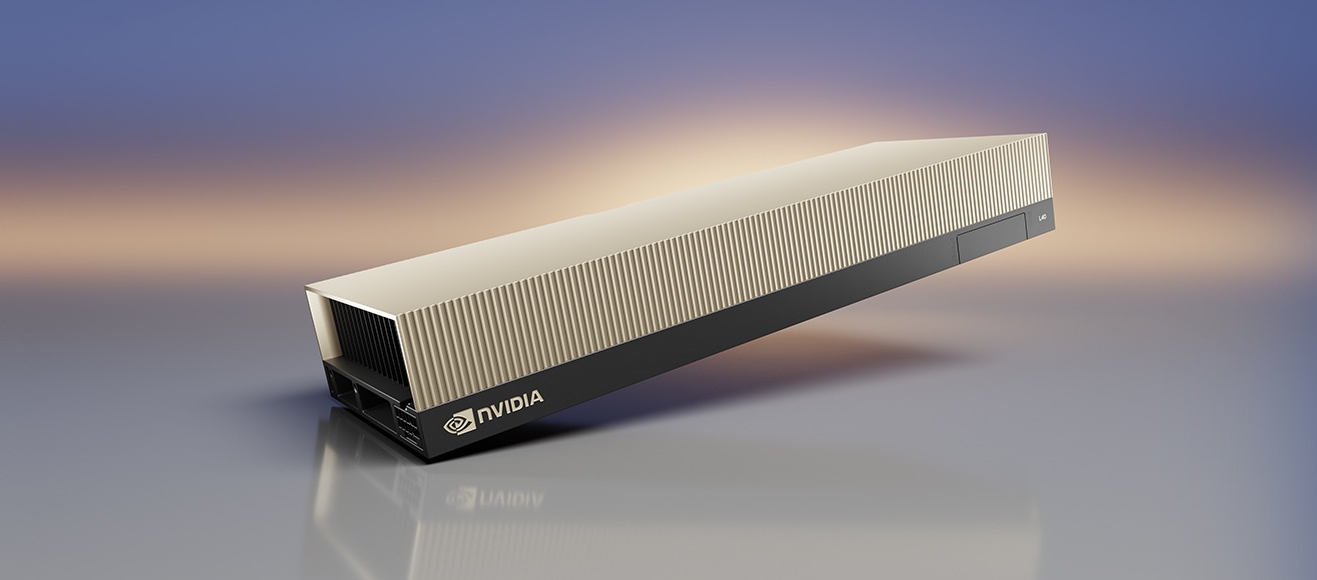

Explore NVIDIA's L40S GPU, a game-changer for edge AI and data centers. Learn its specs, performance benchmarks, and industry impact with Ada Lovelace architecture, 48GB GDDR6 memory, and groundbreaking efficiency.

1. Technical Specifications: The Power Behind the L40S

Launched in August 2023, the NVIDIA L40S GPU is built on the Ada Lovelace architecture and features 18,176 CUDA cores, 568 fourth-generation Tensor Cores, and 142 third-generation RT Cores. Its 48GB of GDDR6 ECC memory and 864 GB/s of memory bandwidth make it a standout for edge AI and data center workloads. Unlike the H100, which targets hyperscale clouds, the L40S prioritizes PCIe 4.0 compatibility and passive cooling, which makes it well suited to distributed environments.
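As a quick check on the bandwidth figure, peak GDDR6 bandwidth is the bus width in bits times the per-pin data rate, divided by 8 bits per byte. The sketch below assumes a 384-bit memory interface and an 18 Gb/s per-pin rate for the L40S. Neither value appears in this article; the rate is simply what the 864 GB/s figure implies for a 384-bit bus.

```python
def gddr_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


# Assumed L40S memory configuration (not stated in the article):
# a 384-bit bus running GDDR6 at 18 Gb/s per pin.
L40S_BUS_WIDTH_BITS = 384
L40S_DATA_RATE_GBPS = 18.0

if __name__ == "__main__":
    bw = gddr_bandwidth_gb_s(L40S_BUS_WIDTH_BITS, L40S_DATA_RATE_GBPS)
    print(f"L40S peak bandwidth: {bw:.0f} GB/s")
```

Under those assumptions the formula reproduces the quoted 864 GB/s.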

1.1 Performance Benchmarks: Outpacing the A100

The L40S delivers:

Up to 1.7× faster AI training and up to 1.2× faster inference than the A100, per NVIDIA's figures.

212 TFLOPS of RT Core performance for real-time ray tracing, a capability the A100 lacks entirely because it has no RT Cores.

733 TFLOPS of FP8 Tensor performance via its Transformer Engine, enabling efficient handling of billion-parameter LLMs like GPT-3-40B.
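FP8 matters for model size as much as for speed: each parameter takes one byte in FP8 versus two in FP16. The rough sketch below estimates whether a model's weights alone fit in the L40S's 48GB. It treats 1 GB as 10^9 bytes and ignores activations, KV cache, and optimizer state, all of which add to the real footprint; the 40B-parameter model in the usage lines is a hypothetical example.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}

# L40S memory capacity, treated as 48 * 10**9 bytes for this estimate.
L40S_MEMORY_GB = 48


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """GB needed for the weights alone (1 GB = 10**9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def weights_fit(params_billion: float, precision: str,
                memory_gb: float = L40S_MEMORY_GB) -> bool:
    """True if the weights alone fit; activations, KV cache and optimizer state are ignored."""
    return weight_footprint_gb(params_billion, precision) <= memory_gb


if __name__ == "__main__":
    # Hypothetical 40B-parameter model, compared at two precisions.
    for precision in ("fp16", "fp8"):
        need = weight_footprint_gb(40, precision)
        print(f"40B params in {precision}: {need} GB, fits: {weights_fit(40, precision)}")
```

Under these assumptions, a 40B-parameter model needs about 40 GB in FP8, which fits, but about 80 GB in FP16, which does not fit on a single card.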

2. Edge AI Revolution: Use Cases and Adoption

Enterprises like Dell, HPE, and Oracle deploy L40S-powered OVX servers for:

Smart Manufacturing: BMW’s Munich plant uses L40S clusters for defect detection, achieving 8ms latency with YOLOv8 models.

Telecom 5G Nodes: Verizon leverages L40S for on-site 4K video analytics, compressing streams 3× faster than H100.

Generative AI: CoreWeave reports 80 images/minute with Stable Diffusion XL in industrial settings.
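To read these deployment figures in per-item terms, latency and per-minute throughput can be converted with simple arithmetic. This is a sketch that assumes one stream processed sequentially with no batching; real pipelines that batch or overlap work will behave differently.

```python
def latency_ms_to_fps(latency_ms: float) -> float:
    """Frames per second if each frame is processed one after another at the given latency."""
    return 1000.0 / latency_ms


def per_minute_to_seconds_per_item(items_per_minute: float) -> float:
    """Average seconds per item for a throughput quoted per minute."""
    return 60.0 / items_per_minute


if __name__ == "__main__":
    print(f"8 ms per frame -> {latency_ms_to_fps(8):.0f} frames/s on one sequential stream")
    print(f"80 images/min -> {per_minute_to_seconds_per_item(80):.2f} s per image")
```

An 8ms per-frame latency corresponds to at most 125 frames per second on a single sequential stream, and 80 images per minute averages 0.75 seconds per image.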

2.1 Cost Efficiency: Why the L40S Beats A100

40% lower memory costs with GDDR6 vs. HBM.

Passive cooling reduces operational expenses in rugged environments.

PCIe 4.0 x16 ensures compatibility with existing infrastructure.

3. Challenges and Future Roadmap

While the L40S excels in inference and edge workloads, its limitations include:

No NVLink support, limiting scalability in large clusters.

Its 864 GB/s of memory bandwidth trails the A100 80GB SXM's 2,039 GB/s, a handicap for bandwidth-bound LLM training.
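One way to see why the bandwidth gap matters is a bandwidth-bound lower bound: any pass that touches every weight once cannot finish faster than the weight size divided by memory bandwidth. The sketch below applies this to a hypothetical 40 GB of weights. It ignores compute time, on-chip caching, and all other memory traffic, so it illustrates the roughly 2.4× bandwidth ratio rather than predicting real performance.

```python
def min_weight_read_ms(weight_gb: float, bandwidth_gb_s: float) -> float:
    """Lower bound, in ms, on streaming every weight from memory once."""
    return weight_gb / bandwidth_gb_s * 1000.0


L40S_BW_GB_S = 864.0
A100_80GB_BW_GB_S = 2039.0

if __name__ == "__main__":
    # Hypothetical 40 GB of weights (e.g. a 40B-parameter model in FP8).
    for name, bw in (("L40S", L40S_BW_GB_S), ("A100 80GB", A100_80GB_BW_GB_S)):
        print(f"{name}: {min_weight_read_ms(40.0, bw):.1f} ms per pass over 40 GB of weights")
```

At 864 GB/s, one pass over 40 GB takes at least about 46 ms on the L40S, versus about 20 ms at the A100's 2,039 GB/s.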

NVIDIA's 2025 roadmap addresses these gaps with:

PCIe 5.0 integration for up to 128 GB/s of bidirectional throughput on an x16 link.

Expanded vGPU support for up to 48 partitioned instances.
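The 128 GB/s figure for PCIe 5.0 is the raw bidirectional rate of an x16 link: 32 GT/s per lane, 16 lanes, both directions. The sketch below computes link throughput for PCIe 4.0 and 5.0 with and without the 128b/130b encoding overhead, and also shows what an even split of 48GB across 48 vGPU instances would leave per instance. The even split is an illustrative assumption; actual vGPU profile sizes may differ.

```python
# Per-lane transfer rate in GT/s for each PCIe generation.
PCIE_GT_PER_S = {4: 16.0, 5: 32.0}
# PCIe 3.0 through 5.0 use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_throughput_gb_s(gen: int, lanes: int = 16, bidirectional: bool = False,
                         include_encoding: bool = True) -> float:
    """Approximate PCIe link throughput in GB/s."""
    per_lane = PCIE_GT_PER_S[gen] / 8  # one bit per transfer per lane, 8 bits per byte
    if include_encoding:
        per_lane *= ENCODING_EFFICIENCY
    total = per_lane * lanes
    return total * 2 if bidirectional else total


def memory_per_instance_gb(total_gb: float, instances: int) -> float:
    """Even split of GPU memory across vGPU instances (an illustrative assumption)."""
    return total_gb / instances


if __name__ == "__main__":
    for gen in (4, 5):
        raw = pcie_throughput_gb_s(gen, bidirectional=True, include_encoding=False)
        eff = pcie_throughput_gb_s(gen)
        print(f"PCIe {gen}.0 x16: {raw:.0f} GB/s raw bidirectional, "
              f"~{eff:.1f} GB/s per direction after encoding")
    print(f"48GB / 48 instances = {memory_per_instance_gb(48, 48):.0f} GB each")
```

Doubling the per-lane rate from PCIe 4.0 to 5.0 doubles the x16 link from about 31.5 GB/s to about 63 GB/s per direction after encoding.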

4. Industry Reactions and Strategic Impact

Analysts like Ming-Chi Kuo highlight the L40S's role in democratizing edge AI. Oracle's Compute Cloud@Customer uses L40S clusters to comply with data sovereignty laws while reducing latency. Meanwhile, startups report 40% faster development cycles using L40S-powered AI assistants.

Key Takeaways

Edge Dominance: Optimized for latency-sensitive AI with passive cooling and PCIe 4.0.

Cost-Effective: $13K price tag with 40% lower memory costs than HBM-based GPUs.

Versatility: Excels in generative AI, 3D rendering, and real-time analytics.