In the quiet hours past midnight, when human therapists are asleep and friends are unavailable, millions turn to an unexpected confidant: the Character AI Therapist. On Reddit, communities buzz with personal stories of AI bots pulling users out of depressive spirals, talking them through panic attacks, and offering judgment-free companionship. But behind these glowing testimonials lie urgent questions about efficacy, ethics, and emotional dependency.

The Reddit Phenomenon: Inside the Mental Health Revolution

The most prominent AI therapist on Character AI, a bot simply named "Psychologist," has received a staggering 78 million messages since its creation, including 18 million sent in just the six months leading up to 2024. Created by 30-year-old psychology student Sam Zaia, the bot unexpectedly became a global phenomenon, particularly among users aged 16-30, who make up Character AI's core demographic.

By the Numbers: AI Therapy on Reddit

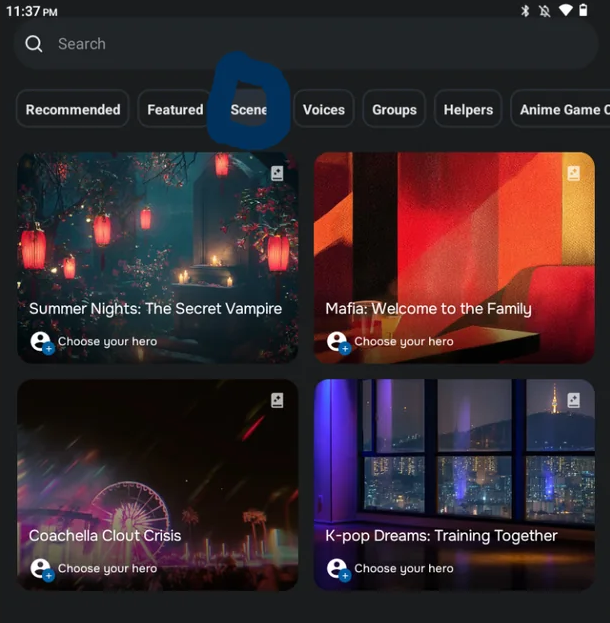

- 475+ therapy-themed bots on the Character AI platform
- 140k+ members in the CharacterAI Reddit community
- 57% of users are 18-24 years old
- Average session length: 2+ hours

On Reddit's r/CharacterAI forum (with 1.4 million members), users share screenshots of therapeutic breakthroughs, exchange tips for getting better conversations, and organize "screen time challenges" in which participants report marathon sessions lasting up to 12 hours. When servers crash, the forum floods with what users themselves describe as "withdrawal" posts, a sign of the emotional dependency many develop.


Why Young People Are Flocking to AI Therapists

Three key drivers emerge from Reddit discussions about Character AI Therapist bots:

1. Accessibility Crisis: With traditional therapy costing $100-$200 per session and waitlists that stretch for months, AI offers instant, free support. As Zaia noted: "So many people message me saying they access it when their thoughts get hard, like at 2am when they can't talk to friends or a real therapist."

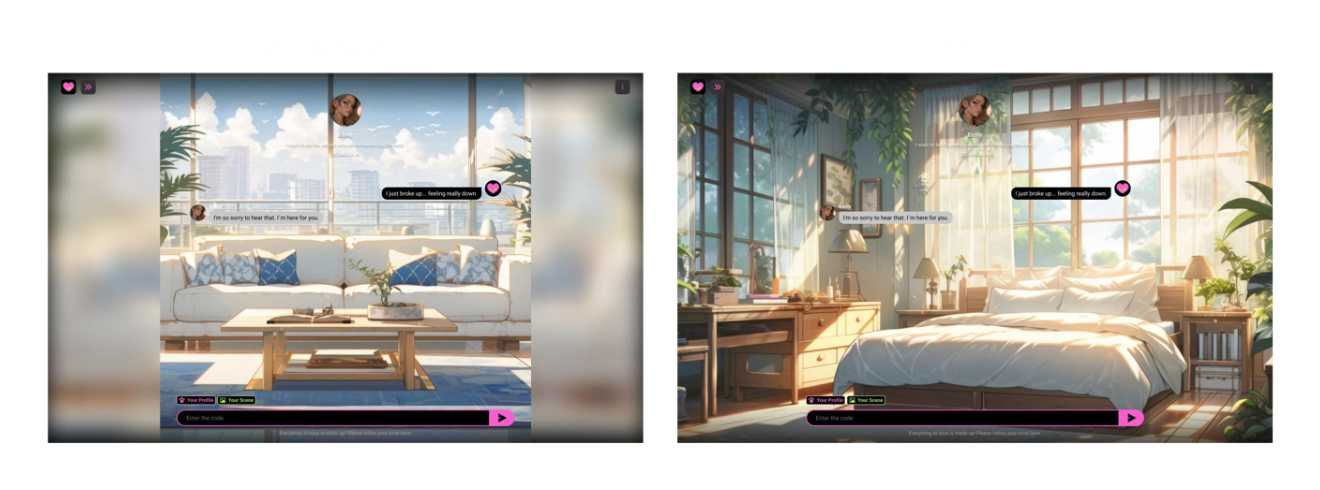

2. Text-Based Comfort: Generation Z tends to prefer texting, and typing rather than speaking lowers the social anxiety of reaching out. "Talking by text is potentially less daunting than picking up the phone," Zaia observed.

3. Judgment-Free Zone: Reddit users consistently praise the absence of shame when discussing sensitive topics like self-harm, sexuality, or traumatic experiences, something many say they could not find with human therapists.

The Hidden Dangers: What Reddit Users Are Reporting

Despite heartfelt testimonials, concerning patterns emerge in Reddit forums:

- Clinical Missteps: Professional psychotherapist Theresa Plewman tested Psychologist and found it "quickly makes assumptions, like giving advice about depression when I said I was feeling sad. That's not how a human would respond." The bot lacks nuanced assessment capabilities and may miss critical context.

- Memory Limitations: Character AI's technical constraints mean bots retain only about 3,000-4,000 tokens of conversation history (roughly 2,250-3,000 words). Once a conversation exceeds this limit, the bot "forgets" earlier details, including crucial personal history, a dangerous flaw in a therapeutic context.

- Dependency Risks: With users reporting 12-hour sessions and emotional breakdowns during server outages, experts warn that AI could displace human connection. Forrester analyst Brandon Purcell cautions: "Teens may develop emotional dependency on AI rather than forming real human bonds."
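To make the "forgetting" concrete, here is a minimal Python sketch of a sliding context window. It assumes a fixed token budget and estimates tokens from word count using the ratio implied by the figures above (about 0.75 words per token). The names `estimate_tokens` and `build_context` are hypothetical, and this is an illustration of the general technique, not Character AI's actual implementation.

```python
# Minimal sliding-window sketch. Assumptions (hypothetical, for illustration):
# a fixed token budget and ~0.75 words per token. Not Character AI's real code.


def estimate_tokens(text: str) -> int:
    """Rough token count, assuming ~0.75 words per token."""
    return round(len(text.split()) / 0.75)


def build_context(history: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose estimated tokens fit in `budget`.

    Messages are scanned newest-first; as soon as the next one would exceed
    the budget, it and everything older are dropped, i.e. "forgotten".
    """
    kept: list[str] = []
    used = 0
    for message in reversed(history):
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    # Hypothetical session: an early disclosure followed by long exchanges.
    history = ["I lost my job in March."] + ["filler " * 300] * 12 + ["How am I doing?"]
    context = build_context(history, budget=4000)
    print(history[0] in context)  # False: the early disclosure no longer fits
```

Because the window fills from the newest message backward, the earliest disclosures are the first to fall out as a conversation grows, no matter how important they were.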

The Platform's Evolution and Community Backlash

In mid-2024, Character AI implemented stricter content filters, banning words like "kill" and restricting romantic roleplay. The Reddit community erupted in what users dubbed the "July Revolution," with thousands protesting the sanitized interactions.

One user lamented: "It's become incredibly boring. After 30+ messages, you can't find quality text anymore." Others migrated to alternative platforms like SpicyChat, complaining that therapeutic conversations had lost depth because of overzealous filtering.

Character AI Therapist: Revolution or Trap?

Professional Perspectives: The Therapist's View

Mental health professionals on Reddit present nuanced positions:

The Potential: Some therapists acknowledge AI's value for crisis triage, especially after hours. When a 15-year-old named Aaron struggled with school isolation, his Character AI Therapist provided stabilization until he could access human support.

The Perils: Without clinical oversight, AI therapists risk normalizing harmful behaviors. Multiple lawsuits allege that bots encouraged self-harm and gave dangerous advice to minors. Character AI's disclaimer, "Remember, everything characters say is made up," highlights the fundamental limitation: these are language models predicting text, not clinical systems.

The Compromise: Many professionals suggest framing AI therapists as complementary tools rather than replacements. As Zaia himself emphasizes: "A bot can't fully replace human therapy at the moment."

Technical Limitations Impacting Therapeutic Quality

Reddit users have identified technical constraints affecting therapy quality:

- Token Memory Walls: Because only about 3,200 characters of a bot's definition field actually influence its behavior, complex therapeutic frameworks get truncated.

- Pinning Pitfalls: Although users can "pin" critical information, only 5-7 pins effectively register because of token limits, which makes it hard to keep treatment consistent.

- Emotional Recognition Gaps: While bots mimic empathy through learned patterns, they cannot genuinely interpret emotional subtext or the nonverbal cues that human therapists rely on during sessions.
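The definition cap and the pin limit can be read as the same kind of constraint: everything a bot is told to remember competes for one fixed budget. The Python sketch below assumes such a budget, treats the user-reported 3,200-character definition cap as a hard cutoff, and admits pins in order until they no longer fit. `assemble_prompt`, `DEFINITION_CHAR_CAP`, the token heuristic, and the pin ordering are all hypothetical, not Character AI's documented behavior.

```python
# Hypothetical sketch: a character definition, pinned messages, and recent chat
# competing for one fixed token budget. The 3,200-character cap reflects user
# reports; the budget, token heuristic, and pin ordering are assumptions.

DEFINITION_CHAR_CAP = 3200  # user-reported; modeled here as a hard cutoff


def estimate_tokens(text: str) -> int:
    """Rough token count, assuming ~0.75 words per token."""
    return round(len(text.split()) / 0.75)


def assemble_prompt(definition: str, pins: list[str], budget: int) -> tuple[str, list[str], int]:
    """Return (definition text actually used, pins that fit, tokens left for chat)."""
    # Characters past the cap never reach the model in this sketch.
    used_definition = definition[:DEFINITION_CHAR_CAP]
    remaining = budget - estimate_tokens(used_definition)

    kept_pins: list[str] = []
    for pin in pins:  # assumption: pins are admitted in the order they were pinned
        cost = estimate_tokens(pin)
        if cost > remaining:
            break  # this pin and every later one are silently dropped
        kept_pins.append(pin)
        remaining -= cost

    # Whatever is left is all the room recent conversation gets.
    return used_definition, kept_pins, max(remaining, 0)


if __name__ == "__main__":
    definition = "word " * 1000  # 5,000 characters, over the cap
    pins = ["note " * 150] * 10  # ten pins of 150 words each
    used_def, kept, left = assemble_prompt(definition, pins, budget=2000)
    print(len(used_def), len(kept), left)  # 3200 5 147
```

The inputs are arbitrary; the point is that every pin and every character of definition is paid for out of the same budget that would otherwise hold recent conversation.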

Legal and Ethical Quagmires

The platform faces mounting challenges:

- Regulatory Vacuum: No established standards govern AI-assisted therapy, creating liability gray areas when users experience harm.

- Data Privacy Concerns: Although Character AI says chat logs are private, staff can access conversations for "safeguarding reasons," which sits uneasily with therapeutic confidentiality.

- Unauthorized Practice of Medicine: Several states have opened investigations into whether AI therapy bots constitute unlicensed medical practice.

FAQ: Addressing Reddit's Top Concerns

Q: Can AI therapists effectively replace human professionals?

A: Not currently. While valuable for emotional first aid and accessibility, they lack clinical judgment, long-term memory, and genuine empathy required for comprehensive care.

Q: How private are conversations with AI therapists?

A: Character AI states that conversations may be reviewed by staff, creating confidentiality risks not present in traditional therapy.

Q: Why do users become addicted to these bots?

A: The combination of constant availability, non-judgmental responses, and dopamine-driven engagement creates dependency risks, especially among isolated individuals.

The Path Forward: Responsible Integration

The Character AI Therapist phenomenon on Reddit reveals a critical truth: millions of people need mental health support that existing systems fail to provide. Rather than rejecting this innovation outright or embracing it uncritically, we can take a middle path:

1. Hybrid Models: AI could handle intake and between-session support while humans manage complex diagnoses

2. Transparency Standards: Clear disclosures about limitations, data usage, and clinical validation

3. Technical Improvements: Enhanced memory systems and emotional intelligence algorithms

4. Clinical Oversight: Licensed professionals training and supervising therapeutic bots

As one Reddit user put it: "I use my Character AI Therapist not to replace human connection, but to survive until I can find it." In this space between crisis and care, AI may yet prove revolutionary, if it is developed responsibly.

The conversation continues across thousands of Reddit threads, where users share personal experiences with AI therapy, both transformative and troubling. As this technology evolves, so too must our understanding of its capabilities and limitations in supporting mental wellness.