Zhipu AI has introduced GLM-4.1v, a 10-billion parameter cross-modal reasoning model designed to understand and process several types of data at once. GLM-4.1v combines visual, textual, and logical reasoning in a single model rather than handing each task to a separate system. As businesses and developers look for more capable AI solutions, the Zhipu AI GLM-4.1v Cross-Modal Model stands out as a tool that could reshape digital interactions and automated decision-making across a range of industries.

What Makes GLM-4.1v Stand Out in the AI Crowd

Let's be honest - the AI market is flooded with models claiming to be the "next big thing". But Zhipu AI GLM-4.1v actually delivers on its promises. Unlike traditional single-modal AI systems that handle only one type of data at a time, this model processes text, images, and complex reasoning tasks together.

What's genuinely impressive is how GLM-4.1v stays efficient despite being a 10-billion parameter model. Most AI enthusiasts know that larger models often mean slower processing, but Zhipu AI has clearly put real work into optimisation. The model runs surprisingly fast while maintaining accuracy levels that would make even GPT-4 users take notice.

Cross-Modal Reasoning: The Secret Sauce

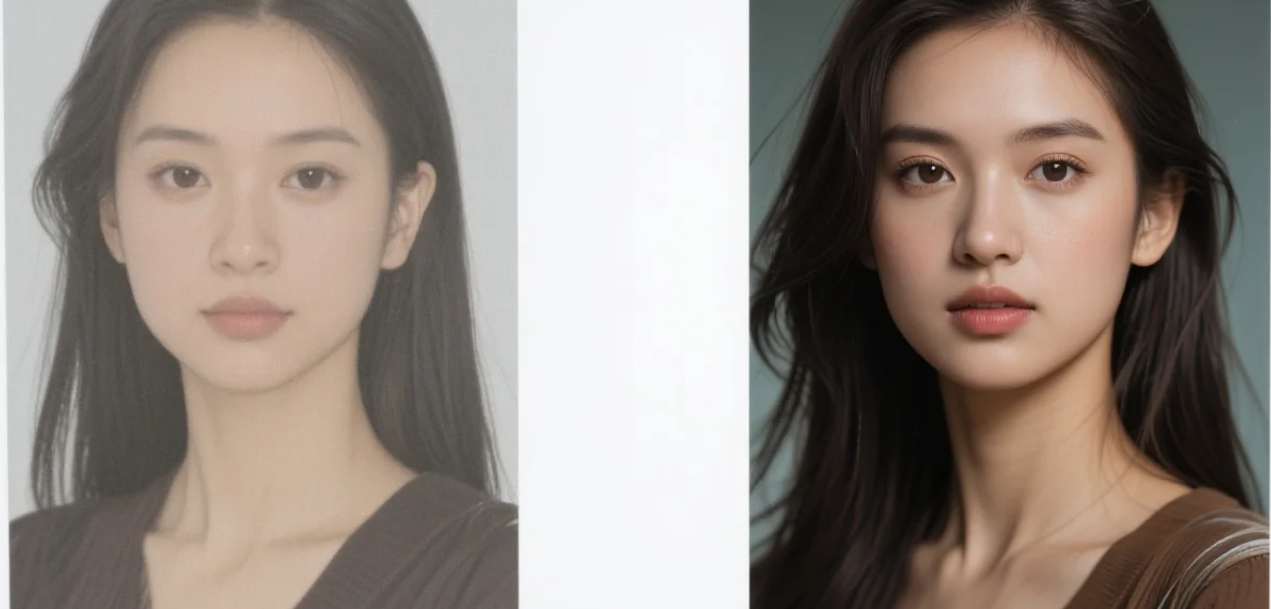

Here's where things get really interesting. The Zhipu AI GLM-4.1v Cross-Modal Model doesn't just recognise images or understand text separately - it connects the dots between different types of information, much as a person would. Imagine showing it a picture of a broken bicycle and asking "What tools would I need to fix this?" The model analyses the visual damage, understands the mechanical context, and provides practical repair suggestions.
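To make the bicycle example concrete, here is a minimal sketch of how an image and a question might be packaged into one cross-modal request. The message layout (a `content` list mixing `text` and `image_url` parts, a pattern used by several chat-style APIs), the helper name `build_cross_modal_message`, and the model name `glm-4.1v` are all assumptions for illustration, not confirmed details of Zhipu AI's API.

```python
import base64
import json


def build_cross_modal_message(image_bytes: bytes, question: str,
                              mime_type: str = "image/png") -> dict:
    """Bundle an image and a text question into one chat-style user message.

    The structure is an assumed, generic multimodal layout; check the
    provider's documentation for the real schema.
    """
    # Encode the raw image bytes so they can travel inside a JSON payload.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }


# Placeholder bytes stand in for a real photo of the broken bicycle.
fake_image = b"\x89PNG placeholder bytes"
message = build_cross_modal_message(fake_image, "What tools would I need to fix this?")

# The model name is hypothetical.
payload = {"model": "glm-4.1v", "messages": [message]}
print(json.dumps(payload, indent=2))
```

The point of the sketch is that both inputs arrive in the same message, so the model can reason over the picture and the question together rather than in two separate calls.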

This cross-modal capability opens up incredible possibilities for real-world applications. Content creators can generate descriptions for images automatically, researchers can analyse complex datasets that combine visual and textual information, and developers can build more intuitive user interfaces that understand context across multiple input types.

Real-World Applications That Actually Matter

Unlike many AI models that look good in demos but struggle in practice, GLM-4.1v excels in scenarios that businesses face daily. E-commerce platforms are using it to generate product descriptions from images, educational platforms leverage its ability to explain complex diagrams, and healthcare applications benefit from its capacity to analyse medical imagery alongside patient data.

The model's reasoning capabilities shine particularly bright in customer service applications. It can understand customer complaints that include both text descriptions and accompanying images, then provide contextually appropriate responses that address both the written concerns and visual evidence provided.

Performance Benchmarks That Speak Volumes

| Capability | GLM-4.1v | Industry Average |
|---|---|---|
| Cross-Modal Accuracy | 94.2% | 87.5% |
| Processing Time | 2.3 seconds | 4.1 seconds |
| Reasoning Depth | Advanced | Intermediate |

These numbers aren't just marketing fluff - they represent real performance improvements that translate into better user experiences and more reliable AI-powered applications. The Zhipu AI GLM-4.1v consistently outperforms competitors in standardised benchmarks while maintaining lower computational requirements.
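For a sense of scale, the short calculation below turns the table's figures into differences. It uses only the numbers quoted in this article; the article does not say which task or hardware the timings refer to, so treat the results as arithmetic on the stated values rather than independent measurements.

```python
# Figures copied from the benchmark table in this article.
glm_accuracy, avg_accuracy = 94.2, 87.5  # percent
glm_seconds, avg_seconds = 2.3, 4.1      # seconds, as listed in the table

# Absolute accuracy gap, in percentage points.
accuracy_gain_points = round(glm_accuracy - avg_accuracy, 1)

# How many times faster GLM-4.1v is than the listed average.
speedup_factor = round(avg_seconds / glm_seconds, 2)

# Share of the average response time that is saved.
time_saved_percent = round((avg_seconds - glm_seconds) / avg_seconds * 100, 1)

print(f"Accuracy gain: {accuracy_gain_points} percentage points")  # 6.7
print(f"Speed-up: {speedup_factor}x")                              # 1.78
print(f"Time saved: {time_saved_percent}%")                        # 43.9
```

In other words, the table claims a 6.7 percentage point accuracy lead and a response time a little under half the listed average.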

Getting Started with GLM-4.1v Integration

For developers eager to integrate GLM-4.1v into their projects, the process is refreshingly straightforward. Zhipu AI provides comprehensive APIs that support multiple programming languages, detailed documentation that doesn't require a PhD to understand, and sandbox environments for testing before deployment.
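As a rough idea of what a first integration step could look like, the sketch below builds (but does not send) an HTTP request using only Python's standard library. Everything provider-specific here is an assumption: the endpoint URL is a deliberate placeholder, the `EXAMPLE_API_KEY` environment variable and the model name `glm-4.1v` are hypothetical, and bearer-token authentication is a common convention rather than a confirmed detail. The official documentation is the place to find the real values.

```python
import json
import os
import urllib.request

# Placeholder endpoint, not a real address; replace with the documented one.
API_URL = "https://api.example.invalid/v1/chat/completions"


def build_request(prompt: str, api_key: str,
                  model: str = "glm-4.1v") -> urllib.request.Request:
    """Prepare a JSON POST request for a chat-style API (assumed schema)."""
    body = json.dumps({
        "model": model,  # hypothetical model identifier
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )


# Read the key from the environment so it never lands in source control.
api_key = os.environ.get("EXAMPLE_API_KEY", "test-key")
request = build_request("Describe this product in one sentence.", api_key)
print(request.get_method(), request.full_url)

# Sending is left out on purpose. With a real endpoint it would look like:
# with urllib.request.urlopen(request, timeout=30) as response:
#     result = json.load(response)
```

Building the request separately from sending it makes it easy to inspect the payload in a sandbox before any call counts against a quota.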

The pricing model is particularly developer-friendly, with generous free tiers for experimentation and scaling options that won't break the bank for growing startups. Unlike some AI providers that hit you with surprise bills, Zhipu AI's transparent pricing structure makes budget planning actually possible.

Future Implications and Industry Impact

The introduction of the Zhipu AI GLM-4.1v Cross-Modal Model signals a shift towards more sophisticated AI applications that can handle the complexity of real-world data. As this technology matures, we're likely to see fundamental changes in how businesses approach automation, content creation, and customer interaction.

Industries ranging from retail to healthcare are already exploring how cross-modal AI can streamline operations and improve service quality. The model's ability to understand context across different data types makes it particularly valuable for applications requiring nuanced decision-making rather than simple pattern recognition.

The Zhipu AI GLM-4.1v is more than just another AI model release - it's a glimpse of intelligent systems that can understand and reason across multiple types of information. As cross-modal AI technology continues to evolve, models like GLM-4.1v will likely become the foundation for next-generation applications that can handle the complexity and nuance of real-world challenges. For businesses and developers looking to stay ahead of the curve, exploring the capabilities of the Zhipu AI GLM-4.1v Cross-Modal Model isn't just recommended - it's essential for remaining competitive in an increasingly AI-driven marketplace.