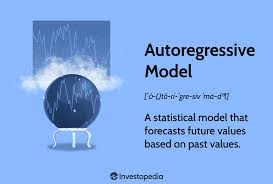

Introduction: Why Autoregressive Models Matter in AI Music

Autoregressive models are at the heart of some of the most advanced tools for music generation. If you’ve ever used an AI to generate a melody that builds progressively note-by-note—or chord-by-chord—you’ve likely seen an autoregressive system in action.

In AI music, generating with an autoregressive model means producing each musical element based on the ones that came before. It’s a bit like writing a sentence: each word depends on the words before it.
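Formally, this is the chain rule of probability. The model splits the probability of a whole sequence of musical tokens $x_1, \dots, x_T$ into one next-token prediction per step. The notation here is introduced for illustration and is not taken from any specific model’s paper.

```latex
p(x_1, x_2, \dots, x_T) = \prod_{t=1}^{T} p\left(x_t \mid x_1, \dots, x_{t-1}\right)
```

Generation runs this factorization forward: sample $x_1$, then $x_2$ given $x_1$, then $x_3$ given both, and so on.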

But how does this concept apply to music, and how can you use it effectively? Let’s unpack the tech behind it, examine real tools powered by autoregression, and give you actionable ways to start generating music using this intelligent approach.

Key Features of Autoregressive Models in Music Generation

Sequential Note Prediction

Autoregressive models generate music one token at a time, whether that token is a note, a chord, or a snippet of audio. This sequential generation allows for coherent melodic and rhythmic patterns.

Long-Term Musical Structure

Because each output is conditioned on the history of previous tokens, the model can build repeating motifs, resolve harmonic tension, or develop themes across time.

Flexible Representation

These models can work on:

Symbolic input (e.g., MIDI or ABC notation)

Raw audio (e.g., Jukebox, which first compresses waveforms into discrete codes with a VQ-VAE)

Spectrograms (an image-like time-frequency representation, also used by diffusion-based tools such as Riffusion)
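To make the symbolic case concrete, here is a minimal Python sketch that turns a short melody into an integer token sequence a model could predict one step at a time. The event vocabulary (NOTE_ON, NOTE_OFF, TIME_SHIFT ids) and the names `Note` and `encode` are assumptions loosely modeled on common MIDI-style event encodings; real models such as Music Transformer or MuseNet define their own vocabularies.

```python
from dataclasses import dataclass


@dataclass
class Note:
    pitch: int     # MIDI pitch number, 0-127
    start: int     # onset in time steps (hypothetical grid, e.g. 16th notes)
    duration: int  # length in time steps


# Hypothetical vocabulary layout: 128 NOTE_ON ids, 128 NOTE_OFF ids, then TIME_SHIFT ids.
NOTE_ON_BASE = 0
NOTE_OFF_BASE = 128
TIME_SHIFT_BASE = 256
MAX_SHIFT = 32  # longest single time shift, in steps


def encode(notes: list[Note]) -> list[int]:
    """Convert notes into a flat, time-ordered list of event tokens."""
    events = []  # (time, order, token); order 0 puts NOTE_OFF before NOTE_ON at equal times
    for n in notes:
        events.append((n.start, 1, NOTE_ON_BASE + n.pitch))
        events.append((n.start + n.duration, 0, NOTE_OFF_BASE + n.pitch))
    events.sort()

    tokens, now = [], 0
    for time, _, token in events:
        gap = time - now
        while gap > 0:  # split long gaps into several TIME_SHIFT tokens
            step = min(gap, MAX_SHIFT)
            tokens.append(TIME_SHIFT_BASE + step - 1)
            gap -= step
        now = time
        tokens.append(token)
    return tokens


melody = [Note(60, 0, 4), Note(64, 4, 4), Note(67, 8, 8)]  # C4, E4, G4
print(encode(melody))  # → [60, 259, 188, 64, 259, 192, 67, 263, 195]
```

Each integer is one prediction target; a decoder would reverse the mapping to recover notes and timing.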

Transformer-based Architecture

Modern autoregressive music models often rely on transformers, especially the decoder-only variant seen in GPT-style models. This architecture handles long-range dependencies far better than older RNNs.

Human-like Creativity

The outputs tend to mimic the style, tempo, and musical rules found in the training data. With proper tuning, results often sound strikingly human.
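The link between training data and output style can be shown with a far simpler autoregressive model than a transformer. The sketch below swaps in a first-order Markov chain (a bigram model) purely for illustration: it counts which pitch follows which in a training melody, then samples a new melody one note at a time from those counts. The training melody and the function names are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigram(melodies):
    """Count how often each pitch follows each other pitch."""
    counts = defaultdict(Counter)
    for melody in melodies:
        for prev, nxt in zip(melody, melody[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, seed, length, rng):
    """Autoregressively extend `seed`: each new pitch is sampled given the previous one."""
    out = list(seed)
    while len(out) < length:
        options = counts.get(out[-1])  # .get does not create empty entries
        if not options:  # pitch never seen with a successor: stop early
            break
        pitches = list(options)
        weights = [options[p] for p in pitches]
        out.append(rng.choices(pitches, weights=weights, k=1)[0])
    return out


# Hypothetical training data: a C-major melody fragment as MIDI pitches.
training = [[60, 62, 64, 65, 67, 65, 64, 62, 60, 64, 67, 72, 67, 64, 60]]
model = train_bigram(training)
print(generate(model, seed=[60], length=12, rng=random.Random(0)))
```

Every transition in the output already occurs in the training melody, which is the "mimicking" effect in miniature. A transformer replaces the count table with a learned network that conditions on the whole history rather than only the previous note, which is what lets it capture longer phrases.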

Real Autoregressive Models That Generate AI Music

MuseNet (OpenAI)

Trained on MIDI data across multiple genres.

Can generate up to 4-minute compositions with 10 instruments.

Outputs symbolic music, ideal for digital composition.

Music Transformer (Magenta)

One of the first transformer-based autoregressive models for symbolic music.

Known for generating long, structured piano pieces.

Open-source and customizable.
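Decoder-only transformers, Music Transformer included, enforce the autoregressive rule with a causal mask: the output at position t may only attend to positions up to t. Below is a minimal pure-Python sketch of single-head causal attention over toy vectors. It omits the learned projections and the relative position attention that Music Transformer adds, so treat it as an illustration of the masking idea only; the input vectors are hypothetical.

```python
import math


def causal_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Position t only attends to positions 0..t, so its output never
    depends on future tokens.
    """
    d = len(queries[0])
    outputs = []
    for t, q in enumerate(queries):
        # Future positions are skipped entirely, equivalent to masking their scores with -inf.
        scores = [sum(qi * ki for qi, ki in zip(q, keys[s])) / math.sqrt(d) for s in range(t + 1)]
        m = max(scores)
        exps = [math.exp(x - m) for x in scores]  # numerically stable softmax
        total = sum(exps)
        weights = [e / total for e in exps]
        out = [sum(w * values[s][j] for s, w in enumerate(weights)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Three toy token embeddings (hypothetical), used as queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in causal_attention(x, x, x):
    print([round(v, 3) for v in row])
```

Changing the last token leaves the first two outputs untouched, which is exactly the property that lets these models generate left to right.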

Jukebox (OpenAI)

A raw audio autoregressive model.

Trained on 1.2M songs with lyrics and metadata.

Can produce singing voices, genre-accurate harmonies, and highly expressive audio.
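Raw audio has far too many samples per second to model one sample at a time, so Jukebox first compresses audio with a VQ-VAE: an encoder turns short windows of audio into vectors, and each vector is replaced by the index of its nearest entry in a learned codebook. The autoregressive prior then predicts those indices instead of raw samples. The sketch below shows only the nearest-codebook lookup, with a tiny hand-written codebook standing in for a learned one; the encoder outputs, codebook values, and function names are hypothetical.

```python
def quantize(frames, codebook):
    """Map each feature vector to the index of its nearest codebook entry (squared L2 distance)."""
    tokens = []
    for f in frames:
        dists = [sum((a - b) ** 2 for a, b in zip(f, c)) for c in codebook]
        tokens.append(min(range(len(codebook)), key=dists.__getitem__))
    return tokens


def dequantize(tokens, codebook):
    """Look indices back up; in Jukebox a decoder network turns these into audio."""
    return [codebook[t] for t in tokens]


# Hypothetical 4-entry codebook of 2-D vectors (Jukebox's learned codebooks are much larger).
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Hypothetical encoder outputs for four short audio windows.
frames = [[0.1, -0.1], [0.9, 0.2], [0.2, 0.8], [0.7, 0.9]]
tokens = quantize(frames, codebook)
print(tokens)  # → [0, 1, 2, 3]
```

The resulting integer sequence is what the autoregressive model learns to continue, just like MIDI tokens in the symbolic case.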

DeepBach (Sony CSL)

Specializes in Bach-style chorales.

Outputs MIDI that mimics real baroque harmony and counterpoint.

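Designed to be steerable: users can fix notes or other constraints and let the model fill in the rest. Strictly speaking, it samples with a pseudo-Gibbs procedure rather than strictly left to right, but each note is still predicted from its musical context.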
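A toy version of DeepBach-style sampling looks like this: pick a free position, resample it given the notes on both sides, and never touch user-fixed notes. In this sketch, DeepBach's neural network is replaced by a hand-written scoring function (`toy_conditional`, hypothetical) and the four chorale voices are collapsed into a single line; only the sampling loop and the fixed-note constraint mirror the idea.

```python
import random


def toy_conditional(seq, i, pitches):
    """Hypothetical stand-in for DeepBach's network: favor candidate pitches
    close to the left and right neighbors of position i."""
    neighbors = [seq[j] for j in (i - 1, i + 1) if 0 <= j < len(seq)]
    return [1.0 / (1 + sum(abs(p - n) for n in neighbors)) for p in pitches]


def pseudo_gibbs(seq, fixed, pitches, steps, rng):
    """Repeatedly resample one free position given its context; fixed positions never change."""
    seq = list(seq)
    free = [i for i in range(len(seq)) if i not in fixed]
    for _ in range(steps):
        i = rng.choice(free)
        weights = toy_conditional(seq, i, pitches)
        seq[i] = rng.choices(pitches, weights=weights, k=1)[0]
    return seq


pitches = list(range(60, 73))  # C4..C5
rng = random.Random(1)
start = [rng.choice(pitches) for _ in range(8)]
start[0], start[7] = 60, 67  # user constraints: fix the first and last notes
print(pseudo_gibbs(start, fixed={0, 7}, pitches=pitches, steps=200, rng=rng))
```

Because positions are revisited in random order and see context on both sides, constraints anywhere in the piece can shape the notes around them.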

Pros and Cons of Using Autoregressive Models to Create AI Music

| Pros | Cons |
|---|---|
| Can learn and emulate complex musical structure | Slow generation speed, especially for audio |
| Works well with minimal input or prompts | Prone to repetition or “looping” without fine-tuning |
| Compatible with a wide range of genres | May require coding knowledge or setup |
| Enables highly coherent melodies and progressions | Limited real-time generation capability in most cases |
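Looping is often handled at sampling time rather than by retraining. One common decoding trick, shown here as a swapped-in illustration rather than something the models above are documented to use, is a repetition penalty: scores of recently used tokens are reduced before sampling. This sketch follows a common formulation in which a positive score is divided by the penalty and a negative score multiplied by it; the function name and the `penalty` and `window` defaults are assumptions.

```python
def apply_repetition_penalty(logits, history, penalty=1.2, window=16):
    """Return a copy of `logits` with recently used tokens made less likely.

    logits:  raw scores, one per token id
    history: token ids generated so far
    penalty: > 1.0; larger values discourage repeats more (hypothetical default)
    window:  how many recent tokens count as "recent" (hypothetical default)
    """
    adjusted = list(logits)
    for tok in set(history[-window:]):
        if adjusted[tok] > 0:
            adjusted[tok] /= penalty  # shrink a positive score toward zero
        else:
            adjusted[tok] *= penalty  # push a negative score further down (zero stays zero)
    return adjusted


logits = [2.0, 1.0, -0.5, 0.5]
print(apply_repetition_penalty(logits, history=[0, 2, 0]))
```

Tokens 0 and 2 become less likely while tokens 1 and 3 keep their scores; the adjusted list is then passed to the usual sampling step.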

Use Cases: Where Autoregressive AI Music Models Shine

Composing Film Scores

AI can extend a human-made melody or chord progression into a full-length orchestral score.

Music Education Tools

Platforms powered by these models help students see how music evolves note by note, with interactive feedback.

Creative Collaborations

Artists use models like MuseNet to generate base tracks and then edit them in a DAW.

Background Audio for Content

Symbolic outputs from Music Transformer or DeepBach are easy to adapt into game music, YouTube scores, or podcasts.

Music Theory Analysis

Autoregressive models trained on classical music can shed light on compositional structure and pattern formation.

How to Create AI Music with Autoregressive Models (Step-by-Step)

Choose a Platform or Tool

For symbolic generation, use:

MuseNet (through OpenAI’s browser-based demo, where still available)

Magenta’s Music Transformer (via Colab notebooks)

AIVA (uses a hybrid of autoregressive models)

For raw audio, try:

Jukebox (requires a GPU setup or a Hugging Face wrapper)

Input Your Seed

Start with a simple melody, a chord progression, or even a few lines of lyrics (for Jukebox). The model will continue from there.

Adjust Generation Parameters

Tweak temperature (for creativity), length, and instrument settings. Higher temperature gives more experimental outputs; lower temperature gives safer, more predictable ones.

Generate and Review

Let the model complete the piece. With MIDI models, export to a DAW to polish. With raw audio, edit with tools like Audacity.

Refine Output

AI music is rarely perfect on the first pass. Edit the melody, shift timing, or change instrumentation.
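The seed, temperature, and length controls from these steps fit together in one short generation loop. In the sketch below, `next_token_logits` is a hypothetical stand-in for whatever model you use (it returns one score per token given the history so far), and `toy_model` is an invented example. Temperature divides the scores before the softmax, so values above 1.0 flatten the distribution (more experimental) and values below 1.0 sharpen it (safer).

```python
import math
import random


def sample_with_temperature(logits, temperature, rng):
    """Sample one token id from softmax(logits / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    weights = [math.exp(x - m) for x in scaled]  # unnormalized, numerically stable softmax
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def generate(seed, length, temperature, next_token_logits, rng):
    """Extend `seed` one token at a time until it reaches `length` tokens."""
    tokens = list(seed)
    while len(tokens) < length:
        logits = next_token_logits(tokens)  # model call: scores conditioned on everything so far
        tokens.append(sample_with_temperature(logits, temperature, rng))
    return tokens


# Hypothetical toy "model" over a 4-token vocabulary: it strongly prefers
# stepping to the next token id, wrapping around (a rising-scale pattern).
def toy_model(history):
    logits = [0.0] * 4
    logits[(history[-1] + 1) % 4] = 3.0
    return logits


rng = random.Random(42)
print(generate([0], length=8, temperature=0.5, next_token_logits=toy_model, rng=rng))
print(generate([0], length=8, temperature=2.0, next_token_logits=toy_model, rng=rng))
```

At low temperature the output almost always follows the rising pattern; at high temperature it wanders more. With a real model, `toy_model` would be replaced by the network’s forward pass, while the loop structure stays essentially the same.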

Comparison Table: Autoregressive vs Non-Autoregressive AI Music Models

| Feature | Autoregressive | Non-Autoregressive |
|---|---|---|
| Output Flow | Token by token | Parallel (often the full clip at once) |
| Examples | MuseNet, Jukebox, DeepBach | DiffWave, Riffusion |
| Strengths | Musical coherence, logical phrasing | Fast generation, high-fidelity audio synthesis |
| Limitations | Slow generation, memory intensive | May lack long-term structure |
| Control | High with prompts | Lower unless fine-tuned |

Frequently Asked Questions

What is an autoregressive model in AI music?

It’s a type of model that generates each musical token based on the previous ones, mimicking how music builds naturally over time.

Can I use autoregressive models without coding?

Yes. Web platforms such as AIVA let you compose without any technical skills, and MuseNet was offered through a browser-based demo that required no coding.

Which is better: MuseNet or Jukebox?

MuseNet is better for editable MIDI files. Jukebox is ideal if you want full audio with lyrics, but it’s more resource-intensive.

Are the outputs royalty-free?

It depends on the platform, and terms can change, so check each tool’s license before publishing. MuseNet outputs are typically royalty-free, but Jukebox’s training data may carry copyright restrictions.

Do these models support live music generation?

Not reliably. Autoregressive models are often too slow for real-time use unless optimized significantly.
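A quick calculation shows why. The Jukebox paper describes three levels of audio codes with hop lengths of 8, 32, and 128 samples at 44.1 kHz; the sketch below turns those figures into how many tokens each level must produce, one after another, per second of music. Treat it as back-of-the-envelope arithmetic, not a benchmark.

```python
SAMPLE_RATE = 44_100  # audio samples per second
HOP_LENGTHS = {"bottom": 8, "middle": 32, "top": 128}  # audio samples per code, per level

for level, hop in HOP_LENGTHS.items():
    tokens_per_second = SAMPLE_RATE / hop
    # To keep up with playback, each token must be generated within this many milliseconds.
    budget_ms = 1000 / tokens_per_second
    print(f"{level:>6}: {tokens_per_second:8.1f} tokens/s, {budget_ms:.3f} ms per token")
```

Even the coarsest level needs about 345 sequential predictions per second of audio, and the finest needs over 5,500, each requiring a full model forward pass. Sustaining that within a fraction of a millisecond per token is very demanding, which is why real-time autoregressive audio usually calls for heavy optimization.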

Conclusion: Building Musical Futures One Note at a Time

Creating AI music with autoregressive models is a form of digital composition in which the machine listens to its own memory, predicts what comes next, and transforms data into expressive sound.

From MuseNet’s MIDI symphonies to Jukebox’s genre-blending audio masterpieces, autoregressive models offer unparalleled musical flow and realism. While slower and more compute-heavy than diffusion-based models, they excel at producing music that feels like it has a soul.

Whether you’re a hobbyist, a film composer, or a curious technologist, now is the perfect time to dive into the world of autoregressive AI music and discover how machines are learning to think in melody.
