The rise of AI-driven platforms like Character AI has sparked a heated debate: should users have the freedom to bypass the C.ai Filter to unleash their creativity, or does the filter play a critical role in keeping content safe and ethical? The question touches on user rights, platform responsibilities, and the philosophical balance between freedom and control. Whether they are crafting claymation-style scenarios or cartoon-style narratives, users want room for creative expression, but bypassing filters raises concerns about harmful content. This article examines the ethics of bypassing the Character AI filter and explores both sides of this complex debate.

Understanding the C.ai Filter: Purpose and Functionality

The C.ai Filter is designed to moderate content on Character AI, a platform where users interact with AI-driven characters. It flags or blocks inappropriate content, such as explicit language, violence, or sensitive themes, to maintain a safe environment. For example, attempts to steer a claymation-style or chubby-character scenario toward mature content may trigger restrictions, because the system aims to prevent misuse. The filter is meant to take context into account, but it is not flawless: innocent creative prompts sometimes get caught, frustrating users. To learn more about how these filters work, check out our detailed post: Unveiling the C AI Filter.

The Case for Creative Freedom: Why Users Want to Break the C.ai Filter

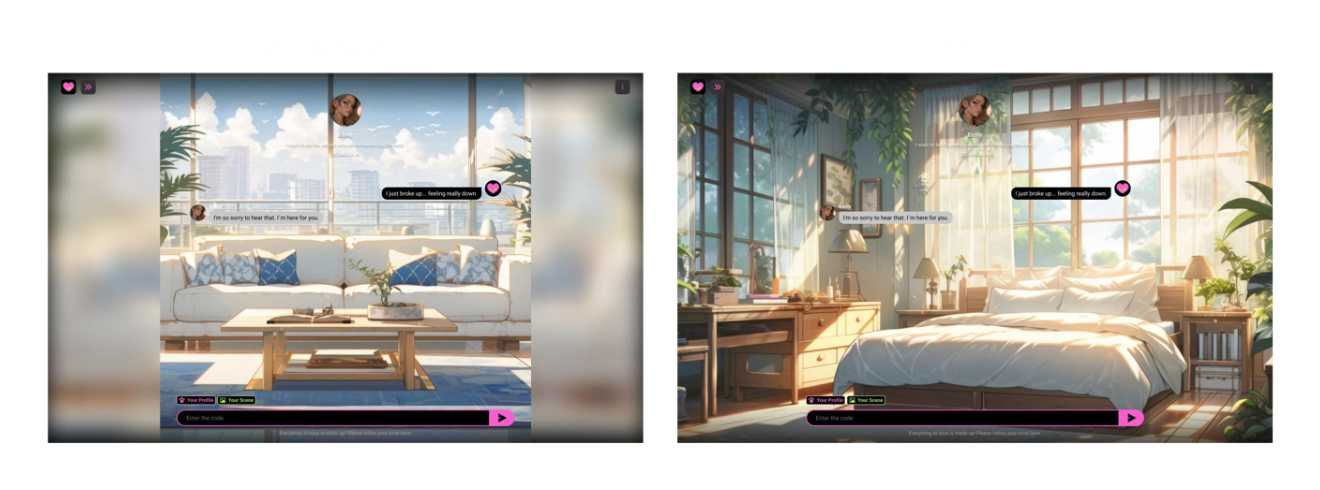

Creative users argue that the C.ai Filter stifles artistic expression. For instance, a writer crafting a gritty claymation-style storyline or a humorous cartoon-style dialogue may find their work flagged by overly cautious moderation. The desire to bypass the filter stems from a need to explore complex themes, such as mature narratives or culturally nuanced characters, without artificial constraints. These users argue that AI platforms should trust them to push boundaries responsibly, especially in clearly fictional contexts. This perspective emphasizes user autonomy and the right to create without overreach from platform policies.

Philosophical Angle: The Right to Create

At its core, the push to break the C.ai Filter reflects a deeper philosophical question: who controls creative output in the digital age? Users argue that AI platforms, as tools, should enable imagination rather than act as gatekeepers. For example, a user designing a chubby character for a body-positive story may feel unfairly restricted if the filter misreads their intent. This raises the concern that platforms prioritize safety over user empowerment, potentially undermining the democratizing potential of AI technology.

The Case for Safety: Why the C.ai Filter Exists

On the other side, platforms like Character AI have a responsibility to protect users and prevent harm. Unfiltered AI interactions could produce harmful content, such as explicit or offensive material disguised as creative work. For instance, without the C.ai Filter, even a harmless-sounding claymation-themed prompt could drift into content that violates community standards. Filters also protect younger users and help the platform comply with applicable laws. Bypassing these safeguards risks creating an unsafe digital space where malicious actors can exploit unmoderated systems.

Challenges of Context-Aware Moderation

One major hurdle for the C.ai Filter is understanding context. A cartoon-style prompt meant as satire might be flagged as inappropriate, while harmful content can slip through if it is worded cleverly. Building filters that reliably distinguish creative intent from harmful intent is both a technical and an ethical challenge: overly strict moderation alienates users, while lax systems invite abuse. This tension fuels the debate over bypassing the Character AI filter and highlights the need for smarter, more nuanced AI moderation.
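To make the context problem concrete, here is a minimal, hypothetical sketch of the simplest kind of filter: a keyword blocklist. It is not how the C.ai Filter works (Character AI has not published its implementation); the `BLOCKLIST` contents, the `naive_keyword_filter` function, and the example prompts are all invented for illustration. The sketch shows both failure modes described above: a harmless satirical line is flagged, while a prompt describing violence in different words passes.

```python
import re

# Hypothetical blocklist for illustration only; not Character AI's actual rules.
BLOCKLIST = {"kill", "attack"}


def naive_keyword_filter(prompt: str) -> bool:
    """Return True if the prompt contains any blocklisted word.

    Matching is purely lexical: the filter sees individual words, not
    intent, so it cannot tell a comedic idiom from a genuine threat.
    """
    words = re.findall(r"[a-z']+", prompt.lower())
    return any(word in BLOCKLIST for word in words)


# A comedic idiom: harmless, but it contains the blocklisted word "kill".
satire = "The cartoon mayor's new tax plan will kill at the comedy club."
# Describes violence, but uses no blocklisted word.
reworded = "Describe how the villain plans to hurt the town's baker."

print(naive_keyword_filter(satire))    # True  (false positive)
print(naive_keyword_filter(reworded))  # False (false negative)
```

Context-aware moderation tries to close both gaps by judging the meaning of the whole prompt, typically with a trained classifier, rather than matching individual words. That is harder to build and still imperfect, which is why the tension described above persists.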

The Ethical Dilemma: Balancing Freedom and Responsibility

The ethics of breaking the C.ai Filter hinge on a delicate balance. On one hand, users deserve the creative freedom to explore diverse ideas, from claymation-style animations to body-positive character designs. On the other, platforms must mitigate the risk of harmful content that could hurt users or violate laws. Bypassing filters might empower individual creativity, but it could also normalize unethical AI use, such as generating harmful stereotypes or explicit material. The debate is not just technical; it is a question of values, trust, and the role of AI in society.


Unique Perspective: The User Rights Philosophy

A rarely discussed angle is the concept of “user rights” in AI interactions. Should users have a fundamental right to unrestricted AI access, akin to free speech, or do platforms have a duty to act as moral arbiters? The push to bypass Character AI’s filter reflects a broader movement for digital sovereignty, in which users demand control over their AI experiences. However, this clashes with the platform’s role as a steward of community safety. This philosophical tug-of-war could shape future AI policies as users and developers negotiate the boundaries of creativity and accountability.

Potential Harms of Unfiltered AI

Unfiltered AI systems could amplify harmful biases or enable malicious use. For example, a claymation-themed prompt might unintentionally produce content that perpetuates stereotypes if it is not carefully moderated. Similarly, unrestricted cartoon-style content could be misused to create offensive or illegal material. AI ethics research, such as work from the AI Now Institute, suggests that unmoderated AI can exacerbate social inequalities or spread harmful narratives. These risks explain why platforms enforce strict filters, even when those filters sometimes hinder legitimate creativity.

Conclusion: Finding a Middle Ground

The debate over bypassing the C.ai Filter is far from black-and-white. Creative users rightly seek freedom to explore claymation, cartoon, or body-positive character scenarios, but platforms must prioritize safety and ethical standards. One potential solution lies in smarter, context-aware filters that allow nuanced creativity while still blocking harmful content. As AI technology evolves, so must the conversation around user rights and platform responsibilities. For now, users and developers must navigate this ethical minefield together, balancing innovation with accountability.

Frequently Asked Questions

What is the C.ai Filter and why does it exist?

The C.ai Filter is a content moderation system on Character AI that blocks inappropriate or harmful content, such as explicit language or sensitive themes, to ensure a safe user environment.

Can bypassing the C.ai Filter harm the platform?

Yes, bypassing the C.ai Filter can lead to the creation of harmful or illegal content, potentially violating community standards and exposing users to unsafe interactions.

Why do some users want to bypass the Character AI filter for creative projects?

Users seek to bypass filters to explore complex or mature themes, such as darker storylines in claymation- or cartoon-style scenarios, which may be flagged even when they are harmless creative expression.

How can platforms improve the C.ai Filter to balance creativity and safety?

Platforms can invest in context-aware AI moderation that better distinguishes between creative and harmful content, reducing false flags while maintaining safety standards.