Witness the groundbreaking advancement in artificial intelligence and robotics as the MindLoongGPT 7B Parameter AI Model demonstrates unprecedented capabilities in controlling humanoid robot movements with remarkable precision and adaptability. This innovative language model, specifically optimized for robotic control applications, represents a significant leap forward in human-machine interaction by translating natural language commands into complex physical movements. Researchers have successfully implemented this 7-billion-parameter foundation model to enable humanoid robots to perform intricate tasks ranging from delicate object manipulation to fluid locomotion across varied terrains, opening new possibilities for applications in manufacturing, healthcare, disaster response, and everyday assistance.

MindLoongGPT 7B Parameter AI Model: Revolutionizing Humanoid Robot Control Systems

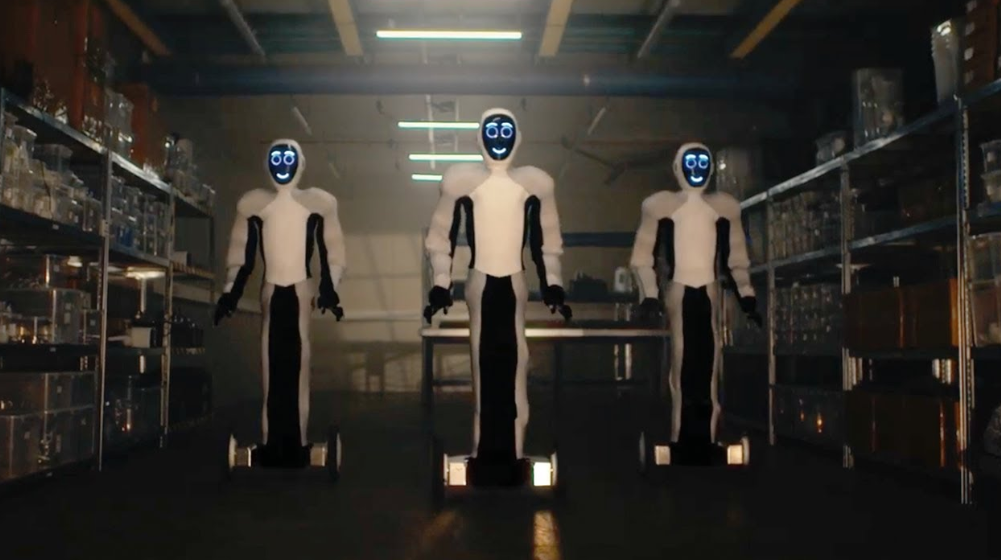

The robotics world is absolutely buzzing with excitement! A groundbreaking development has emerged that's set to transform how humanoid robots interact with the physical world. The MindLoongGPT 7B Parameter AI Model has demonstrated unprecedented capabilities in controlling complex humanoid robot movements through natural language processing and advanced motor control algorithms.

Unlike previous approaches that required extensive programming for each specific movement, this revolutionary AI model can interpret general commands and translate them into precise, coordinated actions. Imagine simply telling a robot to "carefully pick up that fragile vase and place it on the high shelf" – and watching as the machine executes the task with human-like dexterity and spatial awareness!

The key innovation behind MindLoongGPT lies in its unique architecture that bridges the gap between language understanding and physical control. Traditional language models excel at generating text but struggle to connect words with physical actions in three-dimensional space. MindLoongGPT was specifically designed to overcome this limitation through an innovative approach that maps linguistic concepts directly to motor control parameters.

During a recent demonstration at the International Robotics Summit, observers watched in amazement as a humanoid robot controlled by MindLoongGPT navigated a complex obstacle course, responded to unexpected changes in its environment, and performed tasks requiring fine motor skills – all directed through conversational commands rather than pre-programmed routines.

"What we're witnessing is a fundamental shift in human-machine interaction," explained Dr. Lin Wei, lead researcher on the project. "Previous systems required humans to learn the robot's language through specific commands or programming interfaces. With MindLoongGPT, the robot learns to understand human language instead, making the technology accessible to non-specialists."

The implications for industries ranging from manufacturing to healthcare are profound. Robots equipped with this technology could assist surgeons in operating rooms, help elderly individuals with daily tasks, work alongside humans in complex manufacturing environments, or venture into disaster zones too dangerous for human responders – all while being controlled through intuitive verbal instructions.

How the MindLoongGPT 7B Parameter AI Model Translates Language to Physical Movement

Let's dive deeper into the fascinating technical architecture that makes this breakthrough possible! The MindLoongGPT 7B Parameter AI Model represents a significant evolution in how artificial intelligence systems interpret language and translate it into physical actions.

At its core, MindLoongGPT builds on a transformer-based language model architecture, similar to other large language models, but with crucial modifications specifically designed for robotic control applications. The "7B parameter" designation refers to the approximately 7 billion learned parameters (weights) that define the model's neural network, a size carefully chosen to balance sophisticated reasoning capabilities against the practical constraints of real-time robotics applications.
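The parameter count translates directly into hardware requirements. The sketch below is simple arithmetic, assuming "7B" means exactly 7e9 parameters and counting only the weights (activations, caches, and runtime overhead are ignored, and the source does not say which numeric precision MindLoongGPT actually runs at):

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in GiB (2**30 bytes)."""
    return num_params * bytes_per_param / 2**30


if __name__ == "__main__":
    n_params = 7e9  # assumption: "7B" read as exactly 7 billion parameters
    for precision, nbytes in BYTES_PER_PARAM.items():
        print(f"{precision:>9}: {weight_memory_gib(n_params, nbytes):5.1f} GiB")
```

At 16-bit precision the weights alone come to roughly 13 GiB, and about half that at 8-bit, which gives a sense of why model size matters for hardware that has to ride on the robot itself.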

The system operates through a multi-stage process that transforms natural language into robotic movement:

Stage 1: Contextual Understanding

When a command is issued, MindLoongGPT first processes the language to understand not just the literal instruction but the contextual implications. For example, if instructed to "hand me that cup," the system recognizes that this requires identifying the cup, understanding where "me" is located, determining the appropriate grip strength for a cup (versus, say, a brick), and planning a trajectory that avoids obstacles.

This contextual understanding is achieved through a specialized attention mechanism that prioritizes spatial and physical relationships in the language processing stage. The model was trained on a massive corpus that included not just text but paired language-action datasets, allowing it to develop strong associations between verbal descriptions and physical movements.
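The source does not describe how the attention mechanism is modified. One common way to make attention favor particular inputs is an additive bias on the attention logits; the sketch below shows that idea for a single query in plain Python. The `spatial_bias` helper, its tag set, and the toy embeddings are hypothetical illustrations, not MindLoongGPT's actual design:

```python
import math
from typing import List, Sequence, Set, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def biased_attention(
    query: Sequence[float],
    keys: Sequence[Sequence[float]],
    values: Sequence[Sequence[float]],
    bias: Sequence[float],
) -> Tuple[List[float], List[float]]:
    """Single-query scaled dot-product attention with an additive per-key bias.

    The bias is added to the logits before the softmax, so a positive bias
    raises the share of attention that key receives.
    """
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d) + b
        for key, b in zip(keys, bias)
    ]
    weights = softmax(scores)
    output = [
        sum(w * v[j] for w, v in zip(weights, values))
        for j in range(len(values[0]))
    ]
    return weights, output


def spatial_bias(tokens: Sequence[str], spatial_words: Set[str], bonus: float = 1.0) -> List[float]:
    """Hypothetical bias: +bonus for tokens tagged as spatial/physical, else 0."""
    return [bonus if t in spatial_words else 0.0 for t in tokens]


if __name__ == "__main__":
    tokens = ["hand", "me", "that", "cup"]
    # Toy 2-D embeddings; the keys are identical so only the bias differs.
    keys = [[1.0, 0.0]] * len(tokens)
    values = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
    bias = spatial_bias(tokens, {"me", "cup"})
    weights, _ = biased_attention([1.0, 0.0], keys, values, bias)
    for tok, w in zip(tokens, weights):
        print(f"{tok:>5}: {w:.3f}")
```

Because the keys are identical here, the bias is the only thing separating the tokens, so "me" and "cup" each receive a larger share of attention than "hand" and "that".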

Stage 2: Environmental Mapping

Once the instruction is understood, MindLoongGPT integrates data from the robot's sensors to create a real-time environmental map. This includes visual information from cameras, spatial data from LIDAR or depth sensors, and proprioceptive feedback that indicates the robot's current physical state.

What makes this stage particularly impressive is the model's ability to reconcile linguistic concepts with visual perception. If told to "move the red box," the system can identify objects in its visual field that match the description "red box" even if it has never seen that specific box before, demonstrating true generalization capabilities rather than mere memorization of objects.
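As a rough illustration of the grounding step, the sketch below matches a phrase like "move the red box" against a list of detections. It is a deliberately symbolic stand-in: the `Detection` type and the scoring rule are hypothetical, and a learned system that generalizes to never-seen objects would compare learned embeddings rather than exact words:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    """One object reported by a (hypothetical) perception module."""
    label: str          # object category, e.g. "box"
    color: str          # dominant color estimate, e.g. "red"
    confidence: float   # detector confidence in [0, 1]


def ground_phrase(phrase: str, detections: List[Detection]) -> Optional[Detection]:
    """Pick the detection that best matches a short noun phrase.

    A detection is a candidate only if its category word appears in the phrase.
    A matching color word adds a fixed bonus, so "red box" prefers a red box
    over a more confidently detected box of another color. Returns None when
    no category matches.
    """
    words = set(phrase.lower().split())
    best: Optional[Detection] = None
    best_score = float("-inf")
    for det in detections:
        if det.label not in words:
            continue
        score = det.confidence + (1.0 if det.color in words else 0.0)
        if score > best_score:
            best, best_score = det, score
    return best


if __name__ == "__main__":
    scene = [
        Detection("box", "blue", 0.9),
        Detection("box", "red", 0.7),
        Detection("cup", "red", 0.95),
    ]
    print(ground_phrase("move the red box", scene))
```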

Stage 3: Motion Planning

With a clear understanding of both the command and the environment, MindLoongGPT generates a detailed motion plan. This isn't simply a matter of plotting a straight-line path; the system considers joint constraints, balance requirements, optimal energy usage, and safety parameters.

The motion planning module benefits from extensive reinforcement learning training in simulated environments, where the AI learned to optimize movements through millions of virtual trials before ever controlling a physical robot. This simulation-to-reality pipeline allowed researchers to safely train the system on challenging scenarios that would be impractical or dangerous to attempt with physical hardware during the development phase.
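The joint-constraint part of motion planning can be sketched in a few lines. This minimal example assumes straight-line motion in joint space with per-joint position and velocity limits; it ignores balance, energy, and collision checks, which a real humanoid planner would also have to handle, and the function name and parameters are hypothetical:

```python
import math
from typing import List, Sequence, Tuple


def plan_joint_trajectory(
    start: Sequence[float],
    goal: Sequence[float],
    joint_limits: Sequence[Tuple[float, float]],
    max_velocity: Sequence[float],
    dt: float,
) -> List[List[float]]:
    """Straight-line joint-space trajectory respecting position and velocity limits.

    The goal is clamped into each joint's range, and the number of control
    ticks is chosen so that no joint moves faster than its max_velocity.
    """
    clamped = [min(max(g, lo), hi) for g, (lo, hi) in zip(goal, joint_limits)]
    # The joint that needs the most ticks at its velocity limit sets the duration.
    steps = max(
        math.ceil(abs(g - s) / (v * dt))
        for s, g, v in zip(start, clamped, max_velocity)
    )
    steps = max(steps, 1)
    return [
        [s + (g - s) * i / steps for s, g in zip(start, clamped)]
        for i in range(steps + 1)
    ]


if __name__ == "__main__":
    traj = plan_joint_trajectory(
        start=[0.0, 0.0],
        goal=[1.0, 3.0],                      # joint 2 goal exceeds its limit
        joint_limits=[(-1.0, 1.0), (-2.0, 2.0)],
        max_velocity=[1.0, 1.0],              # rad/s
        dt=0.1,                               # s per control tick
    )
    print(len(traj), traj[-1])
```

Slowing every joint to the pace of the one that needs the longest time keeps the motion coordinated, so all joints arrive at the goal on the same tick.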

Stage 4: Execution and Adaptation

As the robot begins executing the planned movements, MindLoongGPT continuously monitors progress through a feedback loop, comparing expected outcomes with actual sensor data. If discrepancies arise (perhaps an object is heavier than anticipated, or shifts slightly as the robot approaches it), the system can dynamically adjust its plan in real time.

This adaptive capability represents one of the most significant advances over previous systems, which typically followed rigid execution patterns and struggled to recover from unexpected situations. MindLoongGPT's approach more closely mimics human movement control, which seamlessly integrates planning with continuous sensory feedback.
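The execute, monitor, and adjust loop described above can be illustrated with a toy one-joint example. Everything here is hypothetical: `SimJoint` stands in for real hardware, a proportional correction stands in for whatever adjustment MindLoongGPT performs, and a fixed error threshold decides when to hand control back to the planner:

```python
from typing import Callable, Optional, Sequence


class SimJoint:
    """Toy 1-D joint. efficiency < 1 mimics a load heavier than the plan assumed."""

    def __init__(self, efficiency: float) -> None:
        self.position = 0.0
        self.efficiency = efficiency

    def read(self) -> float:
        return self.position

    def command(self, setpoint: float) -> None:
        # The joint only covers `efficiency` of the distance to the setpoint.
        self.position += self.efficiency * (setpoint - self.position)


def execute_with_feedback(
    waypoints: Sequence[float],
    read_sensor: Callable[[], float],
    send_command: Callable[[float], None],
    kp: float = 0.5,
    replan_threshold: float = 0.2,
) -> Optional[int]:
    """Track waypoints with proportional correction.

    Returns None if the whole plan was executed, or the index of the waypoint
    at which the tracking error exceeded replan_threshold, signalling that
    control should go back to the planner.
    """
    for i, target in enumerate(waypoints):
        error = target - read_sensor()          # expected vs. measured
        if abs(error) > replan_threshold:
            return i
        send_command(target + kp * error)       # nudge the setpoint to close the gap
    return None


if __name__ == "__main__":
    plan = [i / 10 for i in range(1, 11)]
    for eff in (1.0, 0.3):
        joint = SimJoint(efficiency=eff)
        print(eff, execute_with_feedback(plan, joint.read, joint.command))
```

With `efficiency=1.0` the tracking error stays small and the plan completes; with `efficiency=0.3` (a load much heavier than planned) the error grows until the loop returns a waypoint index so the planner can revise the plan.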

| Capability | MindLoongGPT 7B | Previous Robot Control Systems |
|---|---|---|
| Command Interface | Natural language | Specialized programming or limited command sets |
| Adaptability to New Tasks | Can perform novel tasks from verbal descriptions | Requires reprogramming for new tasks |
| Real-time Adaptation | Continuous adjustment based on feedback | Limited or no real-time adaptation |
| Generalization | Can apply learned skills to new situations | Limited to specifically programmed scenarios |

The technical achievements behind MindLoongGPT represent years of interdisciplinary research combining insights from linguistics, robotics, computer vision, and reinforcement learning. The result is a system that doesn't just bridge the gap between language and physical action – it fundamentally reimagines how humans can communicate with and control sophisticated machines.

Real-World Applications of the MindLoongGPT 7B Parameter AI Model in Robotics

The capabilities of the MindLoongGPT 7B Parameter AI Model extend far beyond impressive tech demonstrations: they open up exciting possibilities across numerous industries and use cases. Let's explore some of the most promising applications already being developed or tested:

Manufacturing and Warehouse Operations

In manufacturing environments, the flexibility offered by MindLoongGPT-controlled robots is revolutionizing production lines. Traditional industrial robots require extensive reprogramming when product specifications change, creating costly downtime. Humanoid robots powered by MindLoongGPT can be redirected to new tasks through simple verbal instructions.

A pilot program at an electronics assembly plant demonstrated this advantage when a production manager was able to instruct a robot to "temporarily help with packaging at station three instead of component insertion" during an unexpected staff shortage. The robot seamlessly switched tasks without any programming intervention, completing the new assignment with minimal guidance.

Warehouse operations benefit similarly from this flexibility. Robots can be instructed to reorganize storage systems, retrieve specific items, or adapt to changing inventory management strategies through natural conversation rather than complex reprogramming. This dramatically reduces the technical expertise required to manage robotic systems and allows for more agile responses to changing business needs.

Healthcare and Assistance

Perhaps the most touching applications of MindLoongGPT-controlled humanoid robots are emerging in healthcare and assistance contexts. Robots equipped with this technology are being tested as helpers for elderly individuals and people with mobility limitations, offering support with daily tasks while understanding nuanced requests.

In clinical trials at assisted living facilities, residents have successfully directed robots to "bring my blue pill from the bathroom cabinet and a glass of water," "help me stand up slowly," or "adjust my pillow to make it more comfortable." The natural language interface proves particularly valuable for elderly users who may struggle with complex digital interfaces or specialized commands.

In hospital settings, robots controlled by MindLoongGPT are being evaluated for tasks ranging from delivering medications to assisting with patient mobility. The system's ability to understand contextual instructions makes it well-suited to the dynamic healthcare environment, where needs can change rapidly and tasks often require careful adaptation to individual patients' conditions.

Disaster Response and Hazardous Environments

When human safety is at risk, remotely operated humanoid robots offer compelling advantages. MindLoongGPT significantly enhances these systems by allowing operators to issue complex instructions through natural language rather than controlling each movement manually.

During a simulated nuclear facility emergency response exercise, operators successfully directed a humanoid robot to "enter the contaminated area, locate the pressure gauge on the west wall, and report the reading," followed by "turn the emergency release valve one quarter turn counterclockwise." The robot navigated the environment, identified the relevant equipment, and performed precise manipulations based on these high-level instructions.

Similar applications are being developed for firefighting, chemical spill response, and search and rescue operations. The intuitive control interface allows specialists to focus on the emergency response strategy rather than the mechanics of robot operation, potentially saving crucial minutes in time-sensitive situations.

Research and Exploration

Scientific research in extreme environments – from deep ocean exploration to planetary missions – stands to benefit tremendously from MindLoongGPT-controlled robots. The ability to direct complex sampling procedures, equipment setup, or exploratory activities through natural language commands enables scientists to conduct sophisticated research remotely.

A marine biology team recently tested a waterproof humanoid robot controlled by MindLoongGPT for coral reef monitoring. Researchers were able to instruct the robot to "collect a small sample from the pale section of the coral formation at coordinates X,Y" and "measure water temperature and acidity at three different depths around the formation." The system successfully translated these requests into precise underwater movements and scientific procedures.

Space exploration represents another frontier where this technology shows immense promise. The communication delays involved in controlling robots on other planetary bodies make autonomous operation essential, but mission parameters often need to be adjusted as new discoveries emerge. MindLoongGPT offers a middle ground where astronauts or mission controllers can provide high-level guidance that the system translates into appropriate actions based on local conditions.

Training and Development Journey of the MindLoongGPT 7B Parameter AI Model

The creation of such a sophisticated AI system didn't happen overnight! The development journey of MindLoongGPT represents a fascinating case study in how modern AI systems evolve from concept to revolutionary technology. Let's take a behind-the-scenes look at how this remarkable system was trained and refined.

The development team began with a foundation model architecture similar to other large language models but made critical modifications to optimize it for robotic control applications. The 7-billion-parameter size was carefully chosen after extensive experimentation: larger models offered marginally better language understanding but introduced latency issues that compromised real-time control, while smaller models lacked the sophistication needed for complex reasoning about physical tasks.
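The latency side of this trade-off can be estimated with a standard rule of thumb: when generating one token at a time, a language model is usually limited by memory bandwidth, because every weight must be read once per token. The sketch below applies that rule; the 200 GB/s bandwidth and the list of model sizes are hypothetical inputs, not figures from the MindLoongGPT team:

```python
def min_ms_per_token(num_params: float, bytes_per_param: float, bandwidth_gb_s: float) -> float:
    """Lower bound on autoregressive decode time per token, in milliseconds.

    Assumes decoding is memory-bandwidth bound: every weight is streamed from
    memory once per generated token; compute time, caching, and batching are ignored.
    """
    bytes_per_token = num_params * bytes_per_param
    seconds = bytes_per_token / (bandwidth_gb_s * 1e9)
    return seconds * 1e3


if __name__ == "__main__":
    BANDWIDTH_GB_S = 200.0  # hypothetical on-robot accelerator bandwidth
    for billions in (3, 7, 13, 70):  # illustrative model sizes, not from the source
        ms = min_ms_per_token(billions * 1e9, 2.0, BANDWIDTH_GB_S)
        print(f"{billions:>3}B params @ 16-bit: >= {ms:6.1f} ms/token")
```

Under these assumptions a 7B model at 16-bit precision needs at least 70 ms per generated token, and the bound grows linearly with parameter count.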

The training process occurred in several distinct phases:

Phase 1: Foundation Training

The initial training focused on general language understanding and knowledge acquisition. The model was trained on a diverse corpus of text that included:

General knowledge texts covering physics, mechanics, and spatial reasoning

Detailed descriptions of objects, their properties, and how they interact

Procedural texts explaining how to perform various physical tasks

Dialogues featuring instructions and clarifications about physical activities

This foundation training provided MindLoongGPT with a robust understanding of language and concepts related to the physical world, creating the knowledge base necessary for later specialized training.

Phase 2: Simulation-Based Movement Training

The next phase involved connecting language understanding to physical movement through extensive simulation. The team created detailed virtual environments where simulated robots attempted to carry out instructions provided in natural language.

This phase employed reinforcement learning techniques, where the system received a positive reward when it correctly interpreted instructions and executed appropriate movements. Crucially, the simulations included realistic physics modeling that accounted for factors like gravity, friction, and the mechanical limitations of robotic systems.

The simulation environment allowed researchers to present the AI with millions of scenarios – far more than would be practical with physical robots – accelerating the learning process dramatically. The system encountered everything from basic movement challenges to complex manipulation tasks in varying environmental conditions.
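The core reinforcement learning loop can be shown at toy scale. The sketch below runs tabular Q-learning on a hypothetical six-cell "reach the target" task standing in for a simulated environment; it illustrates reward-driven trial and error, not the far larger, language-conditioned training described above:

```python
import random
from typing import List, Tuple

N_STATES = 6          # cells 0..5 on a line; cell 5 is the target
ACTIONS = (-1, +1)    # move one cell left or right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Toy environment: small cost per move, reward 1.0 on reaching the target."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else -0.01), done


def train_q(episodes: int = 1000, alpha: float = 0.1, gamma: float = 0.95,
            epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap episode length
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))                      # explore
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[s][i])  # exploit
            s2, r, done = step(s, ACTIONS[a])
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])                    # TD update
            s = s2
            if done:
                break
    return q


def greedy_policy(q: List[List[float]]) -> List[int]:
    """Best action in each non-terminal cell."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[s][i])]
            for s in range(N_STATES - 1)]


if __name__ == "__main__":
    print(greedy_policy(train_q()))
```

After training, the greedy policy steps right from every cell, since that is the shortest route to the reward.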

Phase 3: Specialized Task Learning

With basic movement capabilities established, training shifted to specialized tasks requiring more sophisticated understanding and execution. These included:

Fine manipulation of various objects with different physical properties

Navigation through complex environments with obstacles

Interaction with human subjects, including safe handover of objects

Tool use for tasks ranging from simple (using a hammer) to complex (operating machinery)

This phase employed a combination of reinforcement learning and imitation learning, where the system observed human demonstrations of tasks and learned to replicate them. Motion capture technology allowed researchers to create a dataset of human movements that served as exemplars for the AI to study and emulate.
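Imitation learning in its simplest form, behavior cloning, fits a policy to recorded (state, action) pairs. A minimal sketch, assuming a one-dimensional state and a linear policy, with a hypothetical `expert_action` function standing in for motion-capture demonstrations:

```python
from typing import Sequence, Tuple


def expert_action(state: float, target: float = 1.0, gain: float = 0.5) -> float:
    """Hypothetical stand-in for a human demonstrator: move part of the way to the target."""
    return gain * (target - state)


def fit_linear_policy(states: Sequence[float], actions: Sequence[float]) -> Tuple[float, float]:
    """Behavior cloning with a 1-D linear policy a = w * s + b, fit by least squares."""
    n = len(states)
    mean_s = sum(states) / n
    mean_a = sum(actions) / n
    var_s = sum((s - mean_s) ** 2 for s in states)
    if var_s == 0:
        raise ValueError("demonstrations must cover more than one state")
    cov = sum((s - mean_s) * (a - mean_a) for s, a in zip(states, actions))
    w = cov / var_s
    return w, mean_a - w * mean_s


if __name__ == "__main__":
    demo_states = [i / 10 for i in range(10)]
    demo_actions = [expert_action(s) for s in demo_states]
    w, b = fit_linear_policy(demo_states, demo_actions)
    print(f"learned policy: a = {w:.3f} * s + {b:.3f}")
```

Because the stand-in expert is itself linear, least squares recovers it exactly (w = -0.5, b = 0.5). Real demonstrations are noisy and high-dimensional, which is why practical systems typically use neural-network policies instead.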

Phase 4: Real-World Transfer and Refinement

The final training phase addressed the critical "sim-to-real gap" – the challenge of transferring skills learned in simulation to physical robots operating in the real world. This phase involved deploying the model on actual humanoid robots and refining its performance through additional training with real-world feedback.

Researchers employed a technique called domain randomization during simulation training, where environmental parameters were randomly varied to help the model learn robust strategies that would transfer to real-world conditions. Even so, additional refinement was necessary to account for the subtleties of physical robot operation.
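Domain randomization amounts to sampling fresh simulator settings for each training episode. The sketch below shows only that sampling step; the parameter names and ranges are hypothetical, since the source does not say which properties were varied:

```python
import random
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical ranges; the source does not say which parameters were randomized.
RANGES: Dict[str, Tuple[float, float]] = {
    "friction": (0.4, 1.2),
    "object_mass_kg": (0.1, 2.0),
    "motor_strength_scale": (0.8, 1.2),
    "sensor_latency_s": (0.0, 0.05),
}


@dataclass(frozen=True)
class PhysicsParams:
    friction: float
    object_mass_kg: float
    motor_strength_scale: float
    sensor_latency_s: float


def sample_physics(rng: random.Random) -> PhysicsParams:
    """Draw one simulated world by sampling every parameter uniformly from its range."""
    return PhysicsParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


if __name__ == "__main__":
    rng = random.Random(42)
    for episode in range(3):
        params = sample_physics(rng)
        # A real training pipeline would reset the simulator with `params` here.
        print(episode, params)
```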

This phase also included extensive safety testing and the implementation of multiple failsafe mechanisms. The team developed a sophisticated monitoring system that continuously evaluates commands and planned movements, rejecting any that might pose safety risks or exceed the robot's physical capabilities.
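A monitor of the kind described might, at minimum, reject plans that exceed hard physical limits. The sketch below checks joint ranges, per-tick joint motion, and payload; `RobotLimits`, `check_plan`, and all thresholds are hypothetical, and a real safety layer would also check collisions, human proximity, and contact forces:

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class RobotLimits:
    joint_ranges: List[Tuple[float, float]]  # (min, max) angle per joint, radians
    max_joint_step: float                    # largest allowed change per control tick
    max_payload_kg: float


def check_plan(
    plan: Sequence[Sequence[float]],
    payload_kg: float,
    limits: RobotLimits,
) -> List[str]:
    """Return a list of violations; an empty list means the plan passes."""
    violations: List[str] = []
    if payload_kg > limits.max_payload_kg:
        violations.append(f"payload {payload_kg} kg exceeds limit {limits.max_payload_kg} kg")
    for t, pose in enumerate(plan):
        for j, (angle, (lo, hi)) in enumerate(zip(pose, limits.joint_ranges)):
            if not lo <= angle <= hi:
                violations.append(f"tick {t}: joint {j} at {angle} outside [{lo}, {hi}]")
    for t in range(1, len(plan)):
        for j, (prev, cur) in enumerate(zip(plan[t - 1], plan[t])):
            if abs(cur - prev) > limits.max_joint_step:
                violations.append(f"tick {t}: joint {j} moves {abs(cur - prev):.3f} in one tick")
    return violations


if __name__ == "__main__":
    limits = RobotLimits(joint_ranges=[(-1.0, 1.0), (-2.0, 2.0)],
                         max_joint_step=0.1, max_payload_kg=5.0)
    plan = [[0.0, 0.0], [0.5, 0.0], [1.5, 0.0]]
    problems = check_plan(plan, payload_kg=10.0, limits=limits)
    print("REJECT" if problems else "ACCEPT")
    for p in problems:
        print(" -", p)
```

Returning every violation rather than stopping at the first makes it easier to explain to an operator why a command was refused.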

Future Directions and Limitations of the MindLoongGPT 7B Parameter AI Model

As with any groundbreaking technology, MindLoongGPT represents not an endpoint but a stepping stone toward even more advanced systems. Let's explore both the current limitations of the technology and the exciting future developments on the horizon.

Despite its impressive capabilities, MindLoongGPT faces several important limitations in its current form:

Technical Limitations

Physical Constraints: While the AI can generate sophisticated movement plans, it remains bound by the mechanical limitations of the robots it controls. Issues like power consumption, motor precision, and physical balance continue to constrain what's possible.

Sensory Processing: The system relies heavily on the quality and reliability of sensor data. In challenging conditions like poor lighting, reflective surfaces, or environments with significant electromagnetic interference, performance can degrade.

Novel Situation Handling: Though MindLoongGPT generalizes better than previous systems, it can still struggle with entirely novel scenarios that differ significantly from its training experiences.

Computational Requirements: Running the full 7B parameter model with real-time performance requires substantial computing resources, limiting deployment in smaller robots or resource-constrained environments.

Future Development Pathways

The research team behind MindLoongGPT has outlined several promising directions for future development:

Multimodal Enhancement

The next generation of the system aims to incorporate more sophisticated visual processing capabilities, allowing robots to understand not just verbal instructions but also demonstrations, gestures, and visual cues. Imagine showing a robot how to fold a shirt once and having it immediately understand and replicate the action.

This multimodal approach would create more intuitive human-robot interaction, particularly in collaborative settings where verbal communication might be impractical due to noise or other constraints.

Continuous Learning Architecture

Future versions of MindLoongGPT will likely incorporate more sophisticated continuous learning capabilities, allowing robots to improve their performance over time based on their experiences. Rather than requiring periodic retraining by developers, these systems would gradually refine their movement strategies and understanding through ongoing operation.

This approach mimics human motor learning, where we continuously improve our physical skills through practice and experience. Implementing this capability while maintaining system stability and safety represents a significant research challenge.

Collaborative Intelligence

An exciting direction involves developing systems where multiple MindLoongGPT-controlled robots can collaborate on complex tasks, sharing information and coordinating their actions through a distributed intelligence framework.

This could enable applications like coordinated construction projects, where multiple specialized robots work together under high-level human direction, each contributing different capabilities to achieve a common goal.

Ethical and Safety Frameworks

As these systems become more capable and autonomous, developing robust ethical frameworks and safety protocols becomes increasingly important. Future research will likely focus on implementing more sophisticated ethical reasoning capabilities and failsafe mechanisms to ensure that robots operate safely in human environments.

This includes developing better methods for robots to explain their decision-making processes, allowing humans to understand why a particular action was chosen and to provide appropriate feedback.

The Broader Impact

Beyond specific technical developments, the emergence of systems like MindLoongGPT raises important questions about the future relationship between humans and intelligent machines. As robots become more capable of understanding natural human communication and performing complex physical tasks, they will increasingly serve as extensions of human capability rather than merely automated tools.

This transition offers tremendous potential benefits in areas ranging from elder care to disaster response, but also requires thoughtful consideration of the economic and social implications. How will labor markets evolve as these technologies mature? What new skills will humans need to develop to work effectively alongside increasingly capable robotic systems?

The development team behind MindLoongGPT has emphasized the importance of human-centered design principles, focusing on creating technology that augments human capabilities rather than simply replacing human workers. This philosophy guides ongoing research and development efforts, with the goal of creating systems that enhance human potential rather than diminishing it.

As we stand at the beginning of this new era in human-robot interaction, MindLoongGPT represents not just a technical achievement but a glimpse into a future where the boundary between human intention and machine action becomes increasingly seamless. The journey from concept to current capability has been remarkable, but the road ahead promises even more extraordinary developments as this technology continues to evolve.