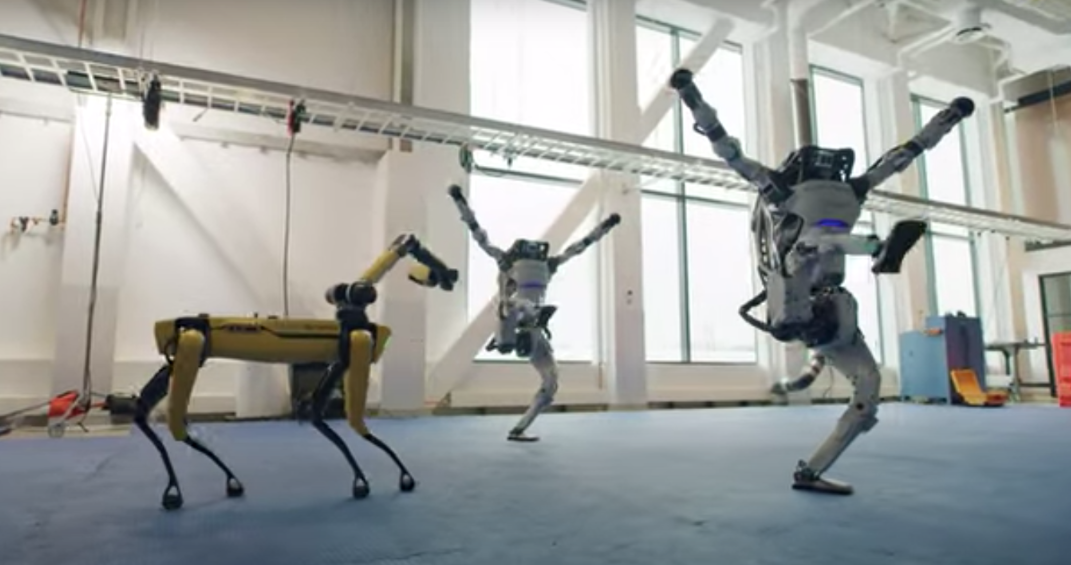

As humanoid robotics advances, the prospect of AI robots coexisting with humans is becoming increasingly tangible. Humanoid robots, designed to replicate human appearance and behavior, are attracting attention across a range of industries. However, the ethical challenges they raise, such as job displacement, privacy concerns, and emotional manipulation, demand careful consideration. In this article, we explore these challenges using examples such as Tesla's Optimus and Hanson Robotics' Sophia.

Job Displacement: A Growing Concern in Humanoid Robotics

One of the most pressing ethical issues in humanoid robotics is job displacement. As humanoid robots like Tesla's Optimus become more advanced, there is a real concern that they will replace human workers across many industries. According to a 2023 survey by the International Federation of Robotics (IFR), 75% of respondents feared losing their jobs to automation. This figure reflects the anxiety many people feel as AI robots take on more tasks.

Tesla's Optimus, a humanoid robot designed to work in factories, is a prominent example of where humanoid robotics is heading. While it may improve production efficiency, its widespread adoption could cost thousands of workers their jobs. Because many factory roles consist of the repetitive tasks such robots are built to perform, the societal impact of this kind of automation could be profound.

Experts argue that while humanoid robots like Optimus can drive economic growth, they must be integrated into society in a way that does not leave workers behind. Some propose solutions such as retraining programs and universal basic income to mitigate the negative effects of job displacement.

Privacy Concerns: The Dangers of Data Collection

Another significant concern with humanoid robots is the potential invasion of privacy. Robots equipped with advanced AI need large amounts of data to operate effectively, and that data can include personal information, facial images used for recognition, and records of people's emotional responses. This raises serious questions about data security and privacy. Without regulation, humanoid robots could become tools for surveillance rather than assistance.

In the European Union, the Ethics Guidelines for Trustworthy AI (2019) and the AI Act (adopted in 2024) together stress transparency, privacy, and data governance in AI systems. Applied to humanoid robots like Sophia, these principles mean that such robots must not compromise individuals' privacy or personal freedoms.

Emotional Manipulation: The Ethical Dilemma

Humanoid robots are designed to interact with humans on an emotional level, which opens the door to manipulation. Robots like Sophia are programmed to recognize human emotions and respond accordingly. While this makes them potentially useful in fields such as healthcare and customer service, it also raises the concern that these capabilities could be used to manipulate people's emotions for commercial gain or to influence their behavior.

In healthcare, humanoid robots can provide companionship to elderly people, using emotional cues to comfort and engage them. While this has significant benefits, there is also a concern that such robots could be used to manipulate vulnerable individuals for the financial or personal gain of those who deploy them, which complicates the ethics of their use.

Experts suggest that while humanoid robots can be valuable companions, their emotional programming should be transparent and governed by ethical standards. This would help ensure that robots serve humanity’s best interests without crossing ethical boundaries.

Summary: Can AI Robots Truly Coexist with Humans?

The integration of humanoid robots into society brings both tremendous potential and serious ethical concerns. While humanoid robotics can enhance efficiency and improve lives, challenges such as job displacement, privacy invasion, and emotional manipulation must be addressed. As we continue to develop and deploy these robots, it is crucial to balance innovation with ethics to ensure a harmonious coexistence between humans and AI robots.