Abstract

Qwen3, the latest open-source large language model from Alibaba's Tongyi Lab, has fundamentally redefined the standards for open-source AI through its groundbreaking technological innovations and exceptional performance metrics. This comprehensive analysis examines Qwen3's technical specifications, innovative architecture, performance advantages, and application potential, providing readers with an in-depth understanding of this revolutionary AI model.

Technological Breakthroughs of Qwen3

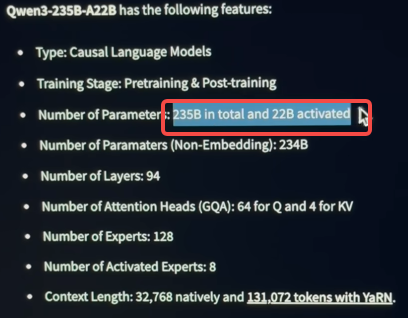

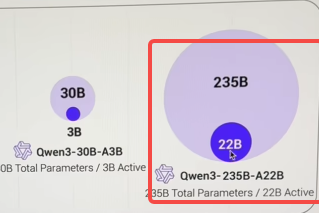

Perfect Balance Between Parameter Scale and Efficiency

The flagship Qwen3 model has 235 billion total parameters, yet its mixture-of-experts (MoE) design activates only about 22 billion of them for each token, which sharply reduces the compute needed at inference time. This design reportedly lets Qwen3 reach full performance on just four H20 GPUs, with memory consumption roughly one-third that of models with comparable performance. The advance effectively "halves" computational costs and substantially lowers the barrier to enterprise deployment.
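The sparse activation described above can be illustrated with a minimal sketch of top-k expert routing. This is a toy and not Qwen3's actual implementation: the eight scalar "experts", the gate logits, and the function names `top_k_route` and `moe_forward` are all hypothetical, chosen only to show that a router evaluates k experts per input and skips the rest. The last line uses the 22 billion and 235 billion figures quoted in the text.

```python
import math

def top_k_route(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the k weights sum to 1.
    peak = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, gate_logits, k=2):
    """Combine the outputs of the k routed experts; the others are never called."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))

# Hypothetical toy experts: expert i multiplies its input by (i + 1).
experts = [lambda x, s=s: s * x for s in range(1, 9)]
# Hypothetical router scores for one input token.
gate_logits = [0.1, 2.0, -1.0, 0.3, 1.5, -0.5, 0.0, 0.2]

routes = top_k_route(gate_logits, k=2)
output = moe_forward(3.0, experts, gate_logits, k=2)

# Share of parameters active per token, from the figures quoted in the article.
active_fraction = 22 / 235
```

With these toy values the router selects experts 1 and 4 and ignores the other six, and `active_fraction` comes out at roughly 9.4%, which is why per-token compute is far lower than the total parameter count suggests.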

This design is consistent with the findings of Sun et al. (2025), whose research indicates that smaller language models can offer better computational efficiency and deployment flexibility while maintaining high performance. By activating only a fraction of its parameters at a time, Qwen3 combines the capability of a large model with the efficiency of a small one.

Pioneering Hybrid Reasoning Architecture

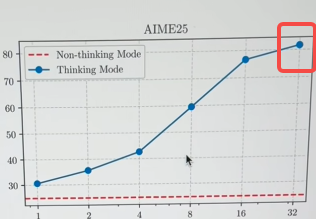

Qwen3's most distinctive innovation is its hybrid reasoning architecture, which integrates "fast thinking" and "slow thinking" within a single model. The model can answer simple questions quickly and with little computation, and it can switch to a more deliberate, step-by-step thinking process for complex reasoning tasks. This dual-mode operation reduces energy consumption on easy queries and improves accuracy on hard ones.
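A caller-side sketch of how an application might choose between the two modes is shown below. Everything here is an assumption for illustration: the keyword heuristic, the word-count threshold, and the function names `needs_slow_thinking` and `build_messages` are hypothetical. The `/think` and `/no_think` suffixes follow the soft-switch convention described in the Qwen3 model cards; verify them against the model version you deploy.

```python
# Hypothetical cues that a prompt needs multi-step reasoning.
REASONING_HINTS = ("prove", "derive", "step by step", "debug", "why")

def needs_slow_thinking(prompt, max_fast_words=30):
    """Crude heuristic: long prompts or reasoning keywords get the slow mode."""
    text = prompt.lower()
    is_long = len(text.split()) > max_fast_words
    has_hint = any(hint in text for hint in REASONING_HINTS)
    return is_long or has_hint

def build_messages(prompt):
    """Return a chat message list with a mode switch appended to the prompt."""
    # Assumed soft switches: "/think" enables the deliberate mode,
    # "/no_think" requests a direct answer.
    switch = "/think" if needs_slow_thinking(prompt) else "/no_think"
    return [{"role": "user", "content": f"{prompt} {switch}"}]

fast = build_messages("What is the capital of France?")
slow = build_messages("Prove that the square root of 2 is irrational.")
```

Here `fast` ends with `/no_think` and `slow` ends with `/think`. A production system would likely replace the keyword heuristic with something more robust, or simply let the user pick the mode.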

This design is relevant to the findings of Miliani et al. (2025) on causal reasoning in large language models. Their study shows that even top-tier models struggle to reach 80% accuracy on complex reasoning tasks. Qwen3's hybrid reasoning architecture targets this weakness by using its "slow thinking" mode to improve accuracy in complex reasoning scenarios.

Performance Metrics of Qwen3

Mathematical Reasoning Capabilities

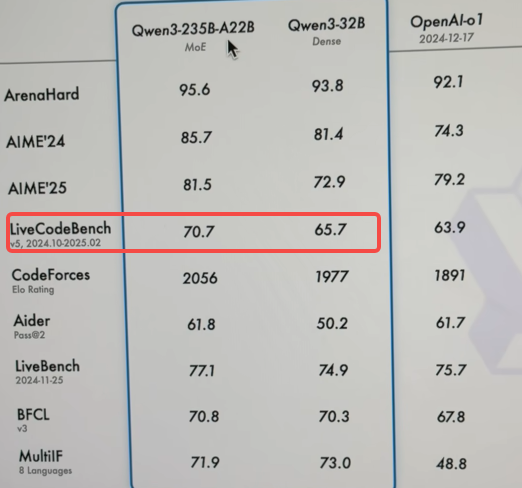

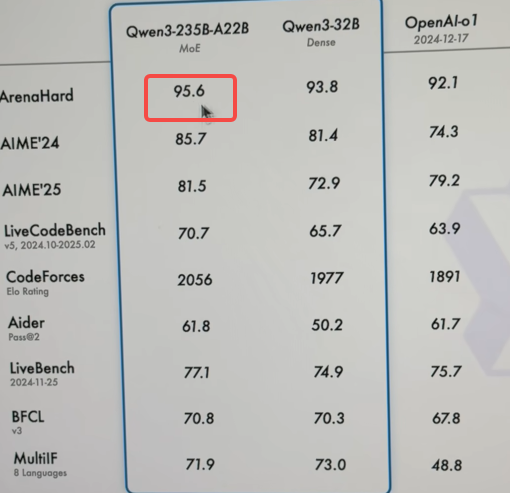

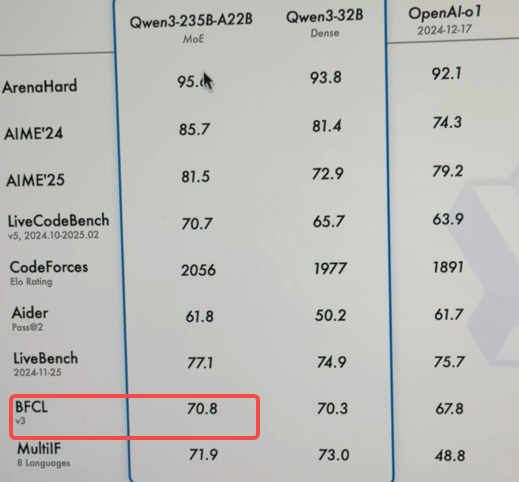

On AIME25, a widely used mathematical reasoning benchmark based on the 2025 American Invitational Mathematics Examination, Qwen3 scored 81.5, substantially outperforming other domestic and international open-source models. The score reflects Qwen3's strength on difficult logical reasoning and mathematical problems, which makes it well suited to scientific research and educational applications.

Code Generation Proficiency

On the LiveCodeBench coding evaluation, Qwen3 broke through the 70-point threshold, reaching a level comparable to premium commercial models. This indicates strong practical value in software development, automated programming, and related fields.

Human Preference Alignment

In the Arena-Hard human preference alignment evaluation, Qwen3 took the top position with a score of 95.6, demonstrating its ability to understand and fulfill human requirements. This result is consistent with the research of Cao et al. (2025), which evaluated Qwen series models in medical patient education and confirmed their effectiveness in human-machine interaction within professional domains, particularly on readability, utility, and satisfaction metrics.

Tool Utilization Capabilities

On the Berkeley Function-Calling Leaderboard (BFCL), which tests tool-use ability, Qwen3 set a new high score of 70.76, indicating improved precision and efficiency when acting as an AI agent that calls tools autonomously. Strong results on complex tasks have also been reported for the wider Qwen family: Lu et al. (2024) found that Qwen series multimodal models excel at vision-language fusion, surpassing open-source models of equivalent scale.
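To make concrete what a tool-use evaluation measures, the sketch below scores whether a model's emitted function call matches an expected call. It is a deliberately simplified, hypothetical checker and not the benchmark's actual scoring code; the JSON shape (`name` plus `arguments`), the `get_weather` tool, and the sample outputs are invented for illustration.

```python
import json

def score_call(model_output, expected):
    """Return True if the output parses as JSON and matches the expected call."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return False  # Malformed output counts as a failed tool call.
    if not isinstance(call, dict):
        return False
    return (call.get("name") == expected["name"]
            and call.get("arguments") == expected["arguments"])

# Hypothetical reference call for a single test case.
expected = {"name": "get_weather",
            "arguments": {"city": "Hangzhou", "unit": "celsius"}}

# Hypothetical model outputs: correct, wrong argument, and malformed JSON.
outputs = [
    '{"name": "get_weather", "arguments": {"city": "Hangzhou", "unit": "celsius"}}',
    '{"name": "get_weather", "arguments": {"city": "Beijing", "unit": "celsius"}}',
    'get_weather(city="Hangzhou")',
]

results = [score_call(o, expected) for o in outputs]
accuracy = sum(results) / len(results)
```

Only the first output passes, so accuracy on this toy set is one in three. A real evaluation covers many more cases, such as multiple or parallel calls and argument type checks.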

Multimodal Capabilities of Qwen3

Beyond pure text processing, the Qwen family also shows strength in multimodal tasks. Research by Lu et al. (2024) indicates that Qwen-VL series models perform exceptionally well in vision-language fusion, even outperforming certain proprietary models. These results suggest a strong foundation for Qwen3 in image comprehension, visual reasoning, and related tasks.

Comparison with Competitive Models

On the benchmarks above, Qwen3 surpasses top-tier models such as DeepSeek-R1 and OpenAI's o1, with particularly notable advantages in mathematical reasoning, code generation, and human preference alignment. In the medical domain, Cao et al. (2025) compared Qwen with Baichuan 2, ChatGPT-4.0, and PaLM 2 and found that, while each model has different strengths across dimensions, Qwen series models deliver competitive overall performance.

Application Prospects and Accessibility

One of Qwen3's greatest advantages is its accessibility: users can try all of the model's functions directly through the Tongyi Qianwen app. This convenient access significantly lowers the barrier to using advanced AI technology and gives individual users, researchers, and enterprises equal opportunity to benefit from it.

Qwen3 also shows broad potential in vertical domains. Research by Cao et al. (2025) confirms the potential of Qwen series models in medical education, and Qwen3's strong mathematical reasoning and code generation make it particularly valuable in scientific research, education, and software development.

Conclusion

As the latest release from Alibaba's Tongyi Lab, Qwen3 has raised the ceiling for open-source large language models through its hybrid reasoning architecture, efficient mixture-of-experts parameter design, and comprehensive capability improvements. Its strong performance in mathematical reasoning, code generation, human preference alignment, and tool use, combined with its low barrier to access, makes it one of the most competitive and valuable open-source large language models in the current AI landscape.

As AI technology continues to evolve and application scenarios expand, Qwen3 is poised to play a crucial role across multiple domains including scientific research, education, healthcare, and software development, driving artificial intelligence technology to serve human society more extensively and profoundly.